July 20, 2018

by Oleg Dzhimiev

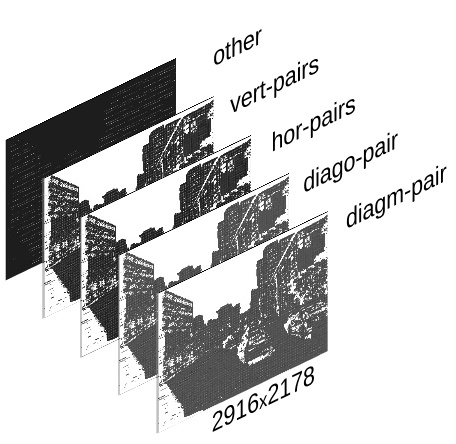

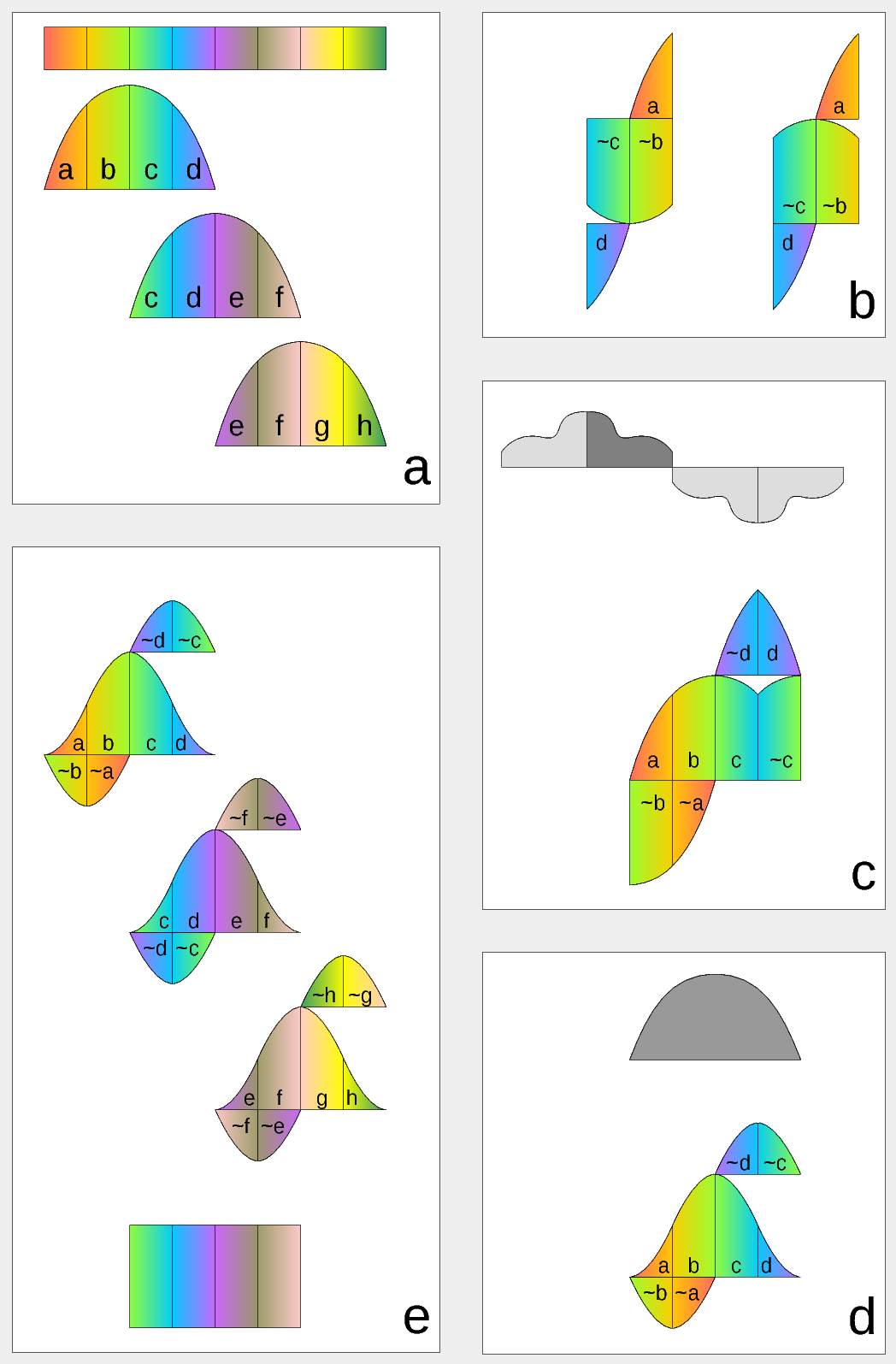

Fig.1 TIFF image stack

The input is a <filename>.tiff – a TIFF image stack generated by an ImageJ Java plugin (using Bio-Formats), with Elphel-specific information stored in ImageJ-written TIFF tags.

Reading and formatting image data for TensorFlow can be split into the following subtasks:

- convert a TIFF image stack into a NumPy array

- extract information from the TIFF header tags

- reshape the array and perform a few other manipulations based on the information from the tags.

To do this, we have created a few Python scripts (see python3-imagej-tiff: imagej_tiff.py) that use Pillow, NumPy, Matplotlib, etc.

Usage:

~$ python3 imagej_tiff.py <filename>.tiff

It prints the header info to the terminal and displays the layers (and decoded values) using Matplotlib.
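The first two subtasks can be sketched with Pillow and NumPy alone. This is a minimal illustration, not the code in imagej_tiff.py: the function names are hypothetical, and it assumes the ImageJ header sits in the standard ImageDescription tag (270) as `key=value` lines. The Elphel-specific tag contents are not decoded here.

```python
import numpy as np
from PIL import Image


def read_tiff_stack(path):
    """Load every page of a multi-page TIFF into a (layers, height, width) array."""
    with Image.open(path) as img:
        img.seek(0)
        # ImageJ keeps its header in the first page's ImageDescription tag (270)
        description = img.tag_v2.get(270, "")
        layers = []
        for i in range(getattr(img, "n_frames", 1)):
            img.seek(i)  # select page i of the stack
            layers.append(np.array(img))
    return np.stack(layers), description


def parse_imagej_description(description):
    """Split ImageJ-style 'key=value' lines into a dict of strings."""
    info = {}
    for line in description.splitlines():
        key, sep, value = line.partition("=")
        if sep:  # skip lines without '='
            info[key.strip()] = value.strip()
    return info
```

For example, `stack, desc = read_tiff_stack("<filename>.tiff")` gives the layers as one NumPy array, and `parse_imagej_description(desc)` returns the header fields that the reshaping step would then rely on.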


June 20, 2018

by Olga Filippova

Elphel booth at CVPR 2018 Expo

Elphel is presenting at the CVPR 2018 Expo: Long Range Passive 3D Reconstruction System, providing NNs with custom training sets and enabling real-time 3D-aware machine learning systems.

Please visit us at CVPR, Booth 132.

You can also find our presentation and related articles on the wiki page https://wiki.elphel.com/wiki/CVPR2018

Gnu and Open Hardware are here

Andrey and Oleg at booth 132

Elphel Presentation at CVPR 2018 Expo

Getting ready for a busy day at CVPR 2018

Visitors at booth 132

Creating a 3-D model of the CVPR 2018 Expo

May 6, 2018

by Andrey Filippov

Figure 1. Aircraft positions during descent captured with the quad stereo camera. Each animation frame corresponds to the available 3-D model.

While we continue to work on the multi-sensor stereo camera hardware (we plan to double the number of sensors to capture single-exposure HDR image sets) and develop code to obtain ground truth data for CNN training, we had some fun testing the camera’s ability to capture aircraft positions in 3-D space, using a completely passive method, of course.

We found a suitable spot about 2.5 km from the beginning of runway 34L of Salt Lake City International Airport (the exact location is shown in the model viewer), so approaching aircraft would pass almost over our heads. With the camera’s 60°(H)×45°(V) field of view, aircraft are visible when they are 270 m away horizontally.


April 20, 2018

by Oleg Dzhimiev

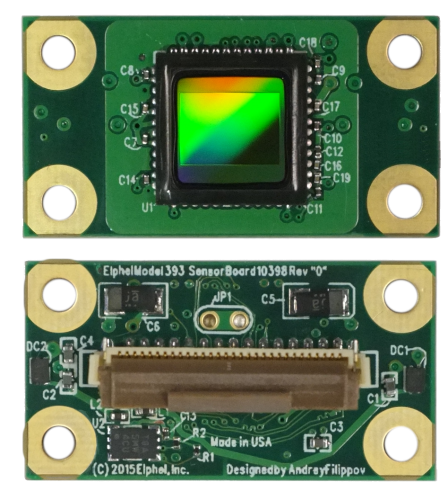

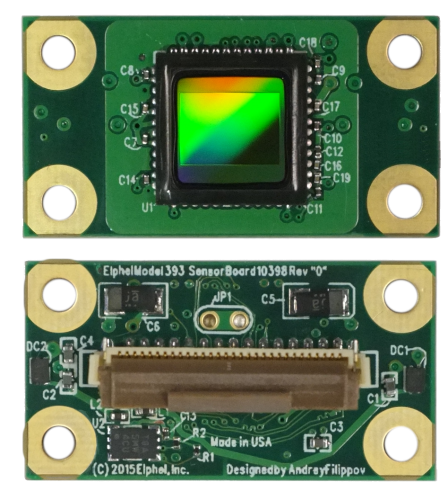

Fig.1 MT9F002

MT9F002

This post briefly covers the implementation of a driver for ON Semi’s MT9F002 14 MPix image sensor for the 10393 system boards; the steps are fairly general. The driver is included in the latest software/firmware image (20180416). The following features are programmable:

- window size

- horizontal & vertical mirror

- color gains

- exposure

- fps and trigger-synced ports

- frame-based command sequence, allowing the settings of any image to be changed up to 16 frames ahead (this did not need to be implemented, as it is the part of the driver common to all sensors)

- automatic cable phase adjustment during initialization for cables of various lengths
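The "up to 16 frames ahead" command sequence can be pictured as a ring buffer with one slot per future frame. The toy model below is hypothetical and is not Elphel's driver code: it assumes "ahead" means 1 to 16 frames after the current one, and the register names used with it are made up for illustration.

```python
MAX_AHEAD = 16  # from the post: settings can be scheduled up to 16 frames ahead


class FrameCommandQueue:
    """Toy model of a frame-based command sequence (hypothetical, not the driver code)."""

    def __init__(self):
        self.current_frame = 0
        # ring buffer: slot i holds settings for frames whose number % MAX_AHEAD == i
        self.slots = [{} for _ in range(MAX_AHEAD)]

    def schedule(self, frame, register, value):
        """Queue register=value to take effect at absolute frame number `frame`."""
        ahead = frame - self.current_frame
        if not 1 <= ahead <= MAX_AHEAD:
            raise ValueError(f"frame {frame} is {ahead} frames ahead; allowed 1..{MAX_AHEAD}")
        self.slots[frame % MAX_AHEAD][register] = value

    def next_frame(self):
        """Advance to the next frame and return the settings applied to it."""
        self.current_frame += 1
        slot = self.slots[self.current_frame % MAX_AHEAD]
        applied = dict(slot)
        slot.clear()  # free the slot for the frame 16 frames later
        return applied
```

For example, `q.schedule(q.current_frame + 3, "exposure", 100)` queues a hypothetical exposure change three frames ahead. Because 1 to 16 frames ahead map to 16 distinct slots, pending settings for different frames never collide.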


March 20, 2018

by Andrey Filippov

Figure 1. Dual quad-camera rig mounted on a car

Following the plan laid out in the earlier post, we’ve built a camera rig for capturing training/testing image sets. The rig consists of two quad cameras, as shown in Figure 1. Each camera has four identical 10338E Sensor Front Ends (SFE) with 5 MPix MT9P006 image sensors. We will upgrade the cameras to 18 MPix SFEs later this year; the 103981 circuit boards are in production now.


February 5, 2018

by Andrey Filippov

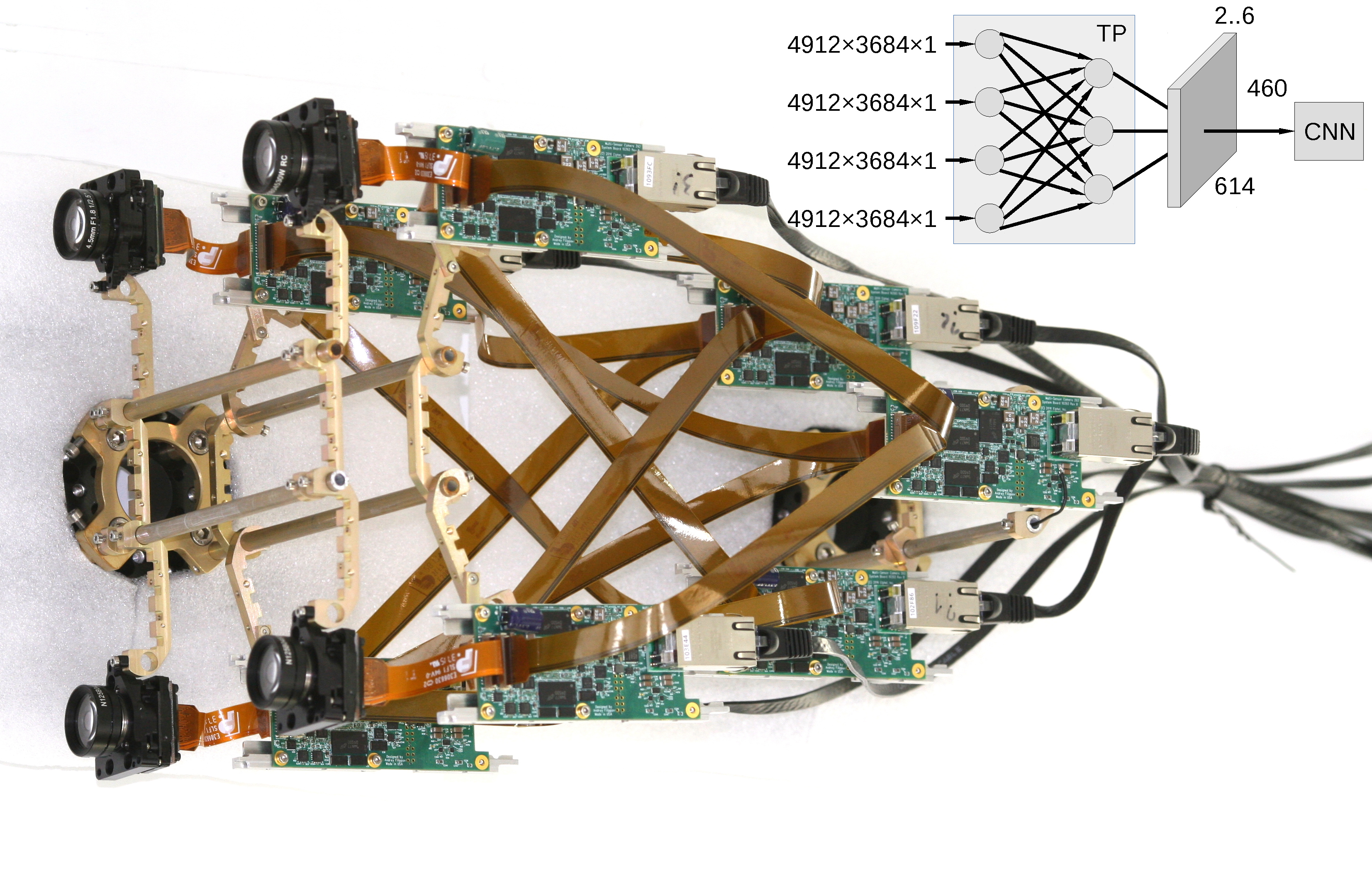

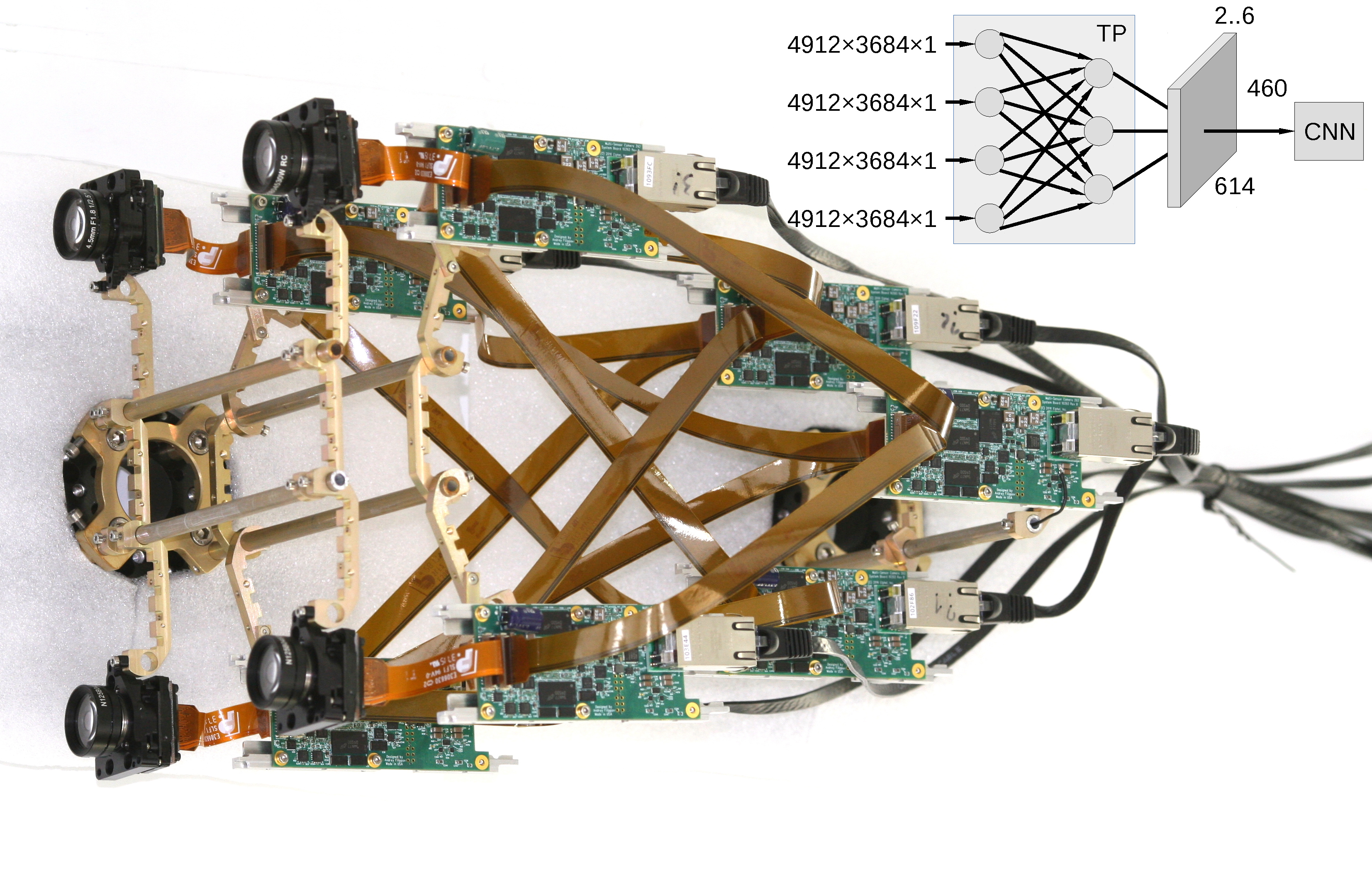

Figure 1. Multi-board setup for the TP+CNN prototype

Featured on Image Sensors World

This article describes our next steps, continuing the year-long research on high resolution multi-view stereo for long distance ranging and 3-D reconstruction. We plan to combine our methods of high resolution image calibration and processing, the already emulated functionality of the Tile Processor (TP), the RTL code developed for its implementation, and a Convolutional Neural Network (CNN). Compared to a CNN alone, this approach promises a more than hundredfold reduction in the number of input features without sacrificing the universality of end-to-end processing. The TP part of the system handles the high resolution aspects of image acquisition (such as optical aberration correction and image rectification) and preserves deep sub-pixel super-resolution using an efficient implementation of 2-D linear transforms. The Tile Processor requires no training: only a few hyperparameters define its operation, and all the application-specific processing and “decision making” is delegated to the CNN.


January 30, 2018

by Oleg Dzhimiev

Photo Finish: all cars driving in the same direction effect

Elphel cameras have had a Photo Finish mode since 2005 and the older 333 model. It was first ported to the 353 generation, and then from the 353 to the 393 camera systems.

In this mode the camera samples scan lines and delivers composite images as video frames. Due to the Bayer pattern of the sensor, the minimal sample height is 2 lines. At the minimal sample height, the maximum sampling rate is 2300 line pairs per second. The width of a composite frame can be up to 16384 px (it is determined by WOI_HEIGHT). A sequence of these frames can simply be joined together without any missing scan lines.
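The numbers above give a quick estimate of how much time one composite frame covers. This sketch assumes that the 16384 px dimension set by WOI_HEIGHT counts sampled sensor lines; the function name is hypothetical.

```python
def composite_frame_seconds(frame_lines, sample_lines=2, samples_per_second=2300):
    """Time needed to fill one composite photo finish frame.

    frame_lines: composite frame size, in sensor lines, along the time axis
                 (assumed to be the dimension set by WOI_HEIGHT, up to 16384)
    sample_lines: lines per sample (2 because of the Bayer pattern)
    samples_per_second: sampling rate (2300 line pairs per second at 2-line samples)
    """
    samples = frame_lines / sample_lines
    return samples / samples_per_second
```

Under that assumption, a full 16384 px frame holds 8192 two-line samples, so `composite_frame_seconds(16384)` is about 3.56 s at the maximum rate.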

The current firmware (20180130) includes a photo finish demo:

http://<camera_ip>/photofinish

A couple of notes on the 393 photo finish implementation:

- it works in the JP4 format (COLOR=5). Because demosaicing is not done in this format, no extra scan lines are required, which simplified the FPGA logic.

- fps is controlled:

  - by exposure for the sensor in free-run mode (TRIG=0, delivers the max fps possible)

  - by the external or internal trigger period for the sensor in snapshot mode (TRIG=4, slightly lower fps than in free-run mode)

See our wiki’s Photo-finish article for instructions and examples.

January 8, 2018

by Andrey Filippov

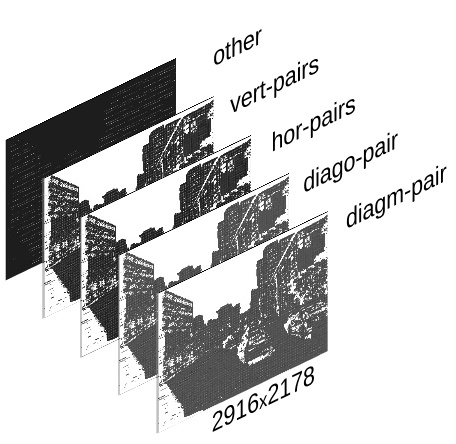

This post continues the discussion of small tile, space-variant frequency domain (FD) image processing in the camera. It demonstrates that the modulated complex lapped transform (MCLT) of Bayer mosaic color images requires almost three times fewer computational resources than the transform of full RGB color data.

“Small Tile” and “Space Variant”

Why “small tile”? Most camera images have a short (up to a few pixels) correlation/mutual information span related to the properties of the acquisition system: optical aberrations cause a single scene object point to influence a small area of sensor pixels. When matching multiple images, increasing the window size reduces the lateral (x,y) resolution, so many 3-D reconstruction algorithms do not use windows at all and process every pixel individually. Another limitation on the window size comes from the fact that FD conversions (Fourier and similar) in Cartesian coordinates are shift-invariant but sensitive to scale and rotation mismatch. So when targeting, say, 0.1 pixel disparity accuracy, the error accumulated over the window width due to scale mismatch should not exceed that value. With 8×8 tiles (16×16 overlapped), the acceptable scale mismatch (such as focal length variation) should be under 1%. That tolerance is reasonable, but it cannot get much tighter.
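A quick check of the 1% figure, under our own assumption that the error is measured from the tile center: a scale mismatch s displaces a point at distance r from the center by s·r, and in a 16×16 overlapped window r is at most 8 px.

```latex
\begin{aligned}
\Delta x &= s\,r, \qquad r_{\max} = \tfrac{16}{2} = 8\ \text{px} \\
s\,r_{\max} \le 0.1\ \text{px} \;&\Rightarrow\; s \le \tfrac{0.1}{8} = 0.0125 = 1.25\,\%
\end{aligned}
```

With that reading, keeping the mismatch under 1% limits the edge displacement to 0.08 px, just inside the 0.1 px target.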

What is “space variant”? One of the most universal operations performed in the FD is convolution (closely related to correlation), which exploits the convolution-multiplication property. Mathematically, convolution applies the same operation to each point of the source data, so a shifted object in the source image produces just a shifted result after convolution. In the physical world this is a close approximation, but not an exact one. Stars imaged by a telescope may appear sharper in the center of the field and more blurred in the peripheral areas. While angularly close stars produce images of almost the same shape, distant ones do not. This does not completely invalidate the convolution approach, but it requires the kernel to vary (smoothly) over the input image [1, 2], making it a space-variant kernel.
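The convolution-multiplication property and the shift behavior described above can be checked in a few lines of NumPy. This is a 1-D sketch using the ordinary DFT and circular convolution, not the MCLT used in the camera; the function names are ours.

```python
import numpy as np


def circular_convolve_direct(x, k):
    """Circular convolution computed point by point in the signal domain."""
    n = len(x)
    return np.array([sum(x[j] * k[(i - j) % n] for j in range(n)) for i in range(n)])


def circular_convolve_fd(x, k):
    """The same convolution as a per-frequency multiplication of DFT coefficients."""
    return np.real(np.fft.ifft(np.fft.fft(x) * np.fft.fft(k)))
```

Both functions give the same result, and shifting `x` only shifts the output. A space-variant version would pick a different kernel `k` for each tile instead of one kernel for the whole image.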

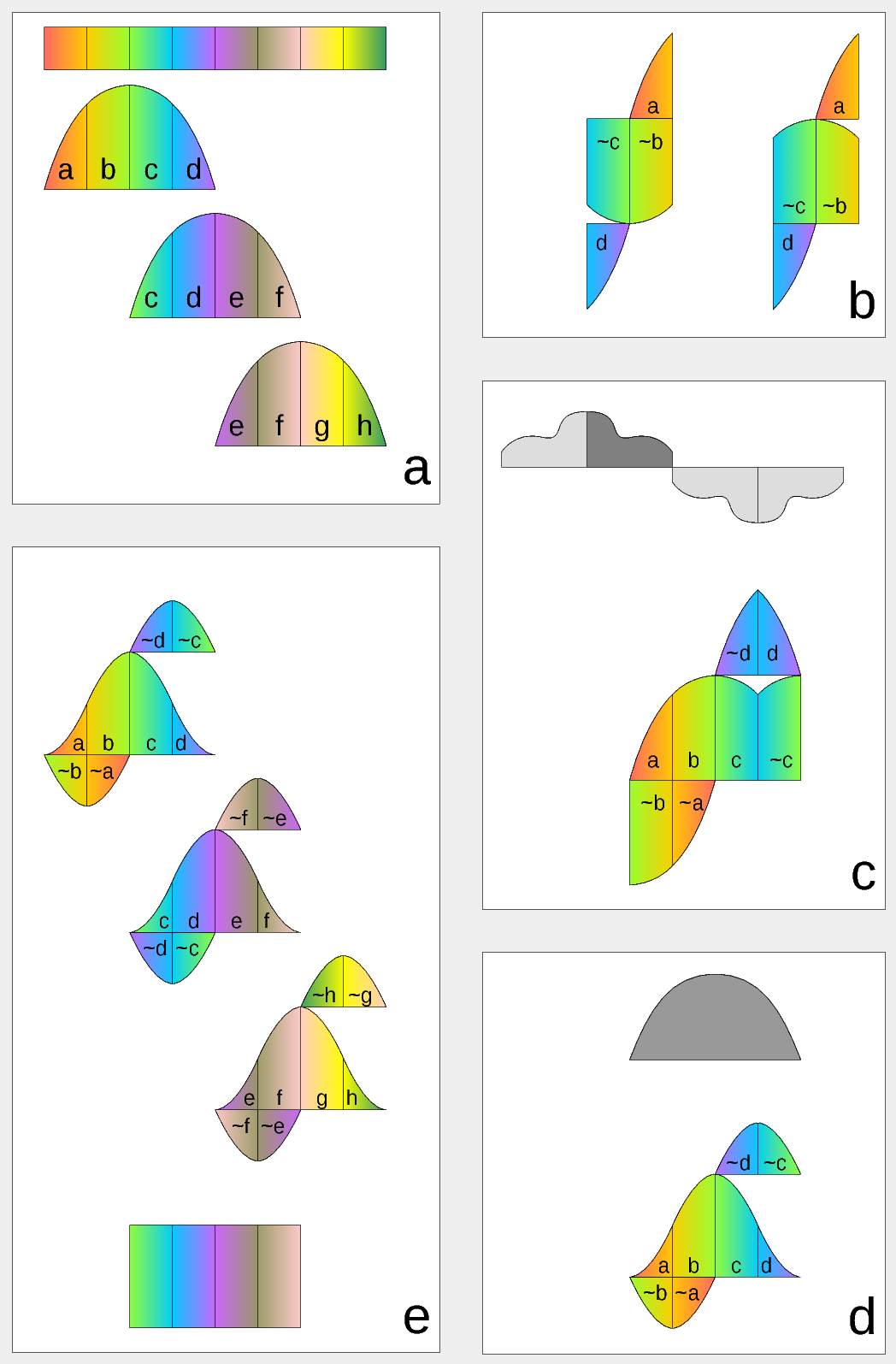

Figure 1. Complex Lapped Transform with DCT-IV/DST-IV: time-domain aliasing cancellation (TDAC) property. a) selection of overlapping 2N-long input subsequences, multiplication by a sine window; b) creation of N-long sequences for DCT-IV (left) and DST-IV (right); c) (after frequency domain processing) extension of an N-long sequence using DCT-IV boundary conditions (DST-IV processing is similar); d) second multiplication by the sine window; e) combining partial data
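The TDAC property in Figure 1 can be reproduced numerically for the cosine half of the transform. This sketch uses the direct MDCT formula, which is mathematically equivalent to folding the windowed 2N samples and applying DCT-IV (steps a and b). The 2/N inverse scaling is our normalization choice, which makes the sine-windowed overlap-add exact. The MDST (sine) half of the MCLT is omitted.

```python
import numpy as np


def mdct_basis(n):
    """N x 2N MDCT basis: cos(pi/N * (t + 1/2 + N/2) * (k + 1/2))."""
    t = np.arange(2 * n)
    k = np.arange(n)
    return np.cos(np.pi / n * np.outer(k + 0.5, t + 0.5 + n / 2))


def sine_window(n):
    """2N-long sine window; w[t]**2 + w[t + N]**2 == 1 (Princen-Bradley condition)."""
    t = np.arange(2 * n)
    return np.sin(np.pi * (t + 0.5) / (2 * n))


def mdct_overlap_add(x, n):
    """Split x into 2N-long blocks with hop N, transform, invert and recombine."""
    basis = mdct_basis(n)
    w = sine_window(n)
    out = np.zeros(len(x))
    for start in range(0, len(x) - 2 * n + 1, n):
        block = w * x[start:start + 2 * n]       # a) overlapping block times sine window
        coeffs = basis @ block                   # b) N coefficients per 2N samples
        # frequency-domain processing would modify `coeffs` here
        recon = (2.0 / n) * (basis.T @ coeffs)   # c) inverse, still containing time-domain aliasing
        out[start:start + 2 * n] += w * recon    # d) second window, e) overlap-add cancels aliasing
    return out
```

Every sample covered by two overlapping blocks is restored exactly, even though each block keeps only N coefficients for its 2N samples. The first and last N samples lack a partner block, so they are not reconstructed.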

There is another issue related to space-variant kernels. Fractional pixel shifts are required for multiple steps of the processing: aberration correction (obvious in the case of lateral chromatic aberration), and image rectification before matching, which accounts for lens optical distortion, camera orientation mismatch and epipolar geometry transformations. Traditionally, this is handled by image rectification, which resamples the pixel values onto a new grid using some type of interpolation. This process distorts the signal and introduces non-linear errors that reduce the accuracy of the correlation, which is important for subpixel disparity measurements. Our approach completely eliminates resampling: it performs the integer pixel shift in the pixel domain and delegates the residual fractional pixel shift (±0.5 pix) to the FD, where it is implemented as a cosine/sine phase rotator. Multiple sources of the required pixel shift are combined for each tile, and then a single phase rotation is performed as the last step of the pixel domain to FD conversion.
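The split between an integer shift in the pixel domain and a residual fractional shift applied as a phase rotation can be illustrated in 1-D. This sketch uses a plain DFT phase rotation as a stand-in for the MCLT cosine/sine rotator described in the post; the function names are hypothetical. It uses odd lengths, because the Nyquist bin of an even-length DFT cannot be rotated by a fractional shift and still yield a real signal.

```python
import numpy as np


def split_shift(total):
    """Split a shift into an integer part and a residual in [-0.5, 0.5]."""
    integer = int(np.round(total))
    return integer, total - integer


def fd_shift(signal, shift):
    """Shift a periodic 1-D signal by `shift` samples with a DFT phase rotation."""
    freqs = np.fft.fftfreq(len(signal))  # cycles per sample
    rotated = np.fft.fft(signal) * np.exp(-2j * np.pi * freqs * shift)
    return np.real(np.fft.ifft(rotated))


def shift_tile(signal, total):
    """Integer part in the pixel domain (np.roll), fractional part in the FD."""
    integer, residual = split_shift(total)
    return fd_shift(np.roll(signal, integer), residual)
```

Several shift sources can simply be summed before calling `shift_tile`, so only one phase rotation per tile is needed, with no interpolation of pixel values.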

Frequency Domain Conversion with the Modulated Complex Lapped Transform

The Modulated Complex Lapped Transform (MCLT) [3] can be used to split an input sequence into overlapping fragments that are processed separately and then recombined without block artifacts. A popular application is signal compression, where “processed separately” means compressed by the encoder (possibly lossily) and then reconstructed by the decoder. The MCLT is similar to the MDCT, which is implemented with DCT-IV, but it additionally preserves the signal phase and allows it to be modified in the frequency domain. This feature is required for our application (fractional pixel shifts and asymmetrical lens aberrations modify the phase), and the MCLT includes both the MDCT and the MDST (which use DCT-IV and DST-IV, respectively). For image processing (2-D conversion), four sub-transforms are needed:

- horizontal DCT-IV followed by vertical DCT-IV

- horizontal DST-IV followed by vertical DCT-IV

- horizontal DCT-IV followed by vertical DST-IV

- horizontal DST-IV followed by vertical DST-IV
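The four sub-transforms above can be written as separable matrix products. This sketch covers only the DCT-IV/DST-IV stage on an already folded N×N tile; the windowing and folding of the 16×16 input (Figure 1 a, b) are left out, and the dictionary keys are our own labels. Rows are the vertical axis, so a horizontal transform multiplies from the right and a vertical one from the left.

```python
import numpy as np


def dct4_matrix(n):
    """Orthonormal DCT-IV matrix; it is symmetric and its own inverse."""
    k = np.arange(n)
    return np.sqrt(2.0 / n) * np.cos(np.pi / n * np.outer(k + 0.5, k + 0.5))


def dst4_matrix(n):
    """Orthonormal DST-IV matrix; also symmetric and its own inverse."""
    k = np.arange(n)
    return np.sqrt(2.0 / n) * np.sin(np.pi / n * np.outer(k + 0.5, k + 0.5))


def four_subtransforms(tile):
    """Apply the four separable 2-D DCT-IV/DST-IV combinations to a square tile."""
    n = tile.shape[0]
    c, s = dct4_matrix(n), dst4_matrix(n)
    return {
        "cc": c @ tile @ c,  # horizontal DCT-IV, vertical DCT-IV
        "sc": c @ tile @ s,  # horizontal DST-IV, vertical DCT-IV
        "cs": s @ tile @ c,  # horizontal DCT-IV, vertical DST-IV
        "ss": s @ tile @ s,  # horizontal DST-IV, vertical DST-IV
    }
```

Since both matrices are symmetric and self-inverse, applying the same sub-transform twice returns the original tile, which makes the inverse step as cheap as the forward one.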


December 20, 2017

by Oleg Dzhimiev

We have updated the Yocto build system to Poky Rocko, released in October. Here’s a short summary table of the updates:

| | before | after |
|---|---|---|
| Poky | 2.0 (Jethro) | 2.4 (Rocko) |
| gcc | 5.3.0 | 7.2.0 |
| linux kernel | 4.0 | 4.9 |

Other packages got updates as well:

- apache2-2.4.18 => apache2-2.4.29

- php-5.6.16 => php-5.6.31

- udev-182 changed to eudev-3.2.2, etc.

This new version is in the rocko branch for now, but it will be merged into master after a transition period (and the current master will be moved to the jethro branch). Below are a few tips for future updates.


December 13, 2017

by Olga Filippova

MNC393-XCAM partial assembly and parts

The long-anticipated parts for the Long Range camera have arrived!

The mechanical parts for the MNC393-XCAM (Long Range Multi-view Stereo Camera) are machined, tested, and ready to be anodized. This enables us to have the X-camera assembled before the winter holidays. The holiday break will provide a good opportunity to test the camera, capture new photos, and create robust 3D models from calibrated images.

The titanium X-frame of the camera ensures the thermal stability required for consistently accurate 3D measurements. The aluminum enclosure and sealed lens filters weatherproof the system, allowing the intended outdoor use of the camera.

We intend to assemble two cameras: one with a 150 mm distance between the sensors and another with a longer baseline. The expected accuracy for the camera with the shorter baseline is better than 10% at a 200 meter distance. We have achieved 10% accuracy with the H-camera with calibrated sensors, even though its 3D-printed parts were not thermally stable and some error accumulated over time. It was a very pleasant surprise that the software was still able to deal with somewhat uncalibrated images and detect distances very accurately, creating impressive 3D scenes:

The second camera will have a 280 mm distance between the sensors, determined by the longest FPC cables we can use without signal losses. It promises to double the measurable distance at the same degree of accuracy, producing extremely long-range 3D scenes.
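The link between baseline and range follows from standard stereo triangulation, z = B·f/d, which gives a relative depth error of roughly z·δd/(B·f). The sketch below assumes both cameras share the same sensors, lenses and disparity error; the function names are hypothetical.

```python
def relative_depth_error(z, baseline, focal_px, disparity_err_px):
    """Approximate relative depth error dz/z of a rectified stereo pair.

    From z = B * f / d it follows that dz = z**2 / (B * f) * dd, so dz/z = z * dd / (B * f).
    z and baseline must use the same length unit.
    """
    return z * disparity_err_px / (baseline * focal_px)


def range_for_same_error(known_range, known_baseline, new_baseline):
    """Distance at which a new baseline gives the same relative error (z/B held constant)."""
    return known_range * new_baseline / known_baseline
```

With the figures from this post, `range_for_same_error(200, 150, 280)` is about 373 m, a factor of 280/150 ≈ 1.87, close to the doubling mentioned above.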

The Long Range Multi-View Stereo Camera with 4 sensors MNC393-XCAM is planned for release in early 2018.
