June 9, 2015

by Andrey Filippov

Quick update: a new chunk of code is added to the NC393 camera FPGA project. It is a second (of three needed to match the existing NC353 functionality) major parts of the system after the memory controller is finished. This code is just written, it still has to be verified by the simulation first, and then by synthesizing and by running it on the actual hardware. We plan to do that when the third part – image compressors will be ported to the new system too. The added code deals with receiving data from the image sensors and pre-processing it before storing in the video memory. FPGA-based systems are very flexible and many other configurations like support of multi-lane serial interface sensors or using several camera ports to connect a single large high-speed sensor are possible and will be implemented later. The table below summarizes parameters of the current code only.

Table 1. NC393 Sensor Connections and Pre-processing

| Feature |

Value |

|---|

| Number of sensor ports |

4 |

| Total number of multiplexed sensors |

16 |

| Total number of multiplexed sensors with existing 10359 multiplexer board |

12 |

| Sensor interface type (implemented in HDL) |

parallel 12 bits |

| Sensor interface hardware compatibility |

parallel LVCMOS/serial differential 8 lanes + clock |

| Sensor interface voltage levels |

programmable up to 3.3V |

| Number of I²C sequencers |

4 (1 per port) |

| Number of I²C sequencers frames |

16 |

| Number of I²C sequencers commands per frame |

64 |

| I²C sequencers commands data width |

16/8 bits |

| Image data width stored |

16/8 bits per pixel |

| Gamma conversion regions per port |

4 |

| Histograms: number of rectangular ROI (Regions of Interest) per port |

4 |

| Histograms: number of color channels |

4 |

| Histograms: number of bins per color |

256 |

| Histograms: width per bin |

18 or 32 bits |

| Histograms: number of histograms stored per sensor |

16 |

May 9, 2015

by Andrey Filippov

Development of the NC393 camera has just passed an important milestone – we completed HDL code that constitutes the core of this new camera, tested most of the Zynq-specific features that were not available in the older Spartan-3 FPGA used in our current NC353 devices. Next development phase will involve porting some of the existing code that deals with sensor interfacing, gamma correction, histograms, color conversion and JPEG/JP4 compression – code that was tested in the thousands of cameras and many billions of processed images, including the applications listed in Wikipedia. New camera is designed primarily for the multisensor applications – up to four connected directly to the system board and more through the multiplexers as we currently do in Eyesis4π cameras. It is the memory controller that had to be redesigned completely, the sensor and compressor channels can reuse most of the existing code and just have 4 instances of the same modules instead of a single one. Starting early this year I’ve got an opportunity to put aside other projects and work full time on the new camera code.

(more…)

April 24, 2015

by Andrey Filippov

Working with the DDR3 Memory interface I was not able to avoid the temptation to investigate more the very useful feature of the modern FPGA devices – individually programmed input/output delay elements on all (or at least many) of its pins. This is needed to both prepare to increase the memory clock frequency and to be able to individually adjust the timing on other pads, such as the sensor ports, especially when switching from the parallel to high speed serial interface of the modern image sensors.

Xilinx Zynq device we are using has both input and output delays on all low-voltage pins used for the memory interface in the camera, but only input ones on the higher voltage range I/O banks. Luckily enough image sensors connected to these banks need just that – data rate to the sensors is much lower than the rate of the data they generate and send to the FPGA.

(more…)

April 23, 2015

by Yuri Nenakhov

Updates: see https://blog.elphel.com/2017/11/developing-with-eclipse-cdt-and-yocto-linux-kernel-and-applications/

Eclipse with C Development Tool (CDT) is a very powerful and feature-rich IDE for developing embedded Linux applications, such as Elphel393 camera. CDT includes CODAN — static code analysis tool which helps user to track possible problems in his code without compiling it, and Code Indexer, giving an auto-complete and code navigating (F3) features. They work independently from compiler, thus parsing the code in the same manner as compiler does is essential for producing meaningful results. As project grows, the interconnections between its parts tend to become more and more complicated, and maintaining the congruency of code processing for compiler and CODAN/Code Indexer becomes a non-trivial task. In the Internet, the most frequent recommendation for users who wish to develop Linux kernel with Eclipse is to disable CODAN feature since messy false error markers make it practically unusable. The situation becomes even worse for developers using external build tools (such as OpenEmbedded’s BitBake) as CODAN relies on output of a CDT-integrated build system to find correct way of code parsing. Anyway, embedded Linux applications usually involve kernel development, so we’ll try to find a practical approach to get the power of CODAN and Code Indexer into our hands.

(more…)

April 3, 2015

by Mikhail Karpenko

Introduction

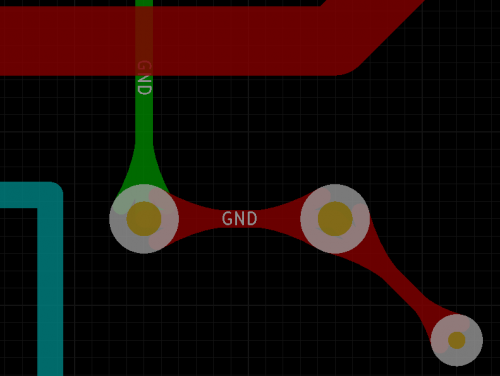

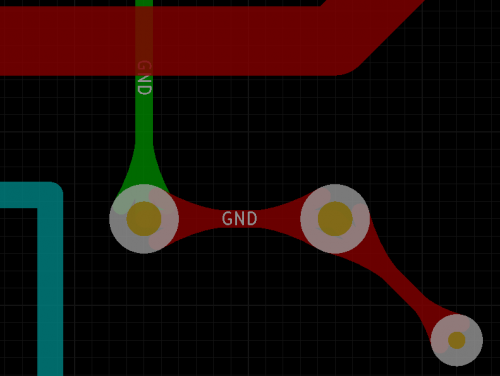

Teardrops in KiCAD

We, at Elphel, are currently using proprietary software for schematic and PCB development and thus are not able to provide our customers with the “real” source files of our designs – pdf and gerber files only. Being free software and open hardware oriented company we would like to replace this software with open source analogues but were not able to accomplish this due to various limitations and inconveniences in design work-flow. We follow the progress in such projects as gEDA and KiCAD and made another attempt to use one them in our work. KiCAD seems to be the most promising design suite considering recent CERN contribution and active community support. I tried to design a simple element, a flexible printed circuit cable, using KiCAD and found out that the PCB design program lacks such useful feature as teardrops.

(more…)

September 10, 2014

by Andrey Filippov

We just tested two samples of Evetar N123B05425W lens that is very similar to Sunex DSL945D described in the previous post.

Lens Specifications

|

Sunex DSL945D |

Evetar N123B05425W |

|---|

| Focal length |

5.5mm |

5.4mm |

| F# |

1/2.5 |

1/2.5 |

| IR cutoff filter |

yes |

yes |

| Lens mount |

M12 |

M12 |

| image format |

1/2.3 |

1/2.3 |

| Recommended sensor resolution |

10Mpix |

10MPix |

July 26, 2014

by Andrey Filippov

We were measuring lens performance since we’ve got involved in the optical issues of the camera design. There are several blog posts about it starting with "Elphel Eyesis camera optics and lens focus adjustment". Since then we improved methods of measuring Point Spread Function (PSF) of the lenses over the full field of view using the target pattern modified from the standard checkerboard type have better spatial frequency coverage. Now we use a large (3m x 7m) pattern for the lens testing, sensor front end (SFE) alignment, camera distortion calibration and aberration measurement/correction for Eyesis series cameras.

Fig.1 PSF measured over the sensor FOV – composite image of the individual 32×32 pixel kernels

So far lens testing was performed for just two purposes – select the best quality lenses (we use approximately half of the lenses we receive) and to precisely adjust the sensor position and tilt to achieve the best resolution over the full field of view. It was sufficient for our purposes, but as we are now involved in the custom lens design it became more important to process the raw PSF data and convert it to lens parameters that we can compare against the simulated achieved during the lens design process. Such technology will also help us to fine-tune the new lens design requirements and optimization goals.

The starting point was the set of the PSF arrays calculated using images acquired from the the pattern while scanning over the range of distances from the lens to the sensor in small increments as illustrated on the animated GIF image Fig.1. The sensor surface was not aligned to be perpendicular to the optical axis of the lens before the measurement -each lens and even sensor chip has slight variations of the tilt and it is dealt with during processing of the data (and during the final alignment of the sensor during production, of course). The PSF measurement based on the repetitive pattern gives sub-pixel resolution (1.1μm in our case with 2.2μm Bayer mosaic pixel period – 4:1 up-sampled for red and blue in each direction), but there is a limit on the PSF width that the particular setup can handle. Too far out-of-focus and the pattern can not be reliably detected. That causes some artifacts on the animations made of the raw data, these PSF samples are filtered during further processing. In the end we are interested in lens performance when it is almost in perfect focus, so scanning too far away does not provide much of the practical value anyway.

(more…)

July 23, 2014

by Oleg Dzhimiev

Description

Running OSLO’s optimization has shown that having a single operand defined is probably not enough. During the optimization run the program computes the derivative matrix for the operands and solves the least squares normal equations. The iterations are repeated with various values of the damping factor in order to determine the optimal value of the damping factor.

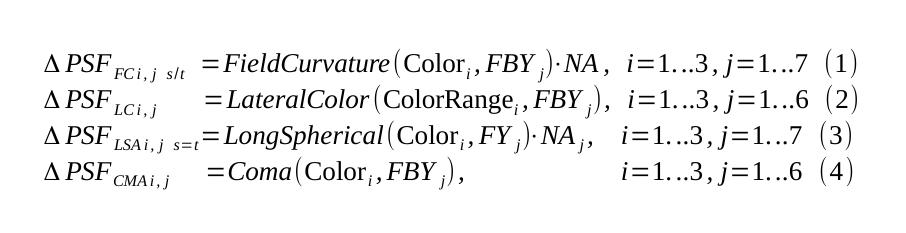

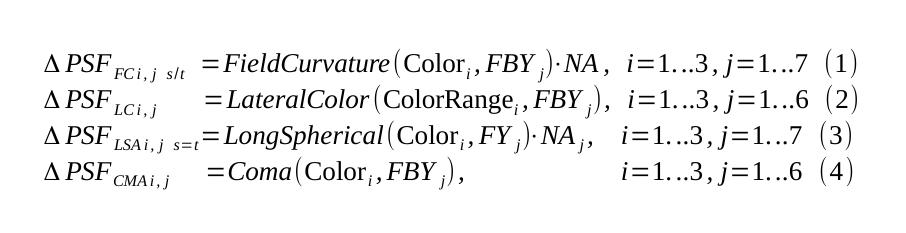

So, extra operands were added to split the initial error function – each new operand’s value is a contribution to the spot size (blurring) calculated for each color, aberration and certain image heights. See Fig.1 for formulas.

Fig.1 Extra Operands

July 1, 2014

by Oleg Dzhimiev

Description

The Error Function calculates the 4th root of the average of the 4th power spot sizes over several angles of the field of view.

(more…)

June 30, 2014

by Oleg Dzhimiev

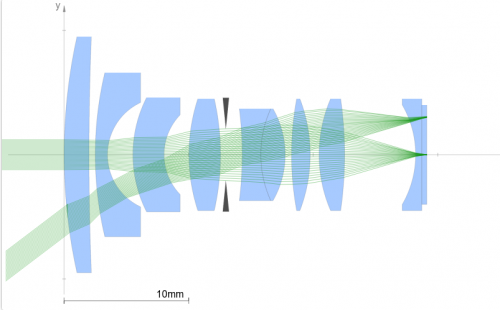

Elphel has embarked on a new project, somewhat different from our main field of designing digital cameras, but closely related to the camera applications and aimed to further improve image quality of Eyesis4π camera.

Eyesis4π is a high resolution full-sphere panoramic and stereophotogrammetric camera. It is a tiled multi-sensor system with a single sensor’s format of 1/2.5″. The specific requirement of such system is uniform angular resolution, since there is no center in a panoramic image.

(more…)

« Previous Page —

Next Page »