June 5, 2013

by Andrey Filippov

Fig.1. Elphel new calibration pattern

Elphel moved to a new calibration facility in May 2013. The new office is designed with the calibration room as its most important space, expandable when needed to the size of the whole office by opening a wide garage door. The back wall of the new calibration room is covered with a large 7m x 3m pattern, illuminated with bright fluorescent lights. The length of the room allows us to position the calibration machine 7.5 meters away from the pattern. The long space and large pattern will allow us to calibrate Eyesis4π positioned far enough from the pattern to be within the depth of field of its lenses focused at infinity, while still keeping the wide angular size preferred for accuracy of measurements.

We had already hit the precision limits using the previous, smaller 2.7m x 3.0m pattern. While the software was designed to accommodate a pattern in which each node has an individually corrected position (relative to the flat uniform grid), the process assumed that the 3d coordinates of the nodes do not change between measurements.

(more…)

October 25, 2012

by Andrey Filippov

“Temporary diversion” that lasted for three years

In recent years we have been working on the multi-sensor cameras and the optical parts of the cameras. It all started as a temporary diversion from the development of the model 373 cameras that we planned to use instead of our current model 353 cameras based on the discontinued Axis CPU. The problem with the 373 design was that while the prototype was assembled and successfully tested (together with two new add-on boards), I did not like the bandwidth between the FPGA and the CPU – even though I used as many connection channels between them as possible. So while the Texas Instruments DaVinci processor was a significant upgrade to the camera CPU power, the camera design did not seem to me able to stay current for the next 3-5 years or to accommodate emerging (not yet available) sensors with increased resolution and frame rate. This is why we decided to put that design on hold, ready to start production if our stock of Axis CPUs fell dangerously low. Meanwhile we would wait for better CPU/FPGA integration options to appear and focus on the development of the other parts of the system that are really important.

Now that wait for the processor is nearly over, and it seems to be just in time – we still have enough stock to provide NC353 cameras until the replacement is ready. I’ll get to this later in the post, and first describe where we got during these 3 years.

(more…)

September 24, 2012

by Andrey Filippov

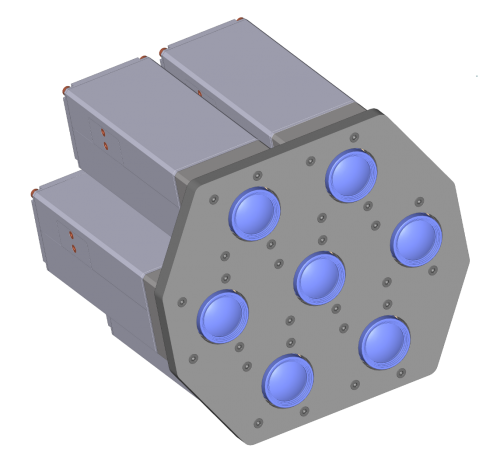

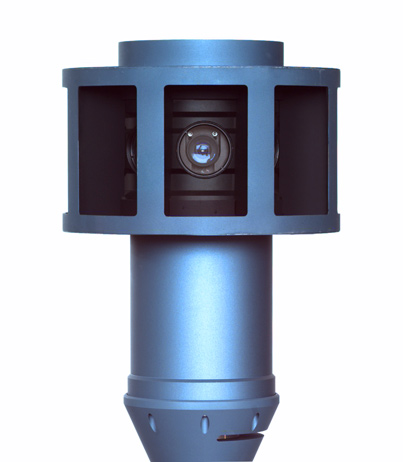

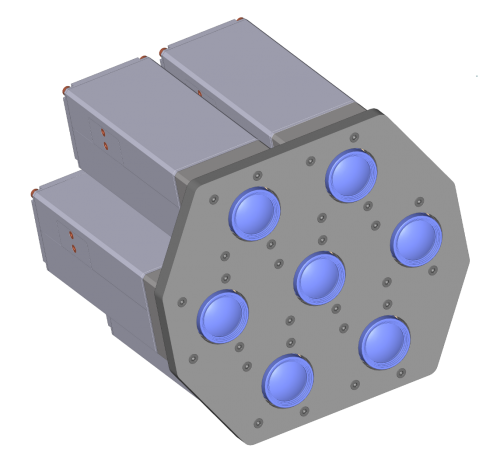

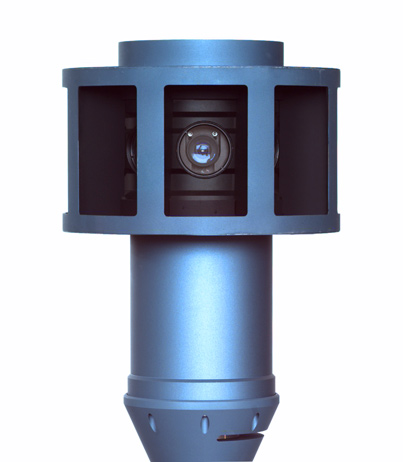

This is a long overdue post describing our work on the Eyesis4π camera, an attempt to catch up with the developments of the last half year. The design of the camera started a year before that, and I described the planned changes from the previous model in the Eyesis4πi post. Oleg wrote about the assembly progress, and since that post we have not published any updates.

(more…)

October 31, 2011

by Andrey Filippov

Motivation

While working on the second generation of the Eyesis panoramic cameras, we decided to try to go from capturing series of individual panoramic images to 3d reconstruction. There are multiple successful implementations of such a process; we just plan to achieve higher precision in capturing the 3d world by using Elphel’s ability to design and build hardware specific to this purpose. While most projects are designed to work with standard off-the-shelf cameras, we are building the cameras together with the devices and methods for calibrating them. In order to precisely determine the 3-d locations of the features registered with the cameras, we plan first to go as far as possible in precisely mapping each pixel of each sub-camera image (of the composite camera) to a ray in space. That would require at least two distinct steps:

(more…)

June 15, 2011

by Andrey Filippov

WebGL Panorama Editor (view mode)

This April we attached an Eyesis camera to a backpack and took it to Southern Utah. Unfortunately I had not finished the IMU hardware by then, so we could rely only on GPS for tagging the imagery. GPS alone can work when the camera is on a car, but with a camera moving at pedestrian speed (images were taken 1-1.5 meters apart) it was insufficient even in open areas with a clear view of the sky. Additionally, the camera orientation was changing much more than when it is attached to a car that moves (normally) in the direction the wheels can roll. Before moving forward with the IMU data processing we decided to try to orient and place some of the imagery manually – just by looking through the panoramas and adjusting the camera heading/tilt/roll to make them look level and oriented so that the next camera location matches the map. Tweaking the KML file with a text editor seemed impractical for adjusting hundreds of panoramic images, so we decided to add more functionality to our simple WebGL panorama viewer, making it suitable both for walking through the panorama sets and for editing the orientations and locations of the camera spots. (more…)

May 19, 2011

by Andrey Filippov

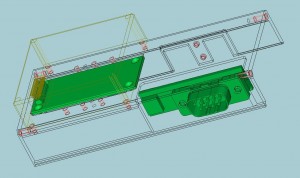

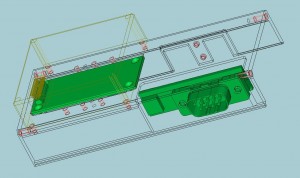

CAD rendering of the Analog Devices ADIS-16375 Inertial Sensor, 103695 interface board and 103696 serial GPS adapter board attached to the top cover of NC353L camera

For almost 3 years we have been able to geo-tag images and video using an external GPS and an optional accelerometer/compass module that can be mounted inside the camera. Information from both sensors is included in the Exif headers of the images and video frames. The raw magnetometer and accelerometer data stored at the image frame rate has limited value: it needs to be sampled and processed at a high rate to be useful for orientation tracking, and for tracking position it has to be combined with the GPS measurements.

We developed the software to receive positional data from either a Garmin GPS18x (which can be attached directly to the USB port of the camera) or a standard NMEA 0183 compatible device using a USB-to-serial adapter. In the latter case the device may need a separate power supply or a special (or modified) USB adapter that can provide power to the GPS unit from the USB bus. (more…)
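To illustrate what receiving positional data from an NMEA 0183 device involves, here is a minimal Python sketch that validates the checksum of a GGA sentence and converts its latitude and longitude to decimal degrees. It is not the camera software: the function names (`with_checksum`, `nmea_checksum_ok`, `parse_gga`) and the sample sentence are hypothetical, chosen only for this illustration, and only the GGA sentence type is handled.

```python
def _xor_checksum(body: str) -> int:
    """XOR of all characters between '$' and '*' (the NMEA 0183 checksum)."""
    value = 0
    for ch in body:
        value ^= ord(ch)
    return value


def with_checksum(body: str) -> str:
    """Wrap a sentence body as '$<body>*HH' (hypothetical helper for testing)."""
    return "${}*{:02X}".format(body, _xor_checksum(body))


def nmea_checksum_ok(sentence: str) -> bool:
    """Return True if the sentence carries a checksum that matches its body."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return False
    body, _, given = sentence[1:].partition("*")
    try:
        return _xor_checksum(body) == int(given[:2], 16)
    except ValueError:
        return False


def _to_degrees(value: str, hemisphere: str) -> float:
    """Convert NMEA (d)ddmm.mmmm plus hemisphere letter to signed degrees."""
    dot = value.index(".")
    degrees = int(value[:dot - 2])    # everything before the two minute digits
    minutes = float(value[dot - 2:])  # mm.mmmm
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(sentence: str) -> dict:
    """Extract position, satellite count and altitude from a GGA sentence."""
    if not nmea_checksum_ok(sentence):
        raise ValueError("bad or missing NMEA checksum")
    fields = sentence.strip()[1:].partition("*")[0].split(",")
    if not fields[0].endswith("GGA"):
        raise ValueError("not a GGA sentence")
    return {
        "lat": _to_degrees(fields[2], fields[3]),
        "lon": _to_degrees(fields[4], fields[5]),
        "satellites": int(fields[7]),
        "altitude_m": float(fields[9]),
    }


if __name__ == "__main__":
    # Made-up sample fix; the checksum is computed rather than hard-coded.
    sample = with_checksum(
        "GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,")
    print(parse_gga(sample))
```

In practice the sentences would arrive line by line from the serial port, and sentences that fail the checksum would simply be dropped rather than raising an error.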

March 14, 2011

by Andrey Filippov

Last week our phone system was broken into and we got a phone bill of some five hundred dollars for calls to Gambia. That expense was not terrible, but that amount is usually enough for many months of phone service for our small company – international phone rates in the VoIP era are (for the destinations we use) really low. The scary thing was that the attack lasted for a very short time – just minutes, not hours, so our damage could have been significantly higher. (more…)

February 28, 2011

by Andrey Filippov

Current state of the Eyesis project,

what worked and what did not. Or did not work as well as we would like it to

We spent most of the last year developing Eyesis panoramic cameras: designing and then assembling the hardware, and working on the software for image acquisition and processing. It was and continues to be a very interesting project. We had to confront multiple technical challenges and come up with solutions we had never tried before at Elphel – many of these efforts are documented in this blog.

We had built and shipped to the customers several Eyesis cameras, leaving one for ourselves, so we can use it for development of the new code and testing performance of the camera as a whole and the individual components. Most things worked as we wanted them to, but after building and operating the first revision of Eyesis we understood that some parts should be made differently.

(more…)

January 3, 2011

by Andrey Filippov

View the results

We had a nice New Year vacation (but so short, unfortunately) at Maple Grove Hot Springs, and between soaking in the nice hot pools I tried an emerging technology I had never dealt with before – WebGL, a standard used through the HTML-5 canvas element that gives you the power of the graphics card’s 3-d capability in the browser, programmed (mostly) in familiar JavaScript. I first searched for existing panoramas to see what they look like and how responsive they are to the mouse controls, but could not immediately find anything working (at least on Firefox 4.0b8 that I just installed – you’ll need to enable “webgl.enabled_for_all_sites” in “about:config” if you would like to try it too). Then I found a nice tutorial with examples that worked without a glitch and carefully read the first few lessons.

(more…)

December 21, 2010

by Andrey Filippov

UPDATE: The latest version of the page for comparing the results.

This is a quick update to the post “Zoom in. Now… enhance. – a practical implementation of the aberration measurement and correction in a digital camera” published last month. It had many illustrations of the image post-processing steps, but lacked the most important part: real-life examples of processed images. At that time we just did not have such images; we also had to find a way to acquire calibration images at a distance that can be considered “infinity” for the lenses – the first images used a shorter distance of just 2.25m between the camera and the target, as the target size was limited by the size of our office wall. Since then we have improved the software that combines the partial calibration images, converted the software to multi-threaded operation to increase performance (using all 8 threads of the 4-core Intel i7 CPU resulted in approximately 5.5 times faster processing), and calibrated two actual Elphel Eyesis cameras (only the 8 lenses around; the top fisheye is not done yet). It was possible to apply the recent calibration data (here is a set of calibration files for one of the 8 channels) to images we had acquired before the software was finished. (more…)
