by Olga Filippova

It has been a while since we wrote on the Elphel Development Blog. However, that doesn’t mean we haven’t been developing new technologies and applications. In fact, there is so much that is new that I would like to write a post about each new project once it is possible, i.e., after Ukraine wins the war.

From the beginning of the war, Elphel, Inc. has supported Ukraine against the Russian invasion. We temporarily (until the Victory) painted the Elphel icon in the blue and yellow colors of the Ukrainian flag to express our support. In May 2023, Elphel went to Ukraine as part of a Utah Humanitarian and Defense delegation led by the President of the Utah Senate, Mr. Stuart Adams. We represented defense and aerospace companies from our state. During our meeting, the Deputy Minister of the Economy of Ukraine, Mr. Fomenko, emphasized the country’s priority of demining agricultural land. Ukraine currently lacks the technology necessary to demine fields in a reasonable amount of time.

This meeting, together with an idea from Ukrainian engineers at Midgard Dynamics to use the high sensitivity of the Elphel LWIR-16 camera, led to the development of a game-changing mine-detection system for humanitarian and agricultural needs.

Elphel tested the prototype and presented the results to scientists from the V.M. Glushkov Institute of Cybernetics of NAS of Ukraine. We are currently working with European partners to integrate Elphel technology with humanitarian demining efforts in Ukraine and other countries around the world.

Search and Rescue is another application of this technology.

Here is a link to my recent statement on LinkedIn.

by Andrey Filippov and Fyodor Filippov

Download links for: video and captions.

This research, “Long-Range Thermal 3D Perception in Low Contrast Environments”, is funded by NASA contract 80NSSC21C0175.

by Andrey Filippov

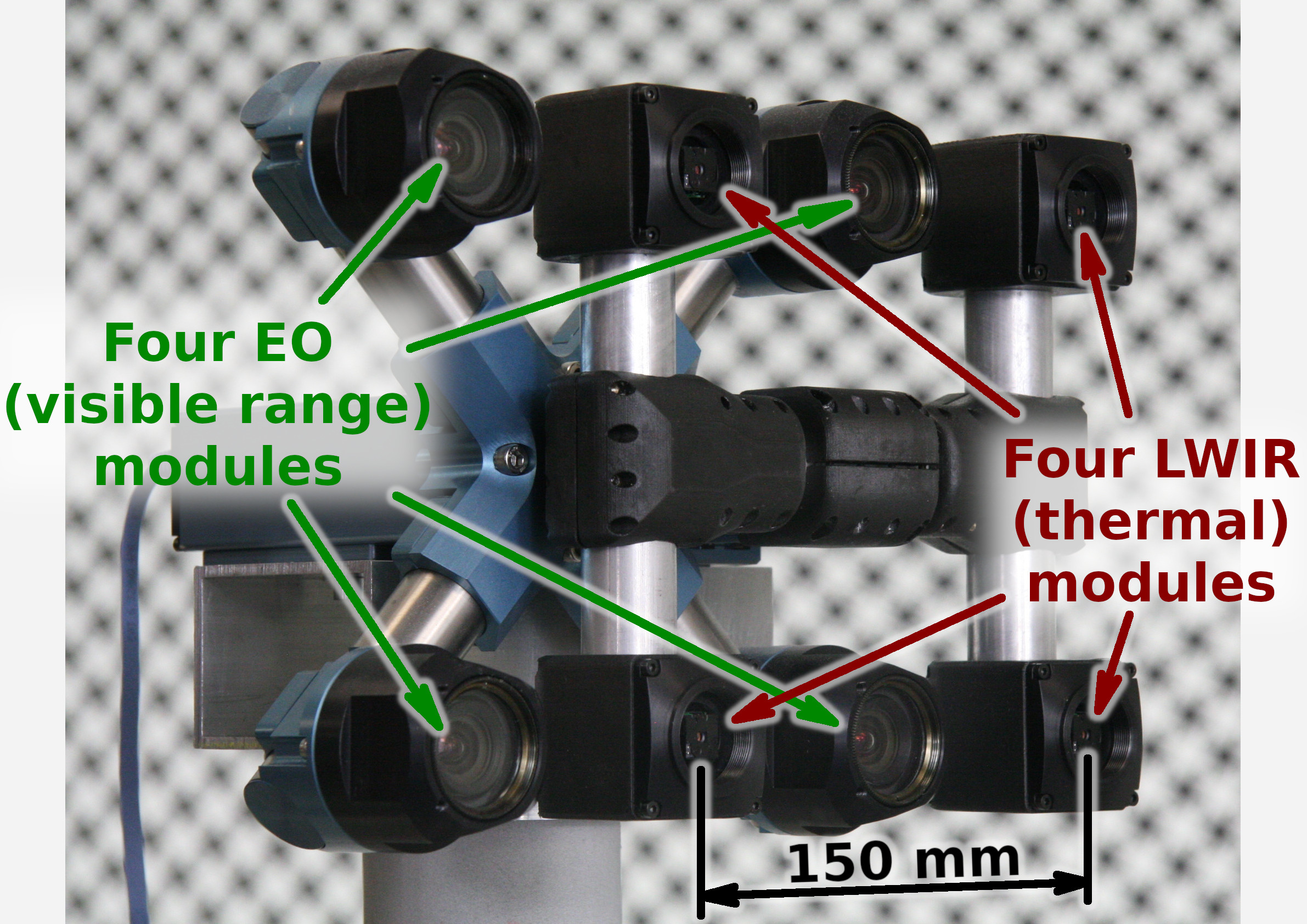

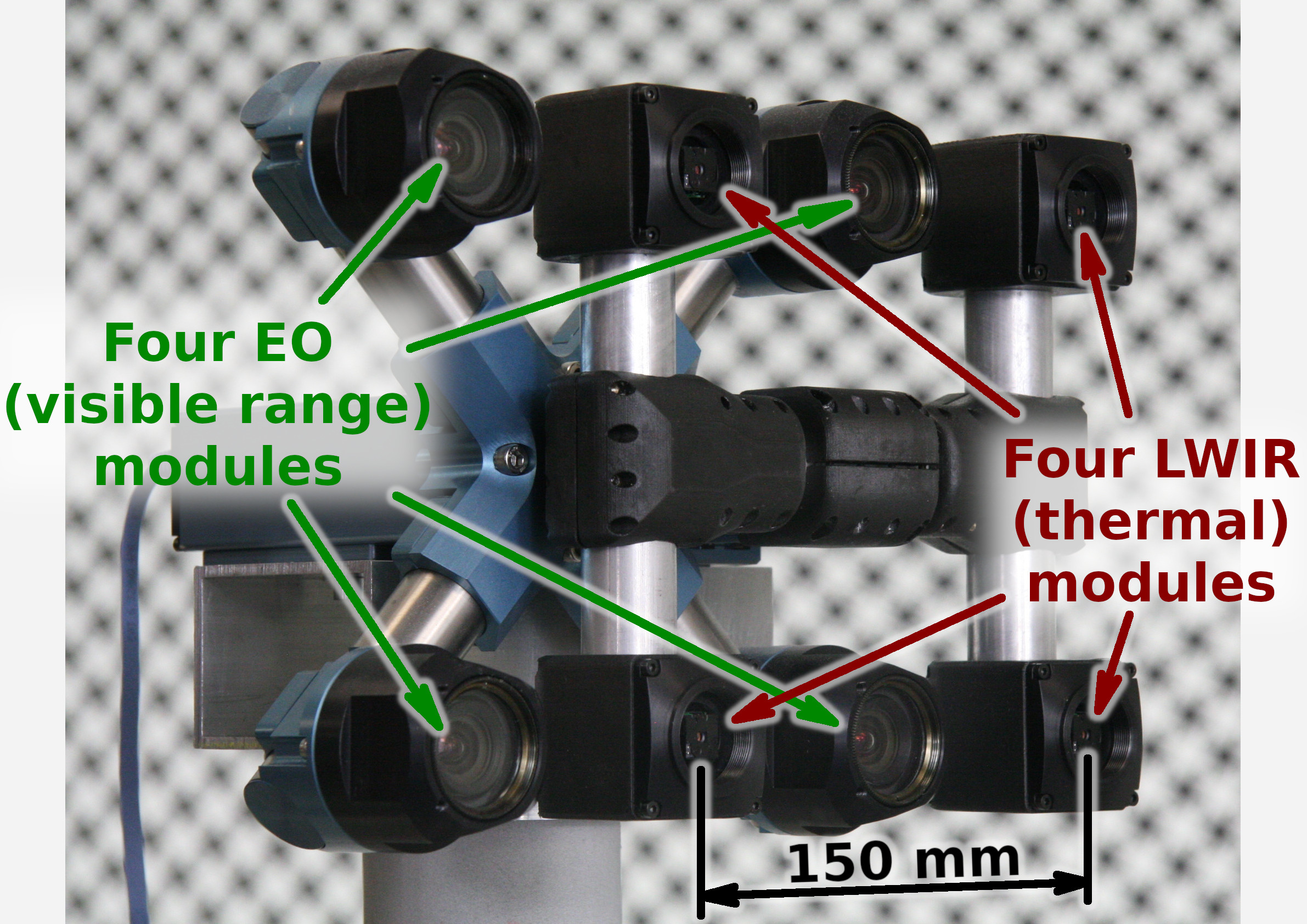

Figure 1. Talon (“instructor/student”) test camera.

Update: arXiv:1911.06975 paper about this project.

Summary

This post concludes the series of 3 publications dedicated to the progress of Elphel’s five-month project funded by an SBIR contract.

After developing and building the prototype camera shown in Figure 1, constructing the pattern for photogrammetric calibration of the thermal cameras (post1), and updating the calibration software and calibrating the camera (post2), we recorded camera image sets and processed them offline to evaluate the resulting depth maps.

The four 5 MPix visible range camera modules have over 14 times higher resolution than the Long Wavelength Infrared (LWIR) modules, so we used the high resolution depth map as ground truth for the LWIR modules.

Without machine learning (ML) we obtained an average disparity error of 0.15 pix; a trained Deep Neural Network (DNN) reduced the error to 0.077 pix. In both cases the errors were calculated after removing 10% outliers, primarily caused by ambiguity on the borders between the foreground and background objects. Table 1 lists this data and provides links to the individual scene results.

For the 160×120 LWIR sensor resolution, 56° horizontal field of view (HFOV) and 150 mm baseline, a disparity of one pixel corresponds to 21.4 meters. With the 0.077 pix disparity error, this means that the distance error of this prototype camera is 10% at 27.8 meters, and proportionally lower at closer ranges. Higher resolution sensors will scale these results: 640×480 sensors and a longer baseline of 200 mm (instead of the current 150 mm) will yield 10% accuracy at 150 meters with the same 56° HFOV.
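The range figures in this paragraph follow from simple proportionality. Disparity is inversely proportional to distance, so the relative distance error grows linearly with distance. Below is a minimal sketch of that arithmetic. It takes the 21.4 m per pixel and 0.077 pix values from the text as given; the function and variable names are illustrative, and the scaling step assumes that the disparity error in pixels stays the same for the higher resolution sensor.

```python
def range_for_relative_error(one_pix_range_m, disparity_err_pix, rel_err):
    """Distance at which the relative range error reaches rel_err.

    Disparity at distance Z is d = one_pix_range_m / Z, and the relative
    range error is disparity_err_pix / d, so
    Z = rel_err * one_pix_range_m / disparity_err_pix.
    """
    return rel_err * one_pix_range_m / disparity_err_pix


def scaled_one_pix_range(one_pix_range_m, resolution_scale, baseline_scale):
    """Distance of one-pixel disparity after changing sensor and baseline.

    At a fixed field of view the focal length in pixels scales with the
    horizontal pixel count, and disparity scales with the baseline.
    """
    return one_pix_range_m * resolution_scale * baseline_scale


# Values quoted in the text (not re-derived here).
ONE_PIX_RANGE_M = 21.4        # 160x120 sensor, 56 deg HFOV, 150 mm baseline
DISPARITY_ERR_PIX = 0.077     # DNN result after removing 10% outliers

prototype_range = range_for_relative_error(
    ONE_PIX_RANGE_M, DISPARITY_ERR_PIX, 0.10)

# Hypothetical upgrade from the text: 640x480 sensor, 200 mm baseline.
upgraded_one_pix = scaled_one_pix_range(
    ONE_PIX_RANGE_M, resolution_scale=640 / 160, baseline_scale=200 / 150)
upgraded_range = range_for_relative_error(
    upgraded_one_pix, DISPARITY_ERR_PIX, 0.10)

print(f"prototype: 10% error at {prototype_range:.1f} m")
print(f"upgraded:  10% error at {upgraded_range:.1f} m")
```

Under these assumptions the sketch gives about 27.8 m for the prototype and roughly 148 m for the 640×480 configuration, in line with the 27.8 m and 150 m figures quoted above.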

(more…)

by Andrey Filippov

Figure 1. Calibration of the quad visible range + quad LWIR camera.

We’ve got the first results of the photogrammetric calibration of the composite (visible+LWIR) Talon camera described in the previous post and tested the new pattern. In the first experiments with the new software code we obtained an average reprojection error of 0.067 pix for LWIR, while the visible quad camera subsystem was calibrated down to 0.036 pix. In the process of calibration we acquired 3 sequences of 8-frame (4 visible + 4 LWIR) image sets, 60 sets from each of the 3 camera positions: 7.4 m from the target on the target center line, and 2 side views 4.5 m from the target and 2.2 m right and left of the center line. From each position the camera axis was scanned in the range of ±40° horizontally and ±25° vertically.

(more…)

by Andrey Filippov

Figure 1. Oleg carrying combined LWIR and visible range camera.

While working on extremely long range 3D visible range cameras, we realized that the passive nature of such 3D reconstruction, which does not involve flashing lasers or LEDs as LIDARs and Time-of-Flight (ToF) cameras do, can be especially useful for thermal (a.k.a. LWIR – long wave infrared) vision applications. An SBIR contract granted at the AF Pitch Day provided initial funding for our research in this area and made it possible.

We are now in the middle of the project and there is a lot of development ahead, but we have already tested all the hardware and modified our earlier code to capture and detect the calibration pattern in the acquired images.

(more…)

by Andrey Filippov

After we coupled the Tile Processor (TP), which performs quad camera image conditioning and produces 2D phase correlation in space-invariant form, with the neural network[1], the TP remained the bottleneck of the tandem. While the network inference uses the GPU and produces disparity output in 0.5 sec (more than 80% of this time is used for the data transfer), the TP required tens of seconds to run on the CPU as a multithreaded Java application. When converted to run on the GPU, a similar operation takes just 0.087 seconds for four 5 MPix images, and it is likely possible to optimize the code further; this is our first experience with Nvidia® CUDA™.

(more…)

by Andrey Filippov

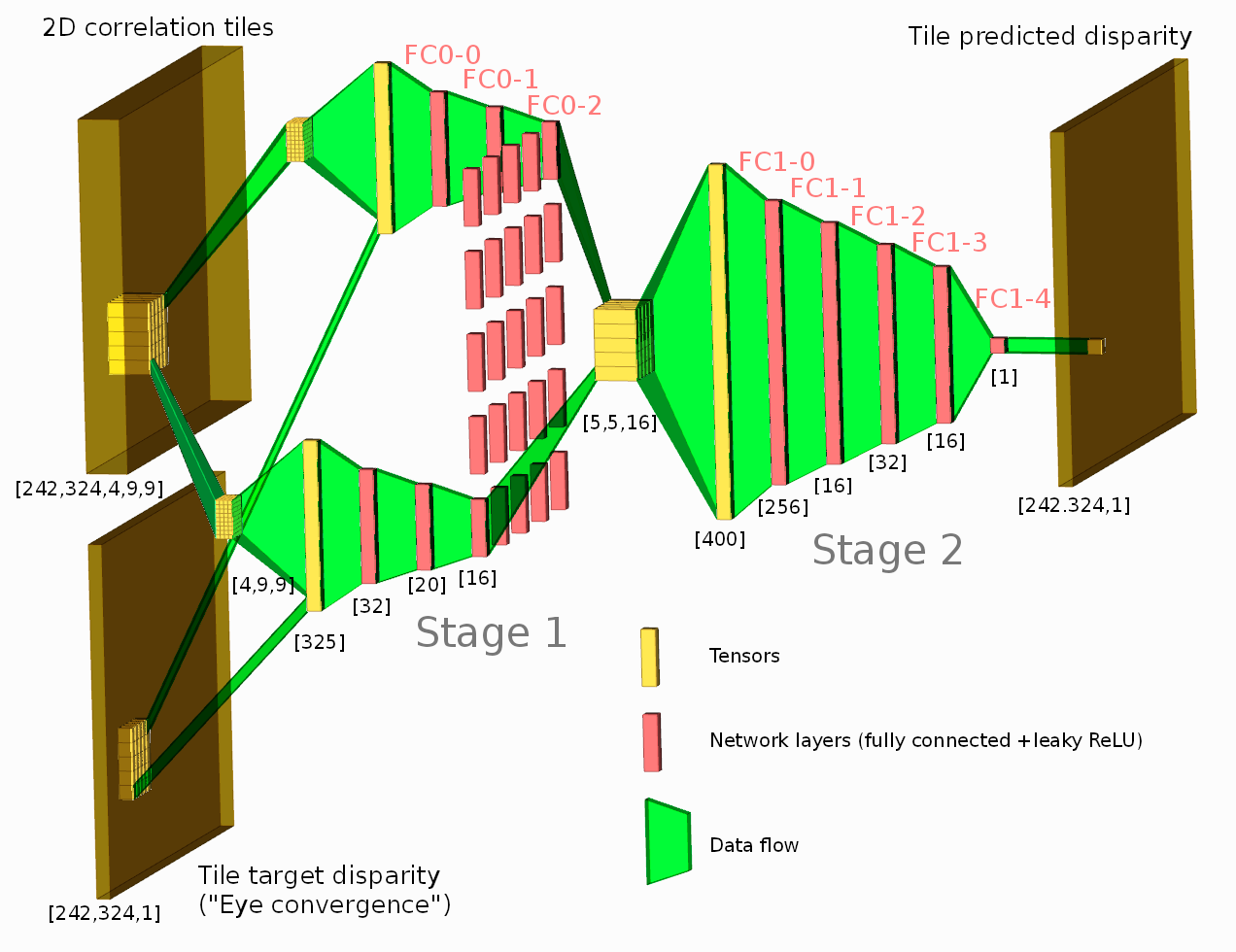

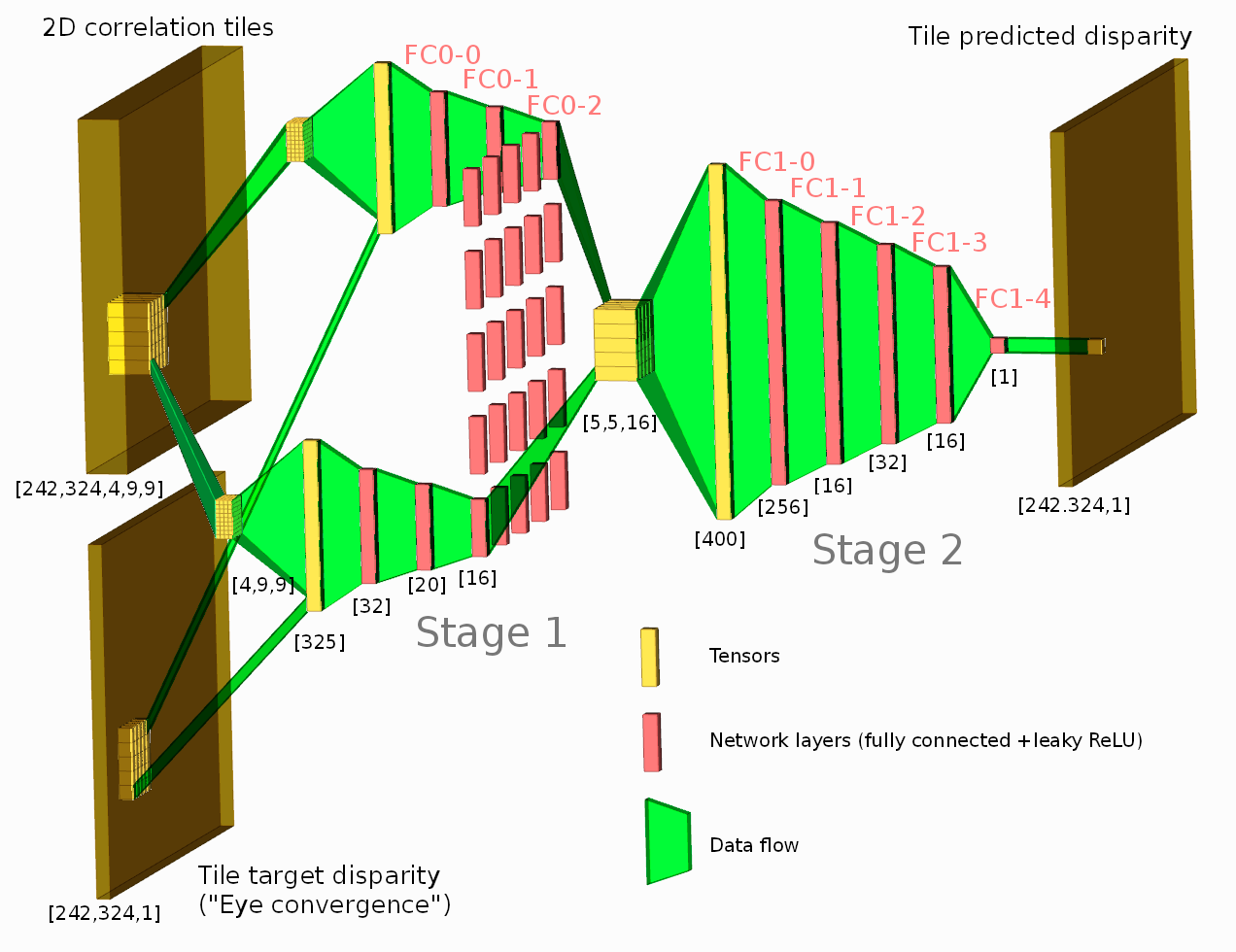

Figure 1. Network diagram. One of the tested configurations is shown.

A neural network connected to the output of the Tile Processor (TP) halved the disparity error compared with the previously used heuristic algorithms. The TP corrects optical aberrations of the high resolution stereo images, rectifies the images, and provides 2D correlation outputs that are space-invariant and so can be efficiently processed with the neural network.

What is unique in this project compared to other ML applications for image-based 3D reconstruction is that we deal with extremely long ranges (and still a wide field of view). The disparity error reduction means a standard deviation of 0.075 pix, down from 0.15 pix, for 5 MPix images.

See also: arXiv:1811.08032

(more…)
