by Andrey Filippov

“Temporary diversion” that lasted for three years

For the last few years we have been working on multi-sensor cameras and on the optical parts of the cameras. It all started as a temporary diversion from the development of the model 373 cameras, which we planned to use instead of our current model 353 cameras based on the discontinued Axis CPU. The problem with the 373 design was that, although the prototype was assembled and successfully tested (together with two new add-on boards), I did not like the bandwidth between the FPGA and the CPU, even though I used as many connection channels between them as possible. So while the Texas Instruments DaVinci processor was a significant upgrade in camera CPU power, the design did not seem to me able to stay current for the next 3-5 years or to accommodate emerging (not yet available) sensors with increased resolution and frame rate. This is why we decided to put that design on hold, ready to start production if our stock of Axis CPUs fell dangerously low. Meanwhile, we would wait for better CPU/FPGA integration options to appear and focus on developing the other parts of the system that are really important.

Now that wait for the processor is nearly over, and it seems to be just in time: we still have enough stock to provide NC353 cameras until the replacement is ready. I’ll get to this later in the post, but first I’ll tell where we got during these three years.

(more…)

by Andrey Filippov

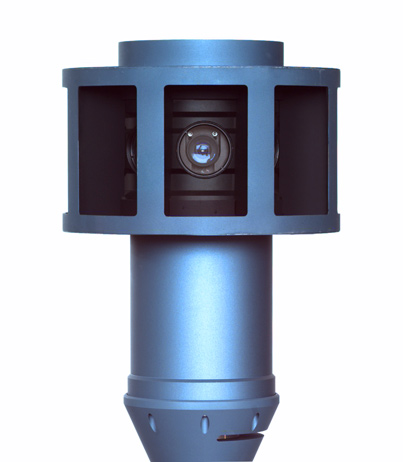

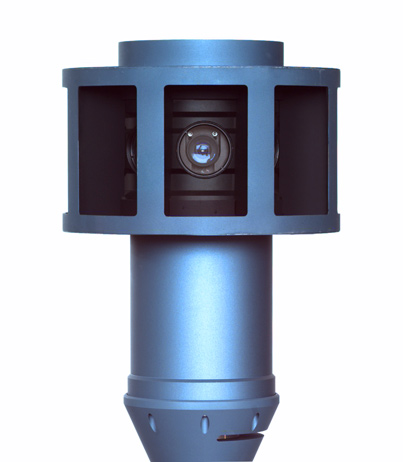

This is a long overdue post describing our work on the Eyesis4π camera, an attempt to catch up with the developments of the last half year. The design of the camera started a year before that, and I described the planned changes from the previous model in the Eyesis4πi post. Oleg wrote about the assembly progress, and we have not posted any updates since then.

(more…)

by Andrey Filippov

Motivation

While working on the second generation of the Eyesis panoramic cameras, we decided to try to go from capturing series of individual panoramic images to 3D reconstruction. There are multiple successful implementations of such a process; we just plan to achieve higher precision in capturing 3D worlds by using Elphel's ability to design and build hardware specific to this purpose. While most projects are designed to work with standard off-the-shelf cameras, we are building the cameras together with the devices and methods for calibrating them. To precisely determine the 3D locations of the features registered by the cameras, we plan first to go as far as possible in precisely mapping each pixel of each sub-camera image (of the composite camera) to a ray in space. That would require at least two distinct steps:

(more…)

by Andrey Filippov

UPDATE: The latest version of the page for comparing the results.

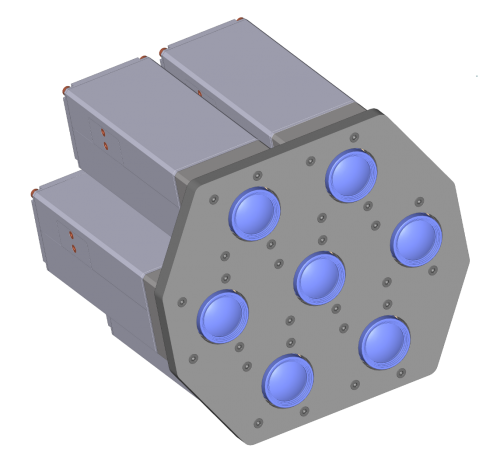

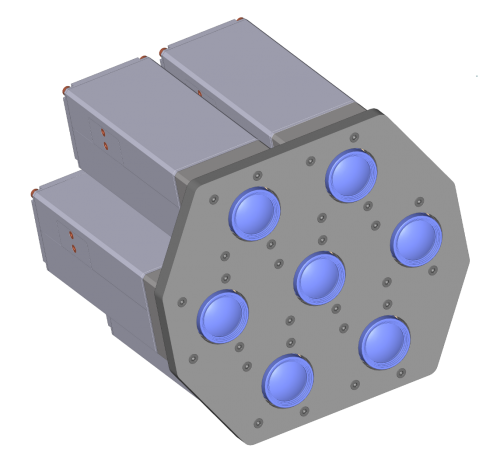

This is a quick update to the post “Zoom in. Now… enhance. – a practical implementation of the aberration measurement and correction in a digital camera”, published last month. It had many illustrations of the image post-processing steps but lacked the most important part: real-life examples of the processed images. At that time we just did not have such images. We also had to find a way to acquire calibration images at a distance that can be considered “infinity” for the lenses: the first images used a shorter distance of just 2.25 m between the camera and the target, and the target size was limited by the size of our office wall. Since then we have improved the software that combines the partial calibration images, converted the software to multi-threaded operation to increase performance (using all 8 threads of the 4-core Intel i7 CPU resulted in approximately 5.5 times faster processing), and calibrated the two actual Elphel Eyesis cameras (only the 8 lenses around; the top fisheye is not done yet). It was possible to apply the recent calibration data (here is a set of calibration files for one of the 8 channels) to the images we acquired before the software was finished. (more…)

by Andrey Filippov

Deconvolved vs. de-mosaiced original

(more…)

by Andrey Filippov

Designing for low parallax

When we started working on the Eyesis project, our first goal was to make the panoramic head as compact as possible to reduce parallax between the sensors. That not only reduces stitching artifacts but also decreases the minimal distance to an object at which there are no dead zones between the individual cameras.
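The link between head size and stitching artifacts can be illustrated with a rough estimate. This is only a sketch under simplifying assumptions: the two neighboring sub-cameras are treated as point viewpoints separated by a baseline perpendicular to the viewing direction, and the function name, parameter names and all numbers are hypothetical, not measurements of any Elphel camera.

```python
import math


def parallax_shift_pixels(baseline_m, near_m, far_m, pixel_angle_rad):
    """Estimate the stitching mismatch, in pixels, between two viewpoints.

    baseline_m      -- distance between the two viewpoints (hypothetical)
    near_m          -- distance to a foreground object
    far_m           -- distance to the background (may be math.inf)
    pixel_angle_rad -- angle covered by one pixel (hypothetical)
    """
    # Angular parallax of the near object minus that of the background.
    angle = math.atan2(baseline_m, near_m) - math.atan2(baseline_m, far_m)
    return angle / pixel_angle_rad


if __name__ == "__main__":
    # Illustrative values only: compare a wider and a more compact head.
    for baseline in (0.10, 0.05):
        shift = parallax_shift_pixels(baseline, 5.0, math.inf, 0.0005)
        print(f"baseline {baseline:.2f} m: about {shift:.1f} px mismatch")
```

In this simplified model the mismatch grows almost linearly with the baseline, so halving the distance between viewpoints roughly halves the visible seam error for the same scene.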

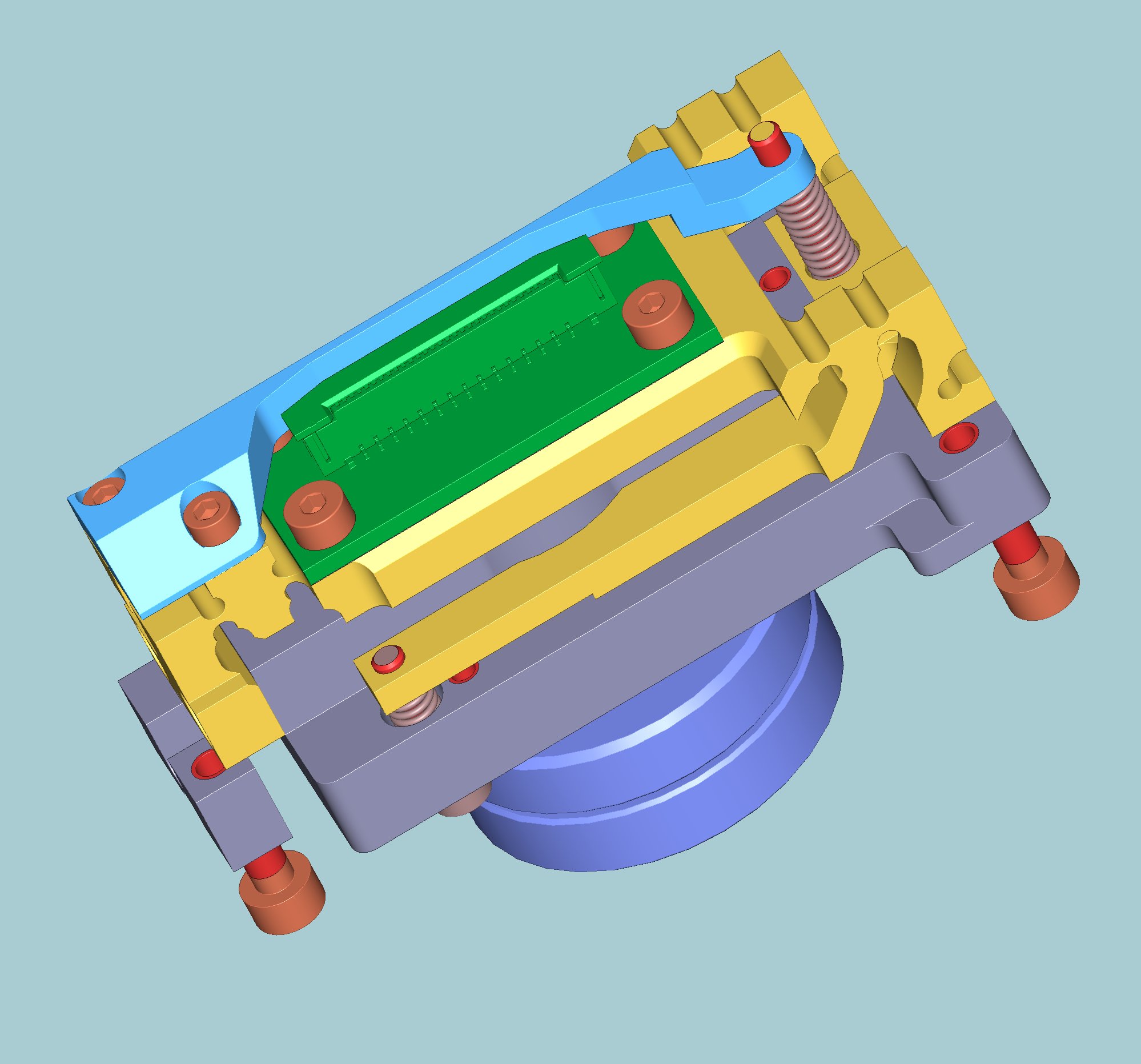

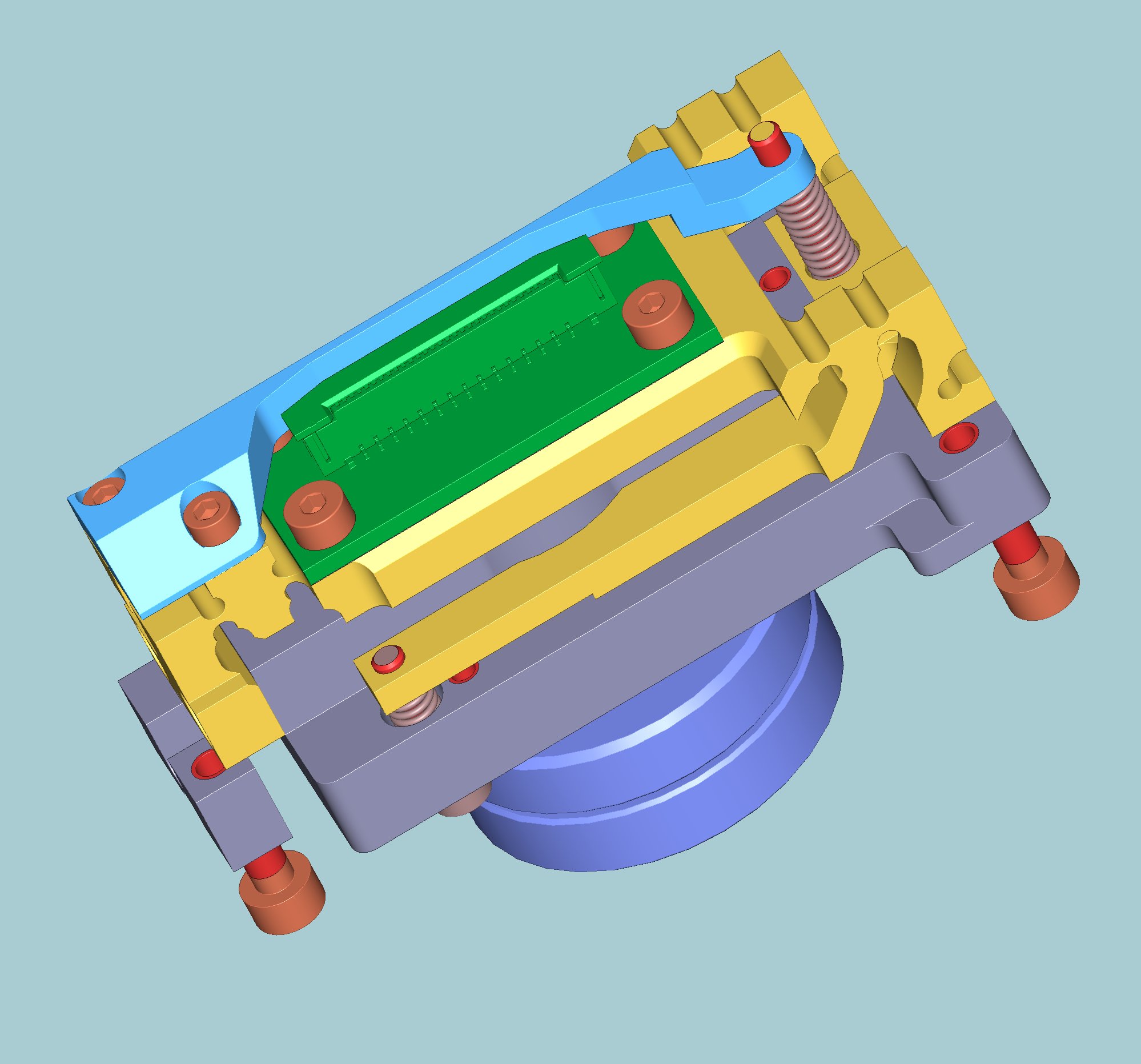

The first practical step was to reduce the PCB area around the sensors, especially in one direction, so that multiple camera boards could be placed closer to each other. For that purpose we preserved the basic design of the proven 10338 sensor board and just changed the layout to make it more compact. The 10338D board is just 15 mm wide, less than half the width of the older design.

The next step was to run a mechanical CAD program and try to place the boards and lenses. Most Elphel cameras were designed for C/CS-mount lenses, but when I tried to place them I immediately found that with 10 or 8 cameras around, even the C-mount thread (CS is the same size but even closer) would be the limiting factor, not the sensor PCB that we had already made smaller.

(more…)
