Elphel at Utah Open Source Conference 2009

A short break from the 373 camera development – Elphel presents our products and philosophy at UTOSC-2009

There are several hundred of these to install…

The modes are:

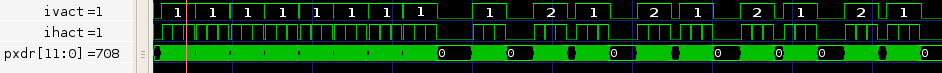

At first I rewrote the switching logic starting from what I already had. This resulted in a parsedit.php “Error 500” and the streamer stopping when the sensors were in free-run mode (in triggered mode everything was OK), while everything was fine in the testbench. I couldn’t find what was wrong for some time. Part of the logic was based on sync signal levels, and under certain conditions the switching didn’t work. The error was corrected by using only the sync signal edges.
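The difference between level-based and edge-based switching can be illustrated with a small software model. This is only a sketch, not the 10359 logic itself (which is hardware): the function names, the choice of the falling edge of the vertical sync, and the two-channel round-robin are all assumptions made for illustration.

```python
from typing import List


def falling_edges(signal: List[int]) -> List[int]:
    """Return the sample indices where the signal goes from 1 to 0."""
    return [i for i in range(1, len(signal))
            if signal[i - 1] == 1 and signal[i] == 0]


def channel_by_edges(ivact: List[int], channels: int = 2) -> List[int]:
    """Return the selected channel at every sample.

    Assumption: the channel advances exactly once per falling edge of the
    vertical sync, no matter how long the signal stays high or low.
    """
    selected = 0
    out = []
    for i, level in enumerate(ivact):
        if i > 0 and ivact[i - 1] == 1 and level == 0:
            selected = (selected + 1) % channels
        out.append(selected)
    return out


# Two short "frames": ivact is high while a frame is active.
ivact = [0, 1, 1, 0, 0, 1, 1, 0]
print(falling_edges(ivact))
print(channel_by_edges(ivact))
```

Because the decision depends only on a transition, it does not matter what level the signal happens to have at the moment the logic looks at it. A level-based check has no such guarantee.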

Fig.1 A sample frame in the testbench is 2592×3 (to reduce verification time), “ivact” – vertical sync signal, “ihact” – horizontal sync signal, “pxdr” – pixel data. White numbers in the ‘ivact’ line indicate the corresponding channel.

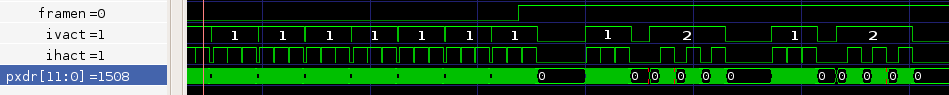

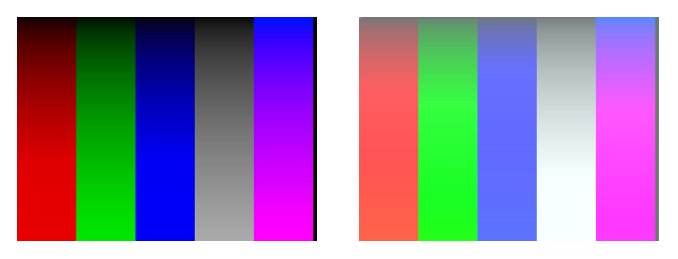

With this mode the situation was almost the same as with the previous one: the same changes were needed, but the frame from the second channel (the buffered one) looks brighter. If the first channel is disabled, the buffered frame is correct:

Fig.2 “framen” – frame enable register, allows work when ‘high’.

Fig.3 Good direct frame (left) and ‘whitened’ buffered frame (right). Probably some signal latencies are incorrect.

Is separate due to the problems with other two modes. It’s being added currently.

I’m also making the project less messy, optimizing register addresses and rewriting scripts to make working with the 10359 easier.

10373A with BGA chips installed - fragment

And while I was waiting for the components and the board, I made some minor design upgrades to the existing camera: I changed the layout of the sensor board to better fit multi-sensor applications (like panoramic video). The new board is just 15mm by 28mm (the current 10338 is 32mm x 32mm), so with M12 (S-mount) lenses it is possible to shrink the panoramic head until the distance between the outer elements of the opposite lenses is under 80mm, which is much smaller than in similar high-res systems. That distance is important for reducing parallax, which is most noticeable when objects are close to the camera.
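To get a feeling for why the distance matters, here is a rough estimate of the angular parallax between two viewpoints. It is a simplified sketch: the function name is made up for illustration, the 80mm figure is used as the baseline even though the real baseline (the distance between the lens entrance pupils) is smaller, and the object is assumed to lie on the line perpendicular to the baseline at one of the viewpoints.

```python
import math


def parallax_deg(baseline_m: float, distance_m: float) -> float:
    """Angle (degrees) between the directions to an object as seen from
    two viewpoints separated by baseline_m, for an object distance_m away.

    Simplified geometry (an assumption): the object sits straight in front
    of one viewpoint, so the angle is atan(baseline / distance).
    """
    return math.degrees(math.atan2(baseline_m, distance_m))


# Compare a close and a distant object for an 80mm upper-bound baseline.
for distance in (0.5, 1.0, 5.0, 20.0):
    print(f"{distance:5.1f} m: {parallax_deg(0.08, distance):.2f} deg")
```

The angle falls off roughly as baseline divided by distance, so halving the size of the head halves the stitching error for nearby objects, while distant scenery is barely affected either way.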

In September 2009 there were multiple publications about the Open Source Frankencamera developed at Stanford (Open-source camera could revolutionize digital photography). It is really nice to have more participants in the rather new area of open hardware devices. We at Elphel are also excited about the possibilities of hardware that users can adapt to their needs without “jail-breaking” or otherwise modifying the firmware against the manufacturers’ intentions, often having to start over again after each firmware update (or hardware release) by the manufacturer, and risking “bricking” their gadgets if something goes wrong in the process.

We believe that having the software and hardware designs “free” and “open” is the only sustainable way to deal with the ever-increasing complexity of the designs. It is modeled after the human activity that has proved capable of dealing with complex matters: modern science, which replaced the proprietary and secret knowledge of alchemy and the like. This is why we applaud achievements in this area, in which we are working hard ourselves too.

It is important to have good cooperation in such development: sharing ideas and working together on common standards and APIs. As of today we are still in the minority. Traditional (proprietary) camera manufacturers are better organized, but the standards they develop are sub-optimal for open hardware.

There is no reference to Elphel (or other similar works) in the article itself; it appears in the Frequently asked questions about the Frankencamera. Among other things, that page compares the current Stanford OMAP-based version of the camera with the Elphel prototype used during development:

Yes there is. In fact, our first Frankencamera prototype was built around an Elphel camera. However, these aren’t really standalone cameras. Once you add a viewfinder and power supply with batteries, you get an awkwardly sized and shaped device. More importantly you get high latency between the sensor and viewfinder. That’s why we switched to a board, based on the TI platform, that includes an LCD touchscreen.

That is correct, we do not have a viewfinder in Elphel cameras, and it is not an omission: we try to focus on the core camera parts, leaving visualization to off-the-shelf gadgets. We believe that others can create “mashups” with appropriate devices. There are many different applications that our users can think of, and they can customize our products themselves. In the model 373 we plan to have a second low-res output from the FPGA to simplify viewfinder functionality with low-power devices that fail to render the full resolution/frame rate camera output.

As for the latency, it is really small when using an appropriate video player. The in-camera latency is less than two frames: about 20 scanlines + 1000 pixels in the FPGA (from the sensor port to the compressed bitstream in the system memory buffer), plus one frame in the buffer before the frame is sent out. With MPlayer the host PC latency is also only about one frame, which makes it possible to use the camera+PC in real-time applications, like a replacement for a rear view mirror in the Elphel mobile office.
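The numbers above can be put together in a quick back-of-the-envelope calculation. This is only a sketch under stated assumptions: a 2592×1944 frame (the height is an assumption, not taken from the text), blanking intervals ignored, and hypothetical function names.

```python
def fpga_latency_frames(width: int, height: int,
                        lines: int = 20, pixels: int = 1000) -> float:
    """FPGA pipeline delay as a fraction of one frame.

    Uses the figures quoted in the text (about 20 scanlines + 1000 pixels)
    and ignores horizontal/vertical blanking (an assumption).
    """
    return (lines * width + pixels) / (width * height)


def latency_budget(width: int, height: int,
                   buffered_frames: float = 1.0,
                   host_frames: float = 1.0) -> tuple:
    """Return (in_camera, end_to_end) latency, both in frame periods."""
    in_camera = fpga_latency_frames(width, height) + buffered_frames
    return in_camera, in_camera + host_frames


# Assumed full-resolution frame size.
in_camera, end_to_end = latency_budget(2592, 1944)
print(f"in-camera: {in_camera:.3f} frames, with host: {end_to_end:.3f} frames")
```

To convert to time, multiply by the frame period at the frame rate actually in use. The FPGA part is a small fraction of a frame, so the buffered frame and the player dominate.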

The article says that “The imaging chip is taken from a Nokia N95 cell phone”, but that seems to be incomplete. Yes, it may be the same imaging chip (and likely is, but I do not know for sure, as the N95 circuit diagram is not posted), but according to this posting the sensor front end board is really open source and you can download the documentation (released under the GNU FDL license) from the Elphel Wiki page.

Update: Andrew, thank you for adding information to Frequently asked questions about the Frankencamera.

BTW, we were able to control Canon EOS lenses directly in our model 323C ("C" for "Canon"), and we may still have some hardware around. The code is at Sourceforge: lensraw.html, lensraw.c

I just finished working on a new graphical user interface in the general camera system preferences that should simplify the process of upgrading the camera firmware, also called “reflashing”. There are quite a few ways to update a camera’s firmware, so it can be challenging to keep a clear overview: http://wiki.elphel.com/index.php?title=Usage_Tutorials.

The basic idea is the same for all approaches:

The new GUI should do this in a tidy manner. It verifies that the share is working correctly and that all required firmware files are valid before letting you press the Reflash button.

Notes:

There’s a nice page on Elphel’s wiki that describes the problem: Adjusting sensor clock phase (the last paragraph is outdated, the script is in the ‘attic’, and I couldn’t find an alternative in the new 8.x firmware).

It was difficult to adjust data phases for the long cables (and sometimes even for short ones) connecting sensor boards to the 10359. To do it more or less automatically, a PHP script ‘test_inner_10359.php’ is used. It in turn uses ‘phases_adjust.php’ to move the phase 5 steps at a time, and compares the MD5 sums of the captured frames with the known one for the defined sensor parameters.
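The idea of the sweep can be sketched as follows. This is not the code of ‘test_inner_10359.php’ or ‘phases_adjust.php’: the function names and the simulated capture are hypothetical, and picking the middle of the longest run of good phases is my assumption about a reasonable way to choose the final value.

```python
import hashlib
from typing import Callable, List, Optional


def frame_md5(frame: bytes) -> str:
    """MD5 hex digest of the raw frame bytes."""
    return hashlib.md5(frame).hexdigest()


def sweep_phase(capture: Callable[[int], bytes], reference_md5: str,
                start: int, stop: int, step: int = 5) -> List[int]:
    """Try phases start..stop in increments of step and return those
    whose captured frame matches the known-good MD5 sum."""
    return [phase for phase in range(start, stop + 1, step)
            if frame_md5(capture(phase)) == reference_md5]


def pick_phase(good: List[int], step: int = 5) -> Optional[int]:
    """Pick the middle of the longest contiguous run of good phases
    (assumption: the centre of the window gives the most margin)."""
    best: List[int] = []
    run: List[int] = []
    for phase in good:
        if run and phase - run[-1] == step:
            run.append(phase)
        else:
            run = [phase]
        if len(run) > len(best):
            best = list(run)
    return best[len(best) // 2] if best else None


# Simulated sensor: the frame is only captured correctly for phases 20..45.
GOOD_FRAME = bytes(range(16))


def fake_capture(phase: int) -> bytes:
    return GOOD_FRAME if 20 <= phase <= 45 else bytes([phase % 256]) * 16


good = sweep_phase(fake_capture, frame_md5(GOOD_FRAME), 0, 90)
print(good, pick_phase(good))
```

On real hardware `fake_capture` would be replaced by something that sets the phase and grabs a frame with fixed sensor parameters (for example a test pattern), so that a correct capture always produces the same MD5 sum.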

So far, the solution is:

TODO:

HOWTO:

PS: I also tried adding a bit mask to the data bus from the sensor, just to find out whether the time it takes the data bus to change exceeds the period, but the picture became unstable every time the phase was close to the correct one.

So far, the code works in the following way:

So, the ‘depth frame’ goes through the 353’s compressor and this makes the data available only for visual analysis. I’m planning:

Shall I keep the whole frame? Shall I write it to the SDRAM? I’ll use 1 BRAM at first. Sections 1 & 2 without ‘frames from the left/right sensor’ will take less than an hour.

I spent a couple of days checking whether the 10373 board layout can be connected as I hoped. There will be a small board with 2 connectors on the back panel (103732): micro USB and eSATA. In the minimal configuration it will be a passive board with connectors only, but there should be some room to add a USB hub and a couple of internal 10-conductor flex cables for internal USB devices, the same as on the 10369 board of the NC353L camera.

SATA and USB signals are split into separate connectors on the 10373 board. That makes it possible to leave USB connected to the back panel adapter while connecting the SATA cable (with additional power) to the internal adapter for the SATA SSD. The image below shows both options, but the 10373 actually has only one SATA port, so only one SATA device can be connected.

While most of the functionality of the 10369 board has moved to the main 10373, there are no ports for inter-camera synchronization. The 10369 has 2 sets of opto-isolated synchronization ports: “external”, with a modular (telephone-like) connector for frame-locking several individual cameras, and “internal”, with flex cable connectors for synchronizing multiple camera boards installed in the same enclosure. The synchronization will have to be handled by an extension board that has a direct connection to the 18 FPGA I/O pins. The back panel will have holes (covered with a plastic overlay when not used) for up to four 2.5mm TRS connectors (like small stereo audio ones). Unfortunately, even on small 2.5mm TRS plugs the plastic bodies are rather large, so 2 of them can fit only when the connectors are placed diagonally. Anyway, that part of the design is not finished yet, we’ll think of something.

Andrey