December 20, 2017

by Oleg Dzhimiev

We have updated the Yocto build system to Poky Rocko, which was released back in October. Here’s a short summary table of the updates:

| | before | after |
|---|---|---|
| Poky | 2.0 (Jethro) | 2.4 (Rocko) |
| gcc | 5.3.0 | 7.2.0 |
| linux kernel | 4.0 | 4.9 |

Other packages got updates as well:

- apache2-2.4.18 => apache2-2.4.29

- php-5.6.16 => php-5.6.31

- udev-182 changed to eudev-3.2.2, etc.

This new version is in the rocko branch for now, but it will be merged into master after a transition period (and the current master will be moved to the jethro branch). Below are a few tips for future updates.


December 13, 2017

by Olga Filippova

MNC393-XCAM partial assembly and parts

The long anticipated parts for the Long range camera have arrived!

The mechanical parts for the MNC393-XCAM – Long Range Multi-view Stereo Camera are machined, tested, and ready to be anodized. This enables us to have the X-camera assembled before the winter holidays. The holiday break will provide a good opportunity to test the camera, capture new photos, and create robust 3D models from calibrated images.

The titanium X-frame of the camera ensures the thermal stability required for continuous accuracy of 3D measurements. The aluminum enclosure and sealed lens filters weatherproof the system, allowing for the proposed outdoor use of the camera.

We intend to assemble two cameras: one with a 150 mm distance between the sensors and another with a longer baseline. The expected accuracy for the camera with the shorter baseline is better than 10% at a 200 meter distance. We have achieved 10% accuracy with the H-camera with calibrated sensors, even though the 3D-printed parts were not thermally stable and some error accumulated over time. It was a very pleasant surprise that the software was still able to deal with somewhat un-calibrated images and detect distances very accurately, creating impressive 3D scenes: Scene_viewer

The second camera will have a 280 mm distance between sensors, which is determined by the longest FPC cables we can use without signal losses. It promises to nearly double the measured distance with the same degree of accuracy, so extremely long range 3D scenes can be produced.
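The link between baseline and usable range can be illustrated with the textbook relation for a rectified stereo pair, Z = f·B/d, which gives a relative range error of roughly Z·δd/(f·B). The sketch below is only an illustration under that simplified two-view model; the focal length and disparity error are hypothetical placeholders, not measured parameters of the MNC393-XCAM, and the function names are invented for this example.

```python
def relative_range_error(distance_m, baseline_m, focal_px, disparity_err_px):
    """Approximate dZ/Z for a rectified pair: Z = f*B/d  =>  dZ/Z ~ Z*dd/(f*B)."""
    return distance_m * disparity_err_px / (focal_px * baseline_m)


def range_for_relative_error(target_error, baseline_m, focal_px, disparity_err_px):
    """Distance at which the relative range error reaches target_error."""
    return target_error * focal_px * baseline_m / disparity_err_px


# Hypothetical optics and matching precision (assumptions, not Elphel data).
FOCAL_PX = 2000.0
DISPARITY_ERR_PX = 0.1

short_range = range_for_relative_error(0.10, 0.150, FOCAL_PX, DISPARITY_ERR_PX)
long_range = range_for_relative_error(0.10, 0.280, FOCAL_PX, DISPARITY_ERR_PX)

# In this model the range at a fixed relative error scales linearly with
# the baseline, so the gain is simply 280 mm / 150 mm.
range_gain = long_range / short_range
print(f"range gain from the longer baseline: {range_gain:.2f}x")
```

Under this model the gain does not depend on the assumed focal length or disparity error, only on the ratio of the two baselines.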

The Long Range Multi-View Stereo Camera with 4 sensors MNC393-XCAM is planned for release in early 2018.

November 22, 2017

by Andrey Filippov

Elphel uses an embedded GNU/Linux distribution based on Yocto. For most of our development (excluding only mechanical and PCB design) we use the universal Eclipse IDE: for FPGA development, Linux kernel driver development, embedded applications and web applications, and for editing LaTeX texts. We also use this popular IDE to deliver pre-configured projects to our users, making it easier for them to start modifying the initial camera software efficiently and then to initiate new projects.


September 25, 2017

by Paulina Filippova and Fyodor Filippov

Setting 3D camera on the rock at Cape Alava

Testing 3D camera on a road trip

In August of 2017, my family and I went on a trip to the Pacific Northwest, partially for a much needed vacation, but equally importantly, to test my dad’s new 3D camera. My dad had been designing calibrated multi-sensor cameras for as long as I can remember, and since February had been working determinedly on developing fundamentally new algorithms for reconstructing a 3D model from a set of 4 simultaneously taken photographs. Now that the camera and the software were ready, there was no better time to test it.


September 20, 2017

by Andrey Filippov

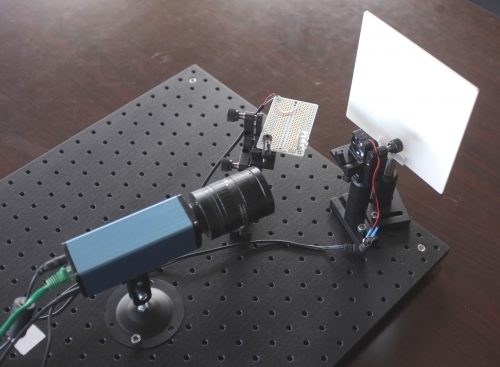

Figure 1. Four sensor stereo camera

Scroll down or just hyper-jump to Scene viewer for the links to see example images and reconstructed scenes.


January 19, 2017

by Andrey Filippov

Fig.1. Image comparison of the different processing stages output

Results of the processing of the color image

The previous blog post “Lens aberration correction with the lapped MDCT” described our experiments with the lapped MDCT[1] for optical aberration correction of a single color channel and the separation of the asymmetrical kernel into a small asymmetrical part for direct convolution and a larger symmetrical one to be applied in the frequency domain of the MDCT. We supplemented this processing chain with additional image conditioning steps to evaluate the overall quality of the results and the feasibility of the MDCT approach for processing in the camera FPGA.

The image comparator in Fig.1 shows the difference between the images generated from the results of several processing stages. It makes it possible to compare any two of the image layers either by sliding the image separator or by just clicking on the image, which alternates the right/left images. Zoom is controlled by the scroll wheel (clicking on the zoom indicator fits the image), pan by dragging.

The original image was acquired with an Elphel model 393 camera with a 5 Mpix MT9P006 image sensor and a Sunex DSL227 fisheye lens, and saved in jp4 format as raw Bayer data at 98% compression quality. Calibration was performed with the Java program using the calibration pattern visible in the image itself. The program is designed to work with low-distortion lenses, so the fisheye was a stretch: the calibration kernels near the edges are just replicated from the ones closer to the center, so aberration correction is only partial in those areas.

The first two layers differ only by the added annotations; they both show the output of simple bilinear demosaic processing, the same as generated by the camera when running in JPEG mode. The next layers show different stages of the processing; details are provided later in this blog post.


December 17, 2016

by Andrey Filippov

Now that we have finished with the basic camera functionality and tested the first Eyesis4π built with the new 10393 system boards (it is smaller, requires less power, and is faster), we are moving forward with the in-camera image processing. We plan to combine our current camera calibration methods, which require off-line post-processing, with real-time image correction using the camera’s own FPGA resources. This project will require switching between the actual FPGA coding and the software implementation of the same algorithms before going to the next step – software is still easier to design. The first part was in the FPGA realm – it was to implement the fundamental image processing block that we already know we’ll be using and see how much of the resources it needs.

DCT type IV as a building block for in-camera image processing

We consider a small (8×8 pixel) DCT-IV to be a universal block for conditioning of the raw acquired images. Such operations as correction of lens optical aberrations, color conversion (de-mosaic) in the presence of lateral chromatic aberration, and image rectification (de-warping) are easier to perform in the frequency domain using the convolution-multiplication property and other algorithms.
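The convolution-multiplication property mentioned above is easiest to see with the plain DFT, where circular convolution in the pixel domain equals pointwise multiplication in the frequency domain. The following is a minimal 1-D sketch using only the Python standard library; it illustrates the property itself rather than the DCT-IV/MDCT processing used in the camera, and the sample values and kernel are made up for the example.

```python
import cmath


def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform (fine for an 8-point example)."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = sum(values[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                  for t in range(n))
        out.append(acc / n if inverse else acc)
    return out


def circular_convolve(signal, kernel):
    """Direct circular convolution in the sample domain."""
    n = len(signal)
    return [sum(signal[k] * kernel[(i - k) % n] for k in range(n))
            for i in range(n)]


def convolve_via_dft(signal, kernel):
    """Same result via pointwise multiplication of the two spectra."""
    spectrum = [a * b for a, b in zip(dft(signal), dft(kernel))]
    return [v.real for v in dft(spectrum, inverse=True)]


# One 8-sample row of a tile and a small symmetric blur kernel (illustrative).
tile_row = [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0]
kernel = [0.5, 0.25, 0.0, 0.0, 0.0, 0.0, 0.0, 0.25]

direct = circular_convolve(tile_row, kernel)
via_dft = convolve_via_dft(tile_row, kernel)
max_diff = max(abs(a - b) for a, b in zip(direct, via_dft))
print("largest difference between the two methods:", max_diff)
```

The two results agree to floating-point precision; for large kernels the frequency-domain route needs far fewer multiplications, which is the motivation for doing the correction there.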

In post-processing we use the DFT (Discrete Fourier Transform) over rather large (64×64 to 512×512) tiles, but that would be too much for in-camera processing. The first area of savings is the tile size – for good lenses we do not need such large convolution kernels. Additionally, we plan to combine several processing steps into one (based on our off-line post-processing experience), so we do not need to sub-sample images – in our current software we double the resolution of the raw images at the beginning and scale back the final result to reduce image degradation caused by re-sampling.

The second area where we plan to reduce computations is the replacement of the DFT with the DCT, which is designed to be fed with purely real data and so requires fewer arithmetic operations than the DFT, which processes complex input values.

Why “type IV” of the DCT?

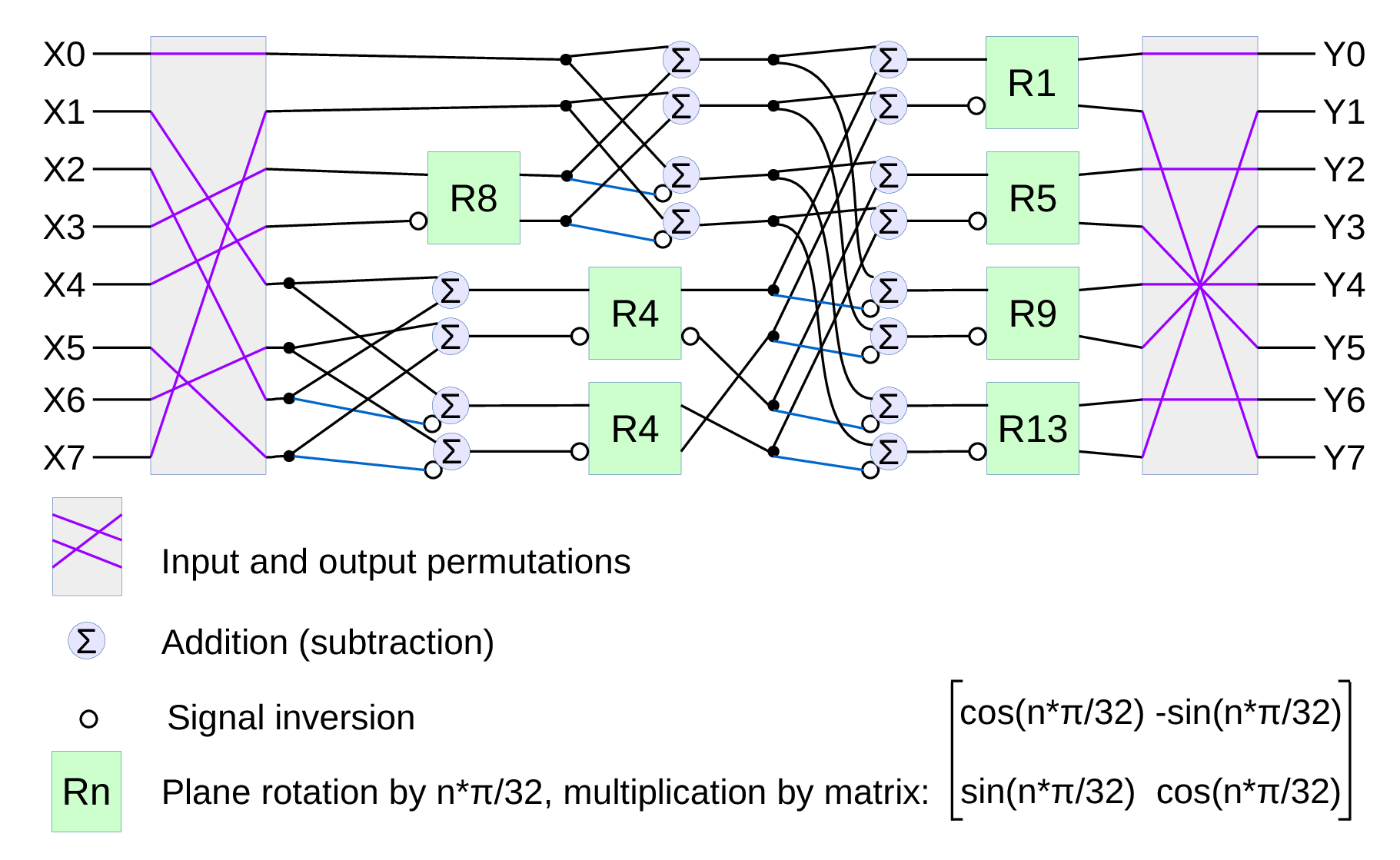

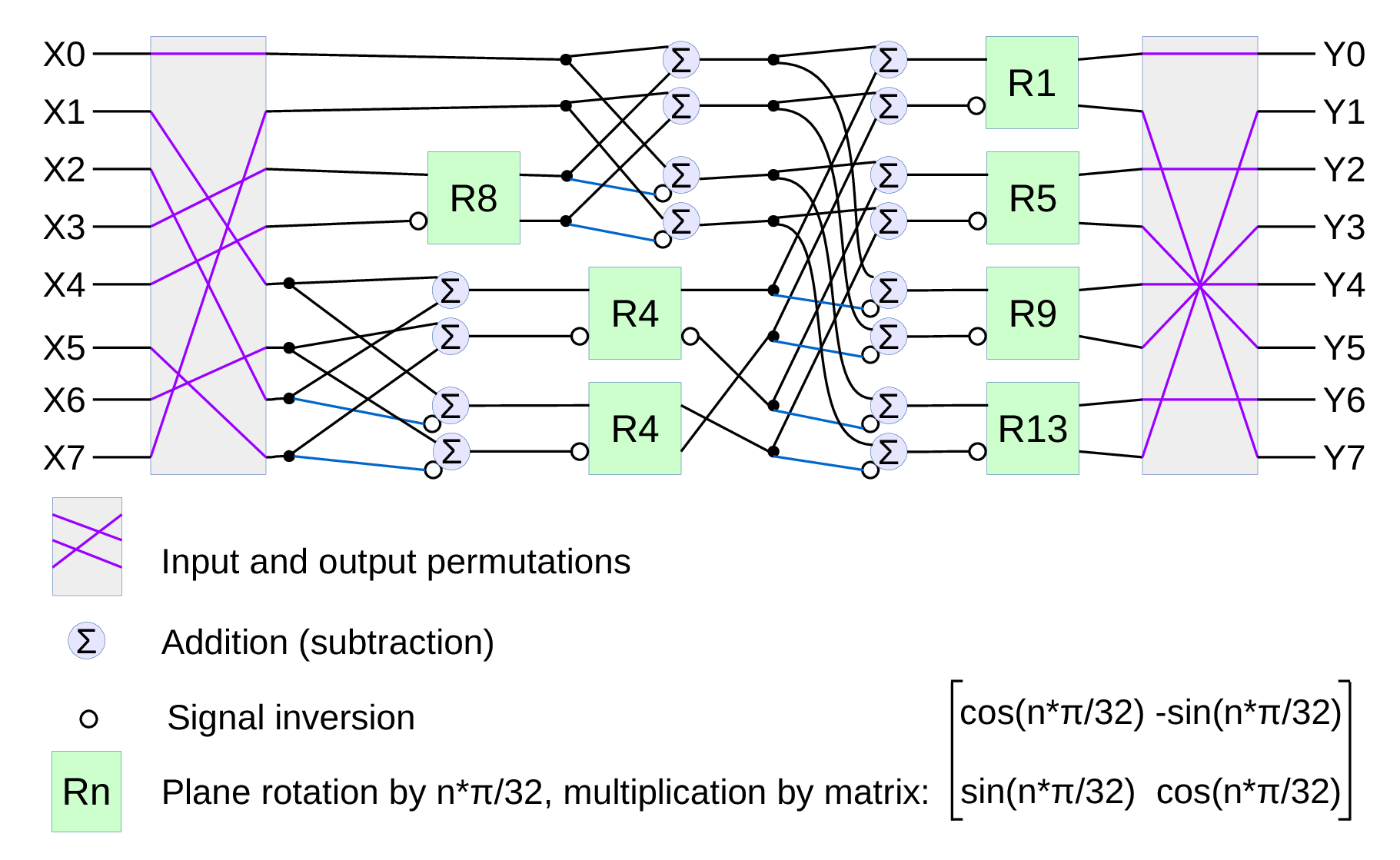

Fig.1. Signal flow graph for DCT-IV

We already have DCT type II implemented for the JPEG/JP4 compression, and we still needed another one. Type IV is used in audio compression because it can be converted to a modified discrete cosine transform (MDCT) – a procedure in which multiple overlapped windows are processed one at a time and the results are seamlessly combined without the block artifacts that are familiar from JPEG at low compression quality settings. We too need a lapped transform to process large images with relatively small (much smaller than the image itself) convolution kernels, and DCT-IV is a perfect fit. An 8-point DCT-IV makes it possible to transform 16-point segments with 8-point overlap in a reversible manner – the inverse transformation of the 8-point data may be converted to 16-point overlapping segments, and when added together these segments yield the original data.
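The reversibility of the lapped transform can be checked numerically in one dimension. The sketch below is an illustration, not the FPGA implementation: it evaluates the MDCT directly from its textbook definition (rather than folding the 16 samples and running an 8-point DCT-IV), and it assumes a sine window, which satisfies the Princen-Bradley condition needed for the aliasing terms of neighboring segments to cancel. All names are invented for this example.

```python
import math

N = 8  # coefficients per segment; each segment spans 2*N = 16 samples


def sine_window(n):
    """Assumed window: w[n]^2 + w[n+N]^2 = 1 (Princen-Bradley condition)."""
    return math.sin(math.pi * (n + 0.5) / (2 * N))


def mdct(segment):
    """16 windowed samples -> 8 coefficients (direct textbook definition)."""
    return [sum(segment[n] * math.cos(math.pi / N * (n + 0.5 + N / 2) * (k + 0.5))
                for n in range(2 * N))
            for k in range(N)]


def imdct(coeffs):
    """8 coefficients -> 16 time-aliased samples (2/N scaling for windowed use)."""
    return [2.0 / N * sum(coeffs[k] * math.cos(math.pi / N * (n + 0.5 + N / 2) * (k + 0.5))
                          for k in range(N))
            for n in range(2 * N)]


def lapped_roundtrip(signal):
    """Transform overlapping segments and overlap-add the inverse results."""
    window = [sine_window(n) for n in range(2 * N)]
    padded = [0.0] * N + list(signal) + [0.0] * N  # so edge samples overlap too
    restored = [0.0] * len(padded)
    for start in range(0, len(padded) - N, N):      # hop of 8 = 8-point overlap
        segment = [padded[start + n] * window[n] for n in range(2 * N)]
        aliased = imdct(mdct(segment))
        for n in range(2 * N):
            restored[start + n] += aliased[n] * window[n]
    return restored[N:-N]


# Length must be a multiple of N for this simple sketch.
signal = [float((7 * i) % 11) for i in range(32)]
restored = lapped_roundtrip(signal)
max_error = max(abs(a - b) for a, b in zip(signal, restored))
print("largest reconstruction error:", max_error)
```

Each individual inverse segment is aliased and does not match the input on its own; only the sum of the two segments covering a sample restores it, which is the property the paragraph above describes.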


October 24, 2016

by Oleg Dzhimiev

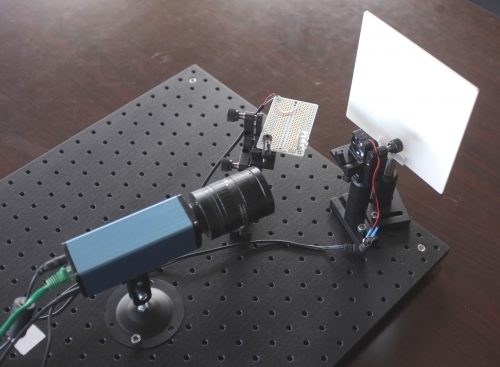

Operation modes in conventional CMOS image sensors with the electronic rolling shutter

Flash test setup

Most CMOS image sensors have an Electronic Rolling Shutter: the images are acquired by scanning line by line. Their strengths and weaknesses are well known, and extremely wide usage has made the technology somewhat perfect – Andrey might have already said this somewhere before.

There are CMOS sensors with a Global Shutter BUT (if we take the same optical formats):

- because of more elements per pixel – they have lower full well capacity and quantum efficiency

- because analog memory is used – they have higher dark current and higher shutter ratio


So, the typical sensor with ERS may support 3 modes of operation:

- Electronic Rolling Shutter (ERS) Continuous

- Electronic Rolling Shutter (ERS) Snapshot

- Global Reset Release (GRR) Snapshot

GRR Snapshot was available in the 10353 cameras, but we never tried it ourselves – one had to write directly to the sensor’s register to turn it on. Now it is tested and working in the 10393s, available through the TRIG (0x14) parameter.
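The difference between the rolling shutter and Global Reset Release modes can be pictured as a per-row exposure window. The sketch below is a simplified timing model with made-up numbers and hypothetical function and parameter names; it is not the camera API and does not touch the TRIG parameter. In ERS the reset of each row runs ahead of its readout by the exposure time, while in GRR all rows are reset together and then read out one by one, so later rows keep integrating longer unless the light is limited by a flash or a mechanical shutter.

```python
def row_exposure_windows(mode, rows, exposure_s, row_time_s):
    """Return (start, end) of integration for every row, in seconds.

    mode: "ERS" (rolling reset) or "GRR" (global reset, rolling readout).
    """
    windows = []
    for row in range(rows):
        readout = exposure_s + row * row_time_s   # rows are read sequentially
        if mode == "ERS":
            start = row * row_time_s              # reset scans ahead of readout
        elif mode == "GRR":
            start = 0.0                           # all rows reset at once
        else:
            raise ValueError("mode must be 'ERS' or 'GRR'")
        windows.append((start, readout))
    return windows


# Illustrative values only: 4 rows, 1 ms exposure, 0.1 ms per row readout.
ers = row_exposure_windows("ERS", rows=4, exposure_s=1e-3, row_time_s=1e-4)
grr = row_exposure_windows("GRR", rows=4, exposure_s=1e-3, row_time_s=1e-4)

ers_durations = [end - start for start, end in ers]
grr_durations = [end - start for start, end in grr]
print("ERS integration per row:", ers_durations)
print("GRR integration per row:", grr_durations)
```

In this model every ERS row integrates for the same time but at a different moment, whereas GRR rows all start together and differ in duration, which is why GRR is typically paired with a flash.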


October 2, 2016

by Olga Filippova

On October 8th, 2016 Andrey will be presenting his work on VDT – Free Software Environment for FPGA Development at an open source digital design conference, ORCONF 2016.

The conference will take place in Bologna, Italy, and we are glad to have the opportunity to meet some of the European users of Elphel cameras, and to connect with the community of developers excited about open source design, free software and open hardware.

Elphel will be represented at the conference by Andrey Filippov from the USA headquarters and Alexandre Poltorak, founder of the Swiss mobile mapping company 3D4Pi, which works closely with Elphel to integrate Eyesis4Pi, a stereophotogrammetric camera, for image-based 3D reconstruction applications. Andrey will bring and demonstrate the new multisensor NC393 H-camera, and Alexandre plans to take some panoramic footage with the Eyesis4Pi camera while in Bologna.

September 19, 2016

by Andrey Filippov

Since we started to deliver the first NC393 series cameras in May, we have been working on the camera software – the original version was rather limited. While it was capable of serving images/video over the network and recording them on the internal M.2 SSD, it did not have the advanced image acquisition control (through the GUI and programmatically) that was standard for the earlier NC353 series. Now the core functionality is operational, and in a month we plan to have the remaining parts (inter-camera synchronization, working with multiple sensors per port with the 10359 multiplexer, GPS+IMU logging) online too. The FPGA code is already ported, but it needs to be tested, and a fair amount of troubleshooting, identifying the problems and weeding out the bugs is still left to be done.

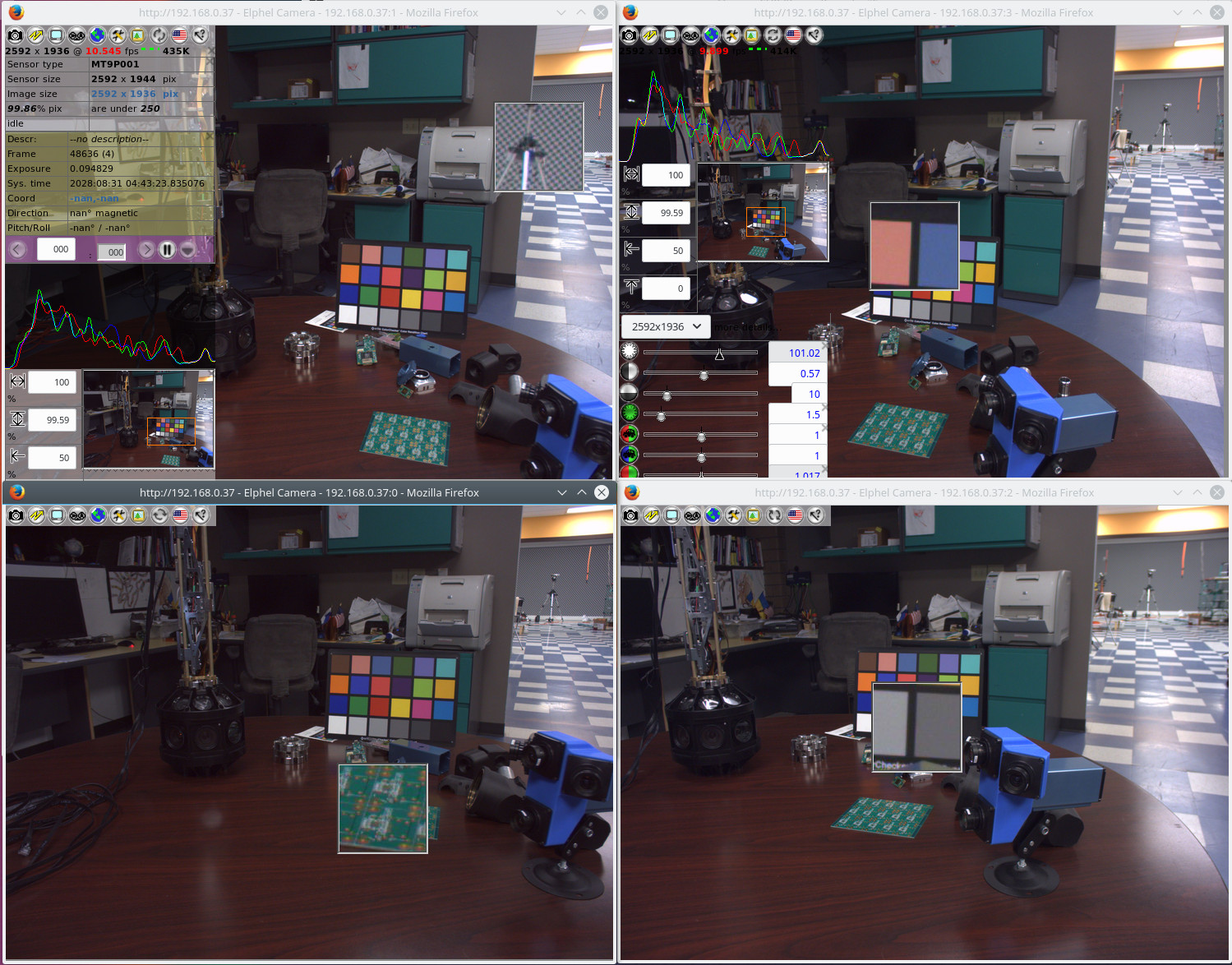

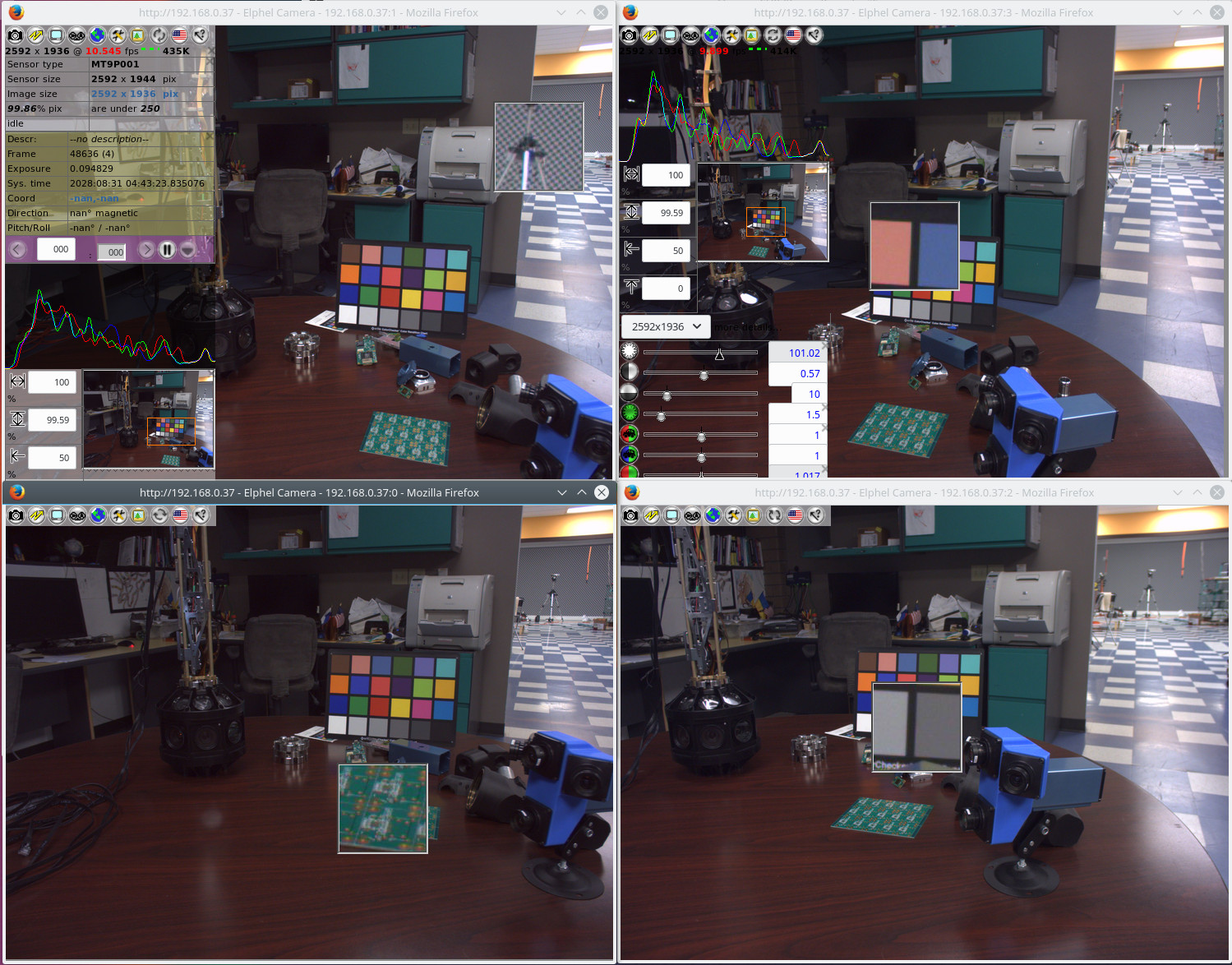

Fig 1. Four camvc instances for the four channels of NC393 camera

Users of earlier Elphel cameras can easily recognize the familiar camvc web interface – Fig. 1 shows a screenshot of four instances of this interface controlling the 4 sensors of an NC393 camera in the “H” configuration.

