Reaching 220 MB/s sustained write speed with SATA-2 controller

Introduction

Elphel cameras use camogm, a user space application, to record acquired images to disk storage. The application was developed to work with storage devices such as disk drives or USB drives mounted in the operating system. The Elphel393 model cameras have a SATA-2 controller implemented in FPGA and a system driver for this controller, and they can be equipped with an SSD drive. We were interested in running write speed tests with the SATA controller and a couple of M.2 SSDs to find the top disk bandwidth camogm can use during image recording. Our initial approach was the commonly accepted method of using the hdparm and dd system utilities. The first disk was a SanDisk SD8SMAT128G1122. According to the manufacturer specification [pdf], this is a low power disk for embedded applications that can reach 182 MB/s sequential write speed in SATA-3 mode. We had the following:

    ~# hdparm -t /dev/sda2
    /dev/sda2:
     Timing buffered disk reads: 274 MB in 3.02 seconds = 90.70 MB/sec
    ~# time sh -c "dd if=/dev/zero of=/dev/sda2 bs=500M count=1 && sync"
    1+0 records in
    1+0 records out
    real    0m6.096s
    user    0m0.000s
    sys     0m5.860s

which gives a total write speed of around 82 MB/s.
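The figure follows from the dd run: 500 MB written in the 6.096 s reported as `real` time. Below is a minimal sketch of that arithmetic in C. The function names are ours, chosen for illustration, and the size is taken as 500 MB as in the text (dd's `M` suffix denotes 1048576 bytes, so the result in decimal megabytes would be slightly higher).

```c
#include <stdio.h>

/* Average throughput in MB/s for `megabytes` written in `seconds`.
 * Returns 0.0 for a non-positive duration. */
double write_speed_mb_s(double megabytes, double seconds)
{
    if (seconds <= 0.0)
        return 0.0;
    return megabytes / seconds;
}

/* Print the figure for one dd run; size and time are taken from the listing. */
void print_write_speed(const char *label, double megabytes, double seconds)
{
    printf("%s: %.1f MB/s\n", label, write_speed_mb_s(megabytes, seconds));
}
```

Calling `print_write_speed("SD8SMAT128G1122", 500.0, 6.096)` reports about 82 MB/s, matching the number above.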

The second disk was a Crucial CT250MX200SSD6 [pdf], whose sequential write speed should be 500 MB/s in SATA-3 mode. We had the following:

    ~# hdparm -t /dev/sda2
    /dev/sda2:
     Timing buffered disk reads: 236 MB in 3.01 seconds = 78.32 MB/sec
    ~# time sh -c "dd if=/dev/zero of=/dev/sda2 bs=500M count=1 && sync"
    1+0 records in
    1+0 records out
    real    0m6.376s
    user    0m0.010s
    sys     0m5.040s

which gives a total write speed of around 78 MB/s. Our preliminary tests had shown that the controller can achieve 200 MB/s write speed. With that in mind, these figures were not very promising, so we decided to add a new feature to the latest version of camogm: the ability to write data to a raw storage device. A raw storage device is a disk or a disk partition that is accessed directly, bypassing operating system caches and buffers. This type of access can potentially improve I/O performance, but it requires additional effort to implement data management in software.

First approach

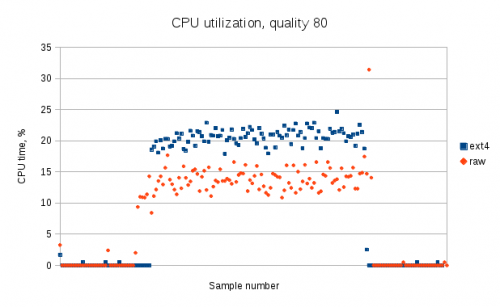

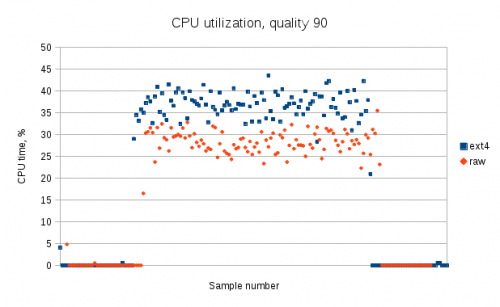

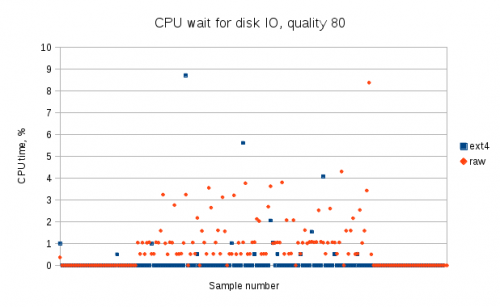

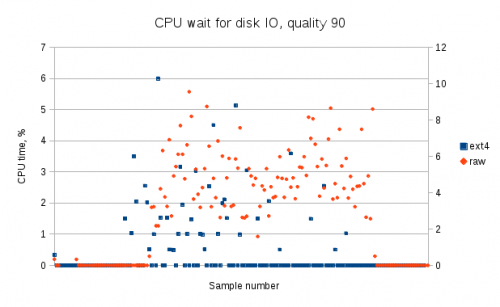

In the first attempt we tried to bypass the file system and used a device file (/dev/sda in our case) in camogm for I/O operations. We compared CPU load and I/O wait time while writing to a partition with an ext4 file system and to the device file. dstat turned out to be a very handy tool for generating system resource statistics. The statistics were collected during three periods of operation: idle before writing, during writing, and idle after writing. All three periods can be clearly seen in the figures. We also changed the quality parameter, which affects the resulting size of the JPEG files. Files with the quality parameter set to 80 were around 1 MB in size, and files with the quality parameter set to 90 were almost 2 MB.

As expected, the figures show that a write to the device file takes less CPU time than the same write through the file system, because no file system operations or caches are involved.

"CPU wait for disk IO" in the figures is the percentage of time the CPU waits for an I/O operation to complete. Here the camogm process spends more CPU time waiting for data to be written during device file operations than during file system operations, which again could be explained by the fact that file system level caching is not used.
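To make the I/O wait percentage concrete, here is a minimal sketch of how such a value can be derived on Linux from two samples of the aggregate `cpu` line in /proc/stat. It is not dstat's code. The struct and function names are hypothetical, and only the first seven counters of the line are used.

```c
#include <stdio.h>
#include <string.h>

/* Cumulative CPU time counters from the aggregate "cpu" line of /proc/stat,
 * in clock ticks. Only the first seven fields are used here. */
struct cpu_sample {
    unsigned long long user, nice, system, idle, iowait, irq, softirq;
};

/* Parse a line such as "cpu  100 0 50 800 50 0 0 ...".
 * Per-CPU lines ("cpu0 ...") and other lines are rejected.
 * Returns 0 on success, -1 otherwise. */
int parse_cpu_line(const char *line, struct cpu_sample *s)
{
    int n;

    if (strncmp(line, "cpu ", 4) != 0)
        return -1;
    n = sscanf(line, "cpu %llu %llu %llu %llu %llu %llu %llu",
               &s->user, &s->nice, &s->system, &s->idle,
               &s->iowait, &s->irq, &s->softirq);
    return n == 7 ? 0 : -1;
}

/* Sum of all counters in one sample. */
static unsigned long long total_ticks(const struct cpu_sample *s)
{
    return s->user + s->nice + s->system + s->idle +
           s->iowait + s->irq + s->softirq;
}

/* Percentage of the interval between two samples spent waiting for I/O.
 * Returns 0.0 if no time elapsed between the samples. */
double iowait_percent(const struct cpu_sample *before,
                      const struct cpu_sample *after)
{
    unsigned long long dt = total_ticks(after) - total_ticks(before);

    if (dt == 0)
        return 0.0;
    return 100.0 * (double)(after->iowait - before->iowait) / (double)dt;
}
```

Sampling the line once per second and passing consecutive samples to `iowait_percent` would give a curve comparable in meaning to the one in the figures.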

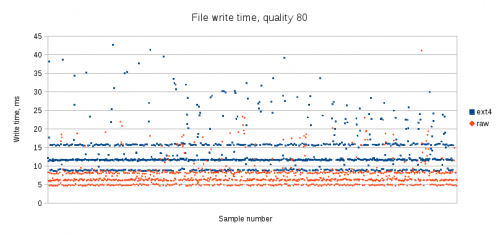

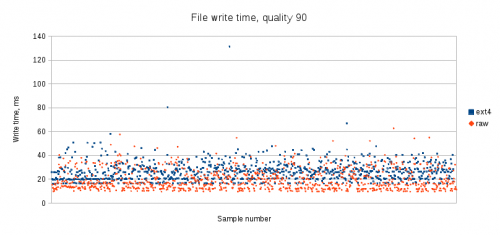

We also measured the time camogm spent on writing each individual file to device file and to files on ext4 file system.

The clear patterns in the figures correspond to the several sensor channels used during recording; each channel produced JPEG files of a different size from the other channels. As we have already seen, file system caching influences the results, and the difference in overall write time becomes less obvious as the file size increases.
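A per-file measurement of this kind can be sketched as follows. This is an illustration under our own assumptions, not camogm's code: the function names are hypothetical, and a monotonic clock is used so that the result is not affected by system time adjustments.

```c
#define _POSIX_C_SOURCE 200809L /* for clock_gettime() */
#include <stddef.h>
#include <time.h>
#include <unistd.h>

/* Difference between two timestamps in milliseconds. */
double timespec_diff_ms(const struct timespec *start,
                        const struct timespec *end)
{
    return (double)(end->tv_sec - start->tv_sec) * 1e3 +
           (double)(end->tv_nsec - start->tv_nsec) / 1e6;
}

/* Write `len` bytes to `fd`, retrying on short writes, and store the
 * elapsed time in `*elapsed_ms`. Returns 0 on success, -1 on error. */
int timed_write(int fd, const void *buf, size_t len, double *elapsed_ms)
{
    const char *p = buf;
    struct timespec t0, t1;

    clock_gettime(CLOCK_MONOTONIC, &t0);
    while (len > 0) {
        ssize_t n = write(fd, p, len);
        if (n <= 0)
            return -1; /* error or no progress */
        p += n;
        len -= (size_t)n;
    }
    clock_gettime(CLOCK_MONOTONIC, &t1);
    *elapsed_ms = timespec_diff_ms(&t0, &t1);
    return 0;
}
```

Note that `write()` on a file in a mounted file system returns once the data has been copied to the page cache, so without an explicit sync the measured time mostly reflects the cache, which is consistent with the caching effect described above.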

Although the tests showed that writing to the file system and writing to the device file had different overall performance, we could not achieve any significant gain that would narrow the gap between the initial results and the preliminary write speed data. We decided to try another approach: pass only commands to the disk driver and write the data from the driver itself.

Second approach

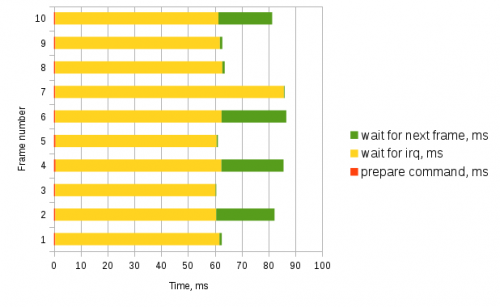

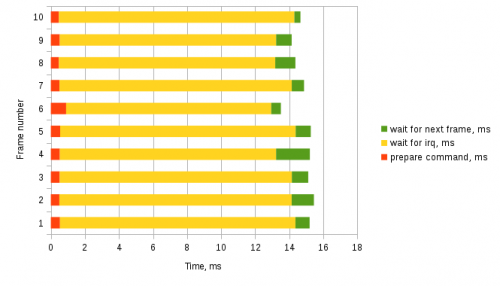

The idea behind this approach was simple. We already have JPEG data in a circular buffer in memory, and the disk driver only needs pointers to the data we want to write at any given moment. camogm was modified to pass those pointers and some meta information to the driver via its sysfs interface. We also modified our AHCI driver to add new functions. The driver accepts a command from camogm, aligns the data buffers to a predefined boundary and the frame as a whole to a physical sector boundary, and places the command in a command queue. Commands are picked from the queue as soon as the current disk transaction is complete. We measured the time the driver spent preparing a new command, waiting for an interrupt after a command had been issued, and waiting for a new command to arrive. The total data size per transaction was around 9.5 MB for the SD8SMAT128G1122 and around 3 MB for the CT250MX200SSD6. The disks were installed in cameras with 14 Mpx and 5 Mpx sensors respectively.
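The sector padding and command queue described above can be sketched in plain C as follows. This is a simplified user space model, not the driver's code: the 512-byte sector size, the queue depth, and all struct and function names are assumptions made for illustration.

```c
#include <stddef.h>
#include <stdint.h>

#define SECTOR_SIZE 512u /* assumed physical sector size */
#define QUEUE_DEPTH 8u   /* assumed depth, must be a power of two */

/* Round `len` up to the next multiple of `align` (a power of two). */
size_t align_up(size_t len, size_t align)
{
    return (len + align - 1) & ~(align - 1);
}

/* One write request: a pointer into the circular buffer plus lengths. */
struct write_cmd {
    const uint8_t *data;
    size_t len;        /* payload length in bytes */
    size_t padded_len; /* length rounded up to whole sectors */
};

/* Fixed-size FIFO of pending commands. */
struct cmd_queue {
    struct write_cmd slots[QUEUE_DEPTH];
    unsigned head; /* index of the next command to take */
    unsigned tail; /* index of the next free slot */
};

/* Queue a frame for writing. Returns 0, or -1 if the queue is full. */
int queue_put(struct cmd_queue *q, const uint8_t *data, size_t len)
{
    struct write_cmd *c;

    if (q->tail - q->head == QUEUE_DEPTH)
        return -1;
    c = &q->slots[q->tail % QUEUE_DEPTH];
    c->data = data;
    c->len = len;
    c->padded_len = align_up(len, SECTOR_SIZE);
    q->tail++;
    return 0;
}

/* Take the oldest command, as would happen when the current disk
 * transaction completes. Returns 0, or -1 if the queue is empty. */
int queue_get(struct cmd_queue *q, struct write_cmd *out)
{
    if (q->head == q->tail)
        return -1;
    *out = q->slots[q->head % QUEUE_DEPTH];
    q->head++;
    return 0;
}
```

With these assumptions a 1000-byte frame occupies two sectors, so `padded_len` is 1024. Only pointers and lengths are queued, and the frame data itself is not copied, which is the point of this approach.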

These figures show that the time the driver spends on command preparation is almost negligible compared to the time spent waiting for the write command to complete, which is exactly what we wanted. We achieved almost 160 MB/s write speed for the SD8SMAT128G1122 and around 220 MB/s for the CT250MX200SSD6. Here is a summary of the results obtained in the different write modes for the two test disks:

| Disk | File system access | Device file access | Raw driver access |
|---|---|---|---|
| SD8SMAT128G1122 | 82 MB/s | 90 MB/s | 160 MB/s |
| CT250MX200SSD6 | 78 MB/s | – | 220 MB/s |

CT250MX200SSD6 was not tested in device file access mode as it was clear that this method did not fit our needs.

Disk access sharing

One of the problems we had to solve while working on the driver was sharing disk access between the operating system and the driver during recording. The disk in the camera had two partitions: one was formatted with an ext4 file system and mounted in the operating system, and the other was used as a data buffer for camogm. A user space application could access the mounted partition while camogm is writing to the disk data buffer, and this situation has to be handled correctly. camogm, as the top priority process, should always have the full disk bandwidth, and other system processes should be granted access only while camogm is waiting for the next frame. libata has a built-in command deferral mechanism, and we used it in the driver to decide whether a system process should get access to the disk or its command should be deferred. To use this mechanism, we added our function to the ATA port operations structure:

    static struct ata_port_operations ahci_elphel_ops = {
        ...
        .qc_defer = elphel_qc_defer,
    };

This function is called every time a new system command arrives and the driver can defer the command in case it is busy writing data.
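The decision such a hook makes can be modeled in user space as shown below. This is only a sketch of the policy described above, not the body of `elphel_qc_defer`: in libata the hook receives a `struct ata_queued_cmd *` and returns 0 to let the command proceed or a non-zero `ATA_DEFER_*` code to have it retried later. The state structure, the field names, and the constants here are hypothetical stand-ins.

```c
#include <stdbool.h>

/* Stand-ins for the hook's return values: 0 lets the command proceed,
 * non-zero asks for it to be deferred and retried later. */
enum { CMD_PROCEED = 0, CMD_DEFER = 1 };

/* Hypothetical view of the driver state relevant to the decision. */
struct port_state {
    bool recording_write_active; /* a camogm write is in progress */
    unsigned pending_frames;     /* frames queued by camogm, not yet written */
};

/* Decide whether a command coming from the operating system may run now.
 * System commands are allowed only while the recorder is idle, that is,
 * while camogm is waiting for the next frame. */
int model_qc_defer(const struct port_state *st)
{
    if (st->recording_write_active || st->pending_frames > 0)
        return CMD_DEFER;
    return CMD_PROCEED;
}
```

In this model a file system request that arrives in the middle of a frame write is pushed back and retried, so the recording path keeps the full disk bandwidth.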
