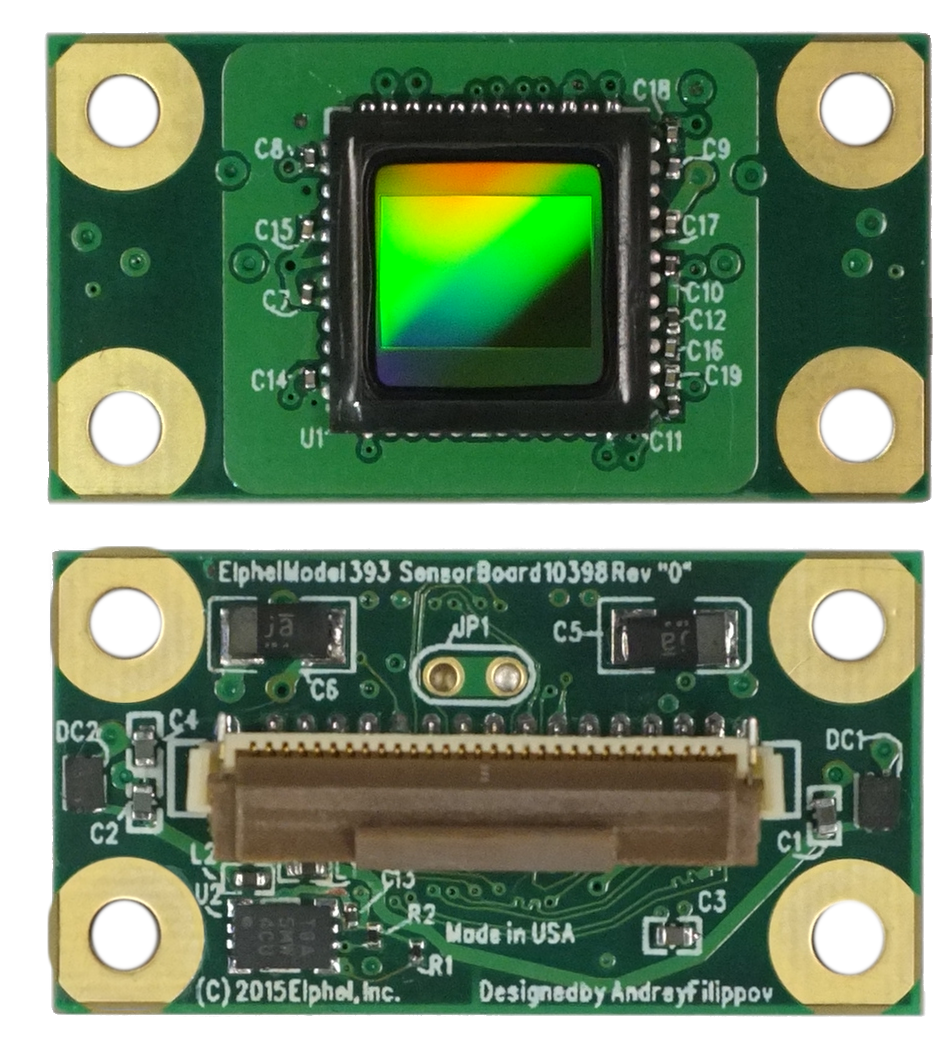

NC393 progress update: 14MPix Sensor Front End is up and running

10398 Sensor Front End with 14MPix MT9F002

Sensors (ON Semiconductor MT9F002) and blank PCBs arrived in time and so I was able to hand-assemble two 10398 boards and start testing them. I had some minor problems getting data output from the first board, but it turned out to be just my bad soldering of the sensor, the second board worked immediately. To my surprise I did not have any problems with HiSPi decoder that I simulated using the sensor model I wrote myself from the documentation, so the color bar test pattern appeared almost immediately, followed by the real acquired images. I kept most of the sensor settings unmodified from the default values, just selected the correct PLL multiplier, output signal levels (1.8V HiVCM – compatible with the FPGA) and packetized format, the only other registers I had to adjust manually were exposure and color analog gains.

As it was reasonable to expect, sensitivity of the 14MPix sensor is lower than that of the 5MPix MT9P006 – our initial estimate is that it is 4 times lower, but this needs more careful measurements to find out exposure required for pixel saturation with the same illumination. Analog channel gains for both sensors we set slightly higher than minimal ones for the saturation, but such rough measurements could easily miss a factor of 1.5. MT9F002 offers more controls over the signal chain gains, but any (even analog) gain in the chain that boosts signal above the minimal needed for saturation proportionally reduces used “well capacity”, while I expect the Full Well Capacity (FWC) is already not very high for the 1.4μm x1.4 μm pixel sensor. And decrease in the number of electrons stored in a pixel accordingly increases the relative shot noise that reveals itself in the highlight areas. We will need to accurately measure FWC of the MT9F002 and have better sensitivity comparison, including that of the binned mode, but I expect to find out that 5MPix sensor are not obsolete yet and for some applications may still have advantages over the newer sensors.

Both sensors used identical f=4.5mm F3.0 lenses, the 5MPix one lens is precisely adjusted during calibration, the lens of the 14MPix sensor is just attached and focused by hand using the lens thread, no tilt correction was performed. Both images are saved at 100% JPEG quality (lossless compression) to eliminate compression artifacts, both used in-camera simple 3×3 demosaic algorithm. The 14 MPix image has visible checkerboard pattern caused by the difference of the 2 green values (green in red row, and green in the blue row). I’ll check that it is not caused by some FPGA code bug I might introduce (save as raw image and do de-bayer on a host computer), but it may also be caused by pixel cross-talk in the sensor. In any case it is possible to compensate or at least significantly reduce in the output data.

MT9F002 transmits data over 5 differential 100Ω pairs: 1 clock pair and 4 data lanes. For the initial tests I used our regular 70mm flex cable used for the parallel interface sensors, and just soldered 5 of 100Ω resistors to the contacts at the camera side end. It did work and I did not even have to do any timing adjustments of the differential lanes. We’ll do such adjustments in the future to get to the centers of the data windows – both the sensor and the FPGA code have provisions for that. The physical 100Ω load resistors were needed as it turned out that Xilinx Zynq has on-chip differential termination only for the 2.5V (or higher) supply voltages on the regular (not “high performance”) I/Os and this application uses 1.8V interface power – I missed this part of documentation and assumed that all the differential inputs have possibility to turn on differential termination. 660 Mbps/lane data rate is not too high and I expect that it will be possible to use short cables with no load resistors at all, adding such resistors to the 10393 board is not an option as it has to work with both serial and parallel sensor interfaces. Simultaneously we designed and placed an order for dedicated flex cables 150mm long, if that will work out we’ll try longer (450mm) controlled impedance cables.

This 12mp sensor looks fantastic ( besides this checkerboard pattern that you pointed out) congratulations it looks fantastic!

is the jpeg jp4 format still being used for raw?

how different is the method to control the camera from a second computer compared to the nc353?

could this 12mp sensor be pixelbinned (summing) until we reach HD resolution in order to gain light to compensate this loss of electrons reaching the full well capacity too early?

This sensor has a full datasheet available online – http://www.onsemi.com/pub_link/Collateral/MT9F002-D.PDF , and yes – it has multiple binning and scaling options that we had not tried yet – at this point we are more concerned with general hardware compatibility, and will likely test some newer sensors of the same 1/2.3″ format (so compatible with our calibrated SFE/optics).

The camera control is now very basic – I was working on the FPGA code, so no advanced drivers yet – just a big Python application that communicates with FPGA. Later the camera control will have similar features, the new camera is also based on command sequencers as the NC353. It will be more Python-centric (instead of PHP).

JP4 – yes, it will definitely stay as the main image format used for the serious image post-processing.

Andrey,

You can try to do termination by dirty temporally hack on 1.8V side:

use differential SSTL with UNTUNED_SPLIT_50 flag.

Konstantin,

Thank you. I’ll try that – seems it should work.