Interfacing Elphel cameras with GStreamer, OpenCV, OpenGL/GLSL and Python. Create custom video processing plugins and benefit from DSP and GPU based optimization.

Introduction:

The aim of this article is to describe how to use Elphel cameras with GStreamer, from a simple pipeline to a complex Python software integration using OpenCV and other optimized video processing blocks. All the examples on this page are Free Software and are available as templates for your own application. Most of the described and linked software is available under GNU GPL, GNU LGPL and BSD-like licenses.

I demonstrate the use of the GStreamer framework with the Elphel NC353L camera series, but most of the examples can be used with (or without) any camera by replacing the RTSP source with a v4l2, dv, gnomevfs, videotest, gltest or file source.

The GStreamer framework itself, like most of its modules, is available under the GNU LGPL license, thus it is perfectly suitable for both Free Software and proprietary product integration.

About GStreamer:

GStreamer is a multimedia framework based on a pipeline concept. Designed to be cross-platform, it is known to work on GNU/Linux (x86, PowerPC and ARM), Android (OMAP3), Solaris (Intel and SPARC), Mac OS X and Microsoft Windows. GStreamer has bindings for programming languages like Python, C++, Perl, GNU Guile and Ruby. GStreamer is free software, licensed under the GNU Lesser General Public License.

GStreamer is widely used by various corporations (Nokia, Motorola, Texas Instruments, Freescale, Google, and many more), Free Software & Open Source communities and has become a very powerful cross-platform multimedia framework.

GStreamer uses a plugin architecture in which most of GStreamer’s functionality is implemented as shared libraries. GStreamer’s base functionality contains functions for registering and loading plug-ins and provides the base classes. Plugin libraries are dynamically loaded to support a wide spectrum of codecs, container formats, input/output drivers and effects.

Plug-ins can be installed semi-automatically when they are first needed. For that purpose distributions can register a back-end that resolves feature-descriptions to package-names.

Camera, PC hardware and software config:

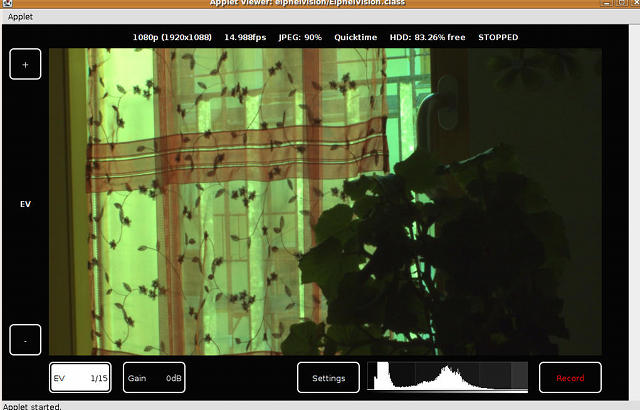

For the scope of this article, and in order to simplify GST pipelines and software dependencies, we chose to limit the camera resolution to 1920×1088 (FullHD) at 25 FPS, with auto-exposure at a maximum exposure time of 39 ms. The configuration was made once using camvc (the standard camera control interface) and recorded as the default configuration using parsedit.php.

The hardware I used in my tests is my daily-use notebook, a MacBook Pro 3.1 running the 64-bit version of Ubuntu 9.04 GNU/Linux, which is not a particularly powerful machine nowadays.

Most of the video software available in Ubuntu 9.04 is compatible with Elphel cameras “out of the box”, but limited to FullHD resolution. A few software modules and libraries need to be patched to be able to fully benefit from Elphel’s high resolution.

You can also install GStreamer from the Launchpad GStreamer dev PPA.

For embedded devices and DSP related tests I used a BeagleBoard running the Ångström distribution, connected to an Elphel camera via an Ethernet network.

GST pipeline:

GStreamer is a library that can be included in your own software, but the gst-launch program allows quick and easy prototyping. Used this way, you do not fully benefit from the flexibility of GStreamer, but it makes it easy to prototype a video processing pipeline.

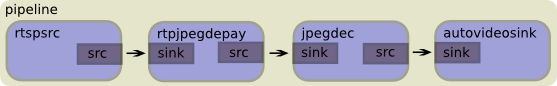

The simplest GST pipeline:

gst-launch rtspsrc location=rtsp://192.168.0.9:554 ! decodebin ! autovideosink

A more stable version: latency is set to 100 ms and the depay/decode operations are specified explicitly:

gst-launch rtspsrc location=rtsp://192.168.0.9:554 latency=100 ! rtpjpegdepay ! jpegdec ! autovideosink

Save the stream to an MJPEG/Matroska video file:

gst-launch -e rtspsrc location=rtsp://192.168.0.9:554 latency=100 ! rtpjpegdepay ! matroskamux ! filesink location=variable_fps_test.mkv

Matroska provides time-coding support and makes it possible to record variable frame rate MJPEG video. Unfortunately only GStreamer was able to play such files.

The “-e” option on the gst-launch command line forces EOS on the sources before shutting the pipeline down. (Useful when you write to files and want to shut down by killing gst-launch with CTRL+C or with the kill command.)

Of course we can re-encode to a 90 FPS video, duplicating frames when necessary to maintain the time-line:

gst-launch filesrc location=variable_fps_test.mkv ! decodebin ! videorate ! x264enc ! mp4mux ! filesink location=variable_fps_test.mp4
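The idea of duplicating frames to keep the time-line can be illustrated with a small Python model. This is only a simplified sketch of the principle, not the algorithm used by the videorate element; the function name and the millisecond timestamps are assumptions made for the illustration.

```python
def to_constant_rate(timestamps_ms, period_ms):
    """Resample a variable-rate frame sequence to a constant rate.

    timestamps_ms: sorted capture times of the input frames (integers, ms).
    period_ms: duration of one output frame (must be > 0).
    Returns, for every output tick, the index of the newest input frame
    captured at or before that tick. A repeated index means the frame is
    duplicated; a skipped index means the frame is dropped.
    """
    if not timestamps_ms:
        return []
    out = []
    src = 0
    tick = timestamps_ms[0]
    while tick <= timestamps_ms[-1]:
        # Advance to the newest frame that already exists at this tick.
        while src + 1 < len(timestamps_ms) and timestamps_ms[src + 1] <= tick:
            src += 1
        out.append(src)
        tick += period_ms
    return out


# Three frames with a gap between the second and the third one,
# resampled to a 40 ms period (25 FPS): frame 1 is shown three times.
print(to_constant_rate([0, 40, 160], 40))
```

Integer timestamps are used on purpose so that the tick arithmetic stays exact.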

Transcode the live stream to Dirac and record it to a file. Careful: you need a few pretty powerful CPUs.

gst-launch -e rtspsrc location=rtsp://192.168.0.9:554 latency=200 ! rtpjpegdepay ! jpegdec ! ffmpegcolorspace ! schroenc ! filesink location="test.drc"

To read the recorded file:

gst-launch filesrc location=test.drc ! schrodec ! glimagesink

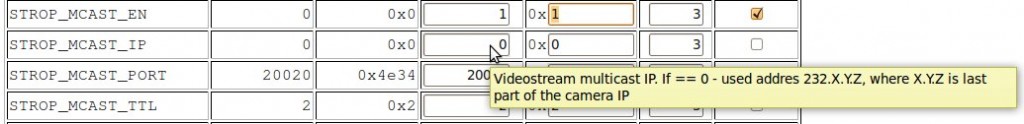

Unicast / Multicast:

The Elphel camera streamer streams over unicast by default, but can easily be switched to multicast. In order to do so, open the camera web interface, choose “Parameter editor”, select “Streamer settings” and switch STROP_MCAST_EN from 0 to 1.

You can optionally set the multicast address.

Recent GStreamer versions will automatically detect the type of the stream, but you can also specify the protocol manually:

gst-launch rtspsrc location=rtsp://192.168.0.9:554 protocols=0x00000002 ! decodebin ! autovideosink
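The value passed to protocols is a bit mask. The following Python sketch shows how the hexadecimal values used in this article can be composed. The bit assignments (1 for UDP unicast, 2 for UDP multicast, 4 for TCP) reflect my understanding of the rtspsrc flags and should be checked with gst-inspect rtspsrc on your installation; the class and function names are made up for the illustration.

```python
from enum import IntFlag


class LowerTransport(IntFlag):
    """Bit values assumed for the rtspsrc `protocols` property."""
    UDP_UNICAST = 0x1
    UDP_MULTICAST = 0x2
    TCP = 0x4


def protocols_arg(flags):
    """Format a flag combination the way the pipelines in this article do."""
    return f"protocols=0x{int(flags):08x}"


print(protocols_arg(LowerTransport.UDP_MULTICAST))
print(protocols_arg(LowerTransport.UDP_UNICAST | LowerTransport.TCP))
```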

You also need to have a route for the multicast address. If the camera is connected to your LAN and you have a default route on this Ethernet device, you will automatically be routed to the multicast address. If your default route is on another network device such as a wireless network card, or if you have a complex network setup, you need to declare a static route to the multicast address. Let’s say your wireless is wlan0 and your network connected to the camera is on eth0:

route add -net 232.0.0.0 netmask 255.0.0.0 dev eth0
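To check whether the multicast address configured in the camera is covered by such a route, a quick test with Python's standard ipaddress module can help. This is a small sketch: the addresses tested in the usage lines are arbitrary examples, not values taken from a camera configuration.

```python
import ipaddress

# 232.0.0.0 with netmask 255.0.0.0 is 232.0.0.0/8 in CIDR notation.
ROUTE = "232.0.0.0/255.0.0.0"


def route_covers(address, network=ROUTE):
    """Return True if `address` is a multicast address inside `network`."""
    ip = ipaddress.ip_address(address)
    net = ipaddress.ip_network(network)
    return ip.is_multicast and ip in net


print(ipaddress.ip_network(ROUTE))   # the route in CIDR form
print(route_covers("232.1.2.3"))     # example address inside the route
print(route_covers("239.0.0.1"))     # multicast, but outside 232.0.0.0/8
```

If the camera uses a multicast address outside 232.0.0.0/8, the route has to be adapted accordingly.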

JP4 custom image format plug-in:

JP4 is an image format developed in-house by Elphel.

The FPGA code inside the Elphel camera uses a very simple algorithm to calculate YCbCr from the Bayer pixels: it just uses 3×3 pixel blocks. This algorithm is also time consuming, and with the 5 MPix sensor the debayering process became a bottleneck. So we added a special JP4 mode that bypasses the demosaicing in the FPGA and provides an image with pixels rearranged in 16×16 macro-blocks, so that each Bayer color is separated into an individual 8×8 sub-block. To save space, this image is then encoded as monochrome.

You may find more information about the different JP4 modes in Andrey Filippov’s last Linux for devices article.

Recently UbiCast, a small FLOSS company specialized in intuitive and integrated solutions to create and share high value video content, contributed a JP4 debayer plug-in for GStreamer. This plug-in implements de-blocking and several debayer algorithms available from libdc1394. Currently only JP46 mode is supported, but the structure is in place to create support for other modes and to try different kinds of optimizations.

Realtime MJP4 video processing for low quality preview:

gst-launch-0.10 rtspsrc location=rtsp://192.168.0.9:554 ! rtpjpegdepay ! jpegdec ! queue ! jp462bayer ! queue ! bayer2rgb2 ! queue ! ffmpegcolorspace ! videorate ! "video/x-raw-yuv, format=(fourcc)I420, width=(int)1920, height=(int)1088, framerate=(fraction)25/1" ! autovideosink

A “queue” creates a new thread so it can push buffers independently from the elements upstream.
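The decoupling effect of a queue can be pictured with plain Python threads. This is an analogy only, not GStreamer code: the upstream loop pushes buffers into a bounded queue and a separate thread consumes them, so the upstream side only blocks when the queue is full. All names are invented for the illustration.

```python
import queue
import threading


def run_stage(upstream_buffers, process, max_buffers=4):
    """Push buffers through a bounded queue to a downstream worker thread."""
    q = queue.Queue(maxsize=max_buffers)
    results = []
    done = object()  # sentinel playing the role of end-of-stream

    def downstream():
        while True:
            item = q.get()
            if item is done:
                break
            results.append(process(item))

    worker = threading.Thread(target=downstream)
    worker.start()
    for buf in upstream_buffers:
        q.put(buf)  # blocks only while the queue is full
    q.put(done)
    worker.join()
    return results


print(run_stage(range(5), lambda b: b * 2))
```

With a single consumer thread the buffers keep their order, which matches what one expects from a linear pipeline branch.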

JP4 live 1/8 resolution preview with low quality debayer while recording in JP4 for high quality post-processing.

gst-launch -e rtspsrc location=rtsp://192.168.0.9:554 latency=100 ! rtpjpegdepay ! tee name=tee1 tee1. ! queue ! matroskamux ! filesink location=jp46test.mkv tee1. ! queue ! jpegdec ! videoscale ! video/x-raw-yuv,width=384,height=218 ! queue ! jp462bayer ! queue ! bayer2rgb2 ! ffmpegcolorspace ! autovideosink

The de-block algorithm was later optimized by Konstantin Kim & Luc Deschenaux.

Embedded devices, DSP, OpenGL optimizations:

GStreamer is also available on embedded devices. For my tests, I used a BeagleBoard running the Ångström distribution. GStreamer on Android is also on the way.

Ångström distribution is an OpenEmbedded based GNU/Linux distribution that targets embedded devices and has support for GStreamer, OpenMax and TI DMAI plugins.

Ångström has NEON accelerated GStreamer FFMPEG and OpenMax IL modules (not using DSP) and a GStreamer Openmax plugin (using DSP).

With GstOpenMAX, the OpenMAX IL (Integration Layer) API can be used in the GNU/Linux GStreamer framework to enable access to multimedia components, including HW acceleration on platforms that provide it.

OpenMAX IL is an industry standard that provides an abstraction layer for computer graphics, video, and sound routines. This project is a collaboration between Nokia, NXP, Collabora, STMicroelectronics, Texas Instruments, and the open source community.

If you have an OMAP or DaVinci based board such as http://www.beagleboard.org/ , http://leopardboard.org/ , http://graphics.stanford.edu/projects/camera-2.0/, the upcoming Nokia N900 Internet tablet or the future Elphel NC373L you can use OpenMAX or TI DMAI plugins for GStreamer. https://gstreamer.ti.com/, http://wiki.davincidsp.com/index.php?title=GStreamer, http://wiki.davincidsp.com/index.php?title=GstTIPlugin_Elements.

Here is an example of TI based hardware mpeg4 encoding:

gst-launch rtspsrc location=rtsp://192.168.0.9:554 latency=100 ! rtpjpegdepay ! jpegdec ! TIVidenc codecName=mpeg4enc engineName=encode contiguousInputFrame=0 genTimeStamps=1 ! rtpmp4vpay ! udpsink port=5000

It is important to note that OMAP and DaVinci also have OpenGL ES (Embedded Standard) support.

OpenGL & GLSL

You can implement algorithms on the GPU via OpenGL and GLSL, the OpenGL shader language. OpenGL and GLSL are not limited to nVidia GPUs (G80 and newer) like CUDA is. This helps to bring your optimizations to a wider range of GPUs from different manufacturers.

Dirac/Schrödinger, for example, has an OpenGL and GLSL optimization for GStreamer; the project is available on Dirac GSoC 2008.

GStreamer has a very powerful GL plug-in. It allows you to transfer data between CPU and GPU and has a plugin structure for writing custom shaders for GPU based processing. You can write your own plug-ins, examples are available on the gst-plugins-gl project.

Let’s play a bit with OpenGL filters:

gst-launch rtspsrc location=rtsp://192.168.0.9:554 latency=100 protocols=0x00000001 ! rtpjpegdepay ! jpegdec ! glupload ! gleffects effect=13 ! glimagesink

Here, we load the decoded stream into an OpenGL compatible graphics card and apply a GL filter.

A list of effects can be obtained with gst-inspect:

gst-inspect gleffects

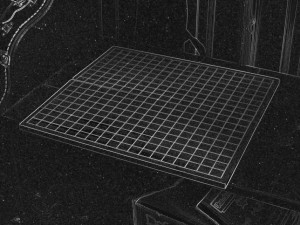

Sobel filter using OpenGL:

gst-launch filesrc location=pic1.jpg ! decodebin ! glupload ! glfiltersobel ! gldownload ! ffmpegcolorspace ! jpegenc ! filesink location=pic1_sobel.jpg

Kifu generates a Go game record in standard game format from a video stream, using image change detection and projective geometry. Part of the pipeline can be optimized on the GPU. Here we demonstrate edge detection using a Sobel filter on the GPU. For more information about the Kifu project: http://wiki.elphel.com/index.php?title=Kifu:_Go_game_record_(kifu)_generator & http://sourceforge.net/projects/kifu/
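For reference, here is what a Sobel edge detector computes, written as a slow but readable CPU version in pure Python. It is the textbook operator with the |Gx| + |Gy| magnitude; the exact kernel and normalization used by glfiltersobel are not described in this article, so do not expect identical pixel values.

```python
def sobel(image):
    """Return |Gx| + |Gy| for each interior pixel of a 2D list of numbers.

    Border pixels are left at 0 because the 3x3 window does not fit there.
    """
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal gradient: right column minus left column.
            gx = (image[y - 1][x + 1] + 2 * image[y][x + 1] + image[y + 1][x + 1]
                  - image[y - 1][x - 1] - 2 * image[y][x - 1] - image[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row.
            gy = (image[y + 1][x - 1] + 2 * image[y + 1][x] + image[y + 1][x + 1]
                  - image[y - 1][x - 1] - 2 * image[y - 1][x] - image[y - 1][x + 1])
            out[y][x] = abs(gx) + abs(gy)
    return out


# A vertical edge between the second and third column.
edge = [[0, 0, 10, 10] for _ in range(4)]
for row in sobel(edge):
    print(row)
```

On the GPU the same 3×3 neighbourhood is evaluated for every pixel in parallel, which is why this filter is a good candidate for a shader.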

Same thing, but on live video:

gst-launch rtspsrc location=rtsp://192.168.0.9:554 ! rtpjpegdepay ! jpegdec ! glupload ! glfiltersobel ! glimagesink

To see all OpenGL filters:

gst-inspect | grep opengl

While I was drafting this article, Luc Deschenaux wrote a new GST-GL plug-in that loads and applies a GLSL script. Here are a few simple examples of the power of GLSL.

Perspective transform by Luc Deschenaux for KIFU :

gst-launch rtspsrc location=rtsp://192.168.0.9:554 ! rtpjpegdepay ! jpegdec ! glupload ! glshader location=perspectiveTransform.fs vars="mat3x3 H=mat3x3(444.158095, 589.378823, 55.000000, -222.990849, 100.110763, 425.000000, 0.303285, -0.171750, 1.0);" ! glimagesink
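A perspective transform maps a point through a 3×3 matrix in homogeneous coordinates and then divides by the third component. The Python sketch below shows that arithmetic. The source of perspectiveTransform.fs is not reproduced in this article, so the way the nine numbers are laid out is an assumption: here they are read as three rows in the order they are written, while GLSL matrix constructors fill columns first, so the shader may well use the transposed layout.

```python
def apply_homography(h, x, y):
    """Map the point (x, y) through a 3x3 matrix given as a list of rows."""
    xh = h[0][0] * x + h[0][1] * y + h[0][2]
    yh = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    # Perspective division brings the point back to 2D.
    return xh / w, yh / w


# The nine values from the pipeline above, read row by row (assumption).
H = [
    [444.158095, 589.378823, 55.0],
    [-222.990849, 100.110763, 425.0],
    [0.303285, -0.171750, 1.0],
]

print(apply_homography(H, 0, 0))
```

With this row layout the origin is sent to the translation part of the matrix, which is a convenient sanity check when experimenting with the coefficients.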

GLSL de-block and debayer are on the way, and many different GLSL optimizations are possible to implement. Here is an example of GLSL de-block:

gst-launch rtspsrc location=rtsp://192.168.0.9:554 ! rtpjpegdepay ! jpegdec ! glupload ! glshader location=deblock.fs preset=deblock.init ! glimagesink

And here is the GLSL de-block code (deblock.fs):

#extension GL_ARB_texture_rectangle : enable

uniform sampler2DRect tex;
uniform float index[16];

void main() {
    vec2 texcoord = gl_TexCoord[0].xy;
    // position of this pixel inside its 16x16 macro-block
    ivec2 blockpos = ivec2(texcoord) & ivec2(15,15);
    // per-axis offset to the source pixel, taken from the lookup table
    vec2 offset = vec2(index[blockpos.x],index[blockpos.y]);
    gl_FragColor = texture2DRect(tex, texcoord + offset );
}

And the initialization GLSL code (deblock.init):

float index[16] = float[16](0.0, 7.0, -1.0, 6.0, -2.0, 5.0, -3.0, 4.0, -4.0, 3.0, -5.0, 2.0, -6.0, 1.0, -7.0, 0.0);
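To see what this lookup table does, here is the same operation mirrored in pure Python, together with its inverse. It is a sketch derived only from the shader and the index table above, assuming image dimensions that are multiples of 16; the function names are mine.

```python
INDEX = [0, 7, -1, 6, -2, 5, -3, 4, -4, 3, -5, 2, -6, 1, -7, 0]


def deblock(image):
    """Rebuild the Bayer mosaic: same lookup as deblock.fs, on a 2D list."""
    h, w = len(image), len(image[0])
    return [[image[y + INDEX[y & 15]][x + INDEX[x & 15]] for x in range(w)]
            for y in range(h)]


def block(image):
    """Inverse operation: gather each Bayer color into its own 8x8 sub-block."""
    h, w = len(image), len(image[0])
    out = [[None] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            out[y + INDEX[y & 15]][x + INDEX[x & 15]] = image[y][x]
    return out


# Label every pixel of one macro-block with its (row, column) position.
macro = [[(y, x) for x in range(16)] for y in range(16)]

# Source columns read by the first output row: the two 8-pixel halves
# of the macro-block are interleaved again.
print([col for (_, col) in deblock(macro)[0]])
```

Output column 1 reads source column 8, output column 2 reads source column 1, and so on: even output positions come from the first 8×8 half and odd positions from the second half, in both directions.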

GST-OpenCV

OpenCV is a computer vision library originally developed by Intel. It is available for commercial and research use under the open source BSD license. The library is cross-platform. It focuses mainly on real-time image processing and can be accelerated with Intel’s Integrated Performance Primitives or with DSP, FPGA or GPU optimized routines.

OpenCV’s application areas include:

- 2D and 3D feature toolkits

- Egomotion estimation

- Face Recognition

- Gesture Recognition

- Human-Computer Interface (HCI)

- Mobile robotics

- Motion Understanding

- Object Identification

- Segmentation and Recognition

- Stereopsis (stereo vision): depth perception from two cameras

- Structure from motion (SFM)

- Motion Tracking

Face detection and blurring example:

gst-launch rtspsrc location=rtsp://192.168.0.9:554 latency=200 ! rtpjpegdepay ! jpegdec ! videoscale ! video/x-raw-yuv,width=480,height=272 ! videorate ! capsfilter caps="video/x-raw-yuv,width=480,height=272,framerate=(fraction)12/1" ! queue ! ffmpegcolorspace ! faceblur ! autovideosink

To reduce CPU load we resize the FullHD stream to a smaller resolution, limit FPS to 12 and process it with “faceblur” from the GST-OpenCV plugin.
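The reduced sizes used in these pipelines are simply the FullHD dimensions divided by an integer and rounded. A tiny Python helper (hypothetical, written for this article's caps format) makes the arithmetic explicit:

```python
def scaled_caps(width, height, divisor, framerate=None):
    """Build a video/x-raw-yuv caps string for a 1/divisor size preview."""
    w = round(width / divisor)
    h = round(height / divisor)
    caps = f"video/x-raw-yuv,width={w},height={h}"
    if framerate is not None:
        caps += f",framerate=(fraction){framerate}/1"
    return caps


print(scaled_caps(1920, 1088, 4, framerate=12))  # 1/4 size used for faceblur
print(scaled_caps(1920, 1088, 5))                # 1/5 size: 1088 / 5 = 217.6
```

Dividing 1088 by 5 does not give an integer, which is why the 1/5 resolution pipelines use a height of 218.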

Two GL outputs, one FullHD output, one 1/5 resolution with face detection (gst-opencv) output:

gst-launch rtspsrc location=rtsp://192.168.0.9:554 latency=100 protocols=0x00000001 ! rtpjpegdepay ! jpegdec ! tee name=tee1 tee1. ! queue ! glimagesink tee1. ! queue ! videoscale ! video/x-raw-yuv,width=384,height=218 ! ffmpegcolorspace ! facedetect ! glimagesink

GL output a FullHD stream with 1/5 resolution picture-in-picture with face detection (gst-opencv):

gst-launch videomixer name=mix ! ffmpegcolorspace ! ximagesink rtspsrc location=rtsp://192.168.0.9:554 latency=100 protocols=0x00000001 ! queue ! rtpjpegdepay ! jpegdec ! videorate ! capsfilter caps="video/x-raw-yuv,width=1920,height=1088,framerate=(fraction)25/1" ! videoscale ! capsfilter caps="video/x-raw-yuv,width=900,height=506,framerate=(fraction)25/1" ! tee name=tee1 pull-mode=1 tee1. ! queue ! videoscale ! video/x-raw-yuv,width=320,height=176,framerate=25/1 ! ffmpegcolorspace ! queue ! facedetect ! queue ! ffmpegcolorspace ! videobox border-alpha=0 alpha=0.5 top=-330 left=-580 ! mix. tee1. ! queue ! ffmpegcolorspace ! mix.

Here is a list of plug-ins currently available with gst-opencv: edgedetect, faceblur, facedetect, pyramidsegment, templatematch. It is easy to implement any OpenCV function as gst plugin using the existing modules as reference.

For more info about OpenCV please visit http://opencvlibrary.sourceforge.net/

Implementing or porting a custom plugin to GStreamer:

First of all, fdsrc and fdsink can be used for easy prototyping. You can connect a GST pipeline to read and write data from/to a Unix file descriptor.

echo "Hello GStreamer" | gst-launch -v fdsrc ! fakesink dump=true

The appsrc and appsink elements can be used by applications to insert data into a GStreamer pipeline and to retrieve data from it. Unlike most GStreamer elements, appsrc and appsink provide external API functions.

Documentation is available on the GStreamer web site under the how-to “Building a Plugin”.

You can also use examples from gst-plugins-gl, gst-openmax and gst-opencv projects to build custom processing plug-ins.

Many projects such as GstAVSynth (AviSynth plug-in wrapper for GStreamer) are currently porting plug-ins from other software to GStreamer.

GStreamer plug-ins are implemented as shared libraries, so it’s easy to distribute them in a binary form without requiring the user to recompile anything.

Elphel PHP API:

The Elphel 353 camera series has a very powerful PHP API that allows you to create custom camera control applications in PHP.

This API makes it possible to take the latency/pipe-lining in the camera into account and to use HDR tricks and other time-critical techniques.

Here is a very simple PHP code to read some of the sensor parameters:

<?php
// display current camera exposure time (µs / 1000 = ms)
echo "<b>Exposure Time: </b>".elphel_get_P_value(ELPHEL_EXPOS)/1000 ." ms<br/>";
// display current camera window of interest width (in pixels)
echo "<b>Image Width: </b>".elphel_get_P_value(ELPHEL_WOI_WIDTH)."<br/>";
// display current camera window of interest height (in pixels)
echo "<b>Image Height: </b>".elphel_get_P_value(ELPHEL_WOI_HEIGHT)."<br/>";
?>

setparam.php is a simple example to set sensor parameters from a PHP script.

snapfull.php is a more complex example. It takes a full-frame snapshot and returns to previous resolution with minimal delay.

More details on how to use PHP in Elphel cameras can be found on Elphel wiki.

The ElphelVision software from the Apertus Open Source Cinema project demonstrates a nice integration with Elphel. Developed as a Java applet using MPlayer to display the live video stream, ElphelVision communicates with custom PHP scripts running on the camera to set and read sensor/camera parameters.

See also: PHP Examples, Elphel Software Kit, KDevelop, Str

Python and gstmanager integration:

Python is an Open Source programming language. It is highly portable and has bindings for GStreamer and many other frameworks.

Gstmanager is based on the GStreamer Python bindings. It offers the following helpers through the PipelineManager class:

- launch from pipeline description string (example)

- states wrapping

- position/seeking wrapping

- manual EOS emission

- caps parsing

- element message proxy (to a very easy-to-use event system) (example)

- negotiated caps reporting (example)

The code with examples is available on http://code.google.com/p/gstmanager/source/browse/trunk/
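For readers who want to start from Python without gstmanager, launching a pipeline from a description string can be sketched as follows. Note the assumptions: this sketch uses the PyGObject bindings for GStreamer 1.0, which are newer than the 0.10 series used for the examples in this article, and it does not use the gstmanager PipelineManager API at all. In GStreamer 1.0 the ffmpegcolorspace element is replaced by videoconvert. The function names are mine.

```python
def build_description(location, latency_ms=100):
    """Build a gst-launch style description for an Elphel MJPEG RTSP stream."""
    elements = [
        f"rtspsrc location={location} latency={latency_ms}",
        "rtpjpegdepay",
        "jpegdec",
        "videoconvert",  # GStreamer 1.0 name; 0.10 used ffmpegcolorspace
        "autovideosink",
    ]
    return " ! ".join(elements)


def run_pipeline(description):
    """Play a pipeline until EOS or an error (needs PyGObject and GStreamer 1.0)."""
    # Imported here so that build_description() works without GStreamer installed.
    import gi
    gi.require_version("Gst", "1.0")
    from gi.repository import Gst

    Gst.init(None)
    pipeline = Gst.parse_launch(description)
    pipeline.set_state(Gst.State.PLAYING)
    bus = pipeline.get_bus()
    try:
        # Block until the stream ends or an element reports an error.
        bus.timed_pop_filtered(
            Gst.CLOCK_TIME_NONE,
            Gst.MessageType.EOS | Gst.MessageType.ERROR,
        )
    finally:
        pipeline.set_state(Gst.State.NULL)


print(build_description("rtsp://192.168.0.9:554"))
```

Calling run_pipeline(build_description("rtsp://192.168.0.9:554")) would then display the live stream, provided the camera is reachable at that address.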

Conclusion:

Using the described pieces of free software you can easily design an application with custom fine tuned camera controls, software or hardware accelerated image processing, streaming and recording.

Many optimizations are still available for further possible implementations using general purpose CPU, DSP, FPGA and GPU.

Interesting links:

High-quality Malvar-He-Cutler Bayer demosaicing filter using GPU in OpenGL: http://graphics.cs.williams.edu/papers/BayerJGT09/

A nice collection of high-resolution image processing tools: http://elynxlab.free.fr/en/index.html

Central resource for General-Purpose computation on Graphics Processing Units: http://gpgpu.org/

Graphic environment to help the education, training, implementation and management of vision systems (based on OpenCV): http://s2i.das.ufsc.br/harpia/en/home.html

I’d like to thank especially:

Folks from #gstreamer channel who helped a lot during my gstreamer tests.

Ross Finlayson from http://live555.com/ and the MPlayer team who supported Elphel since the first days. (Elphel NC313L model)

Michael Niedermayer who patched libswscale to support Elphel’s high resolution video. Now MPlayer, VLC, Blender, GStreamer and other software built on top of FFMPEG can be used to rescale high resolution videos (up to 5012×5012).

Konstantin Kim for the first de-block/debayer implementation in MPlayer and AviSynth, for his matlab scripts and for the support he offered me while I was writing this paper.

UbiCast company and especially Florent Thiery and Anthony Violo for their contribution to the JP4 support for GStreamer and for their gstmanager.

Sebastian Pichelhofer and other folks from Apertus Open Source Cinema project for PHP API examples.

Luc Deschenaux for introducing me to GStreamer; for the rtspsrc, rtpjpegdepay and gst-plugins-gl patches; the emerging glshader plugin and GLSL scripts. And all the good rum (which is to blame if I forgot to mention something…)

gst-launch rtspsrc location=rtsp://192.168.0.9:554 ! rtpjpegdepay ! jpegdec ! glupload ! glfilterglsl location=perspectiveTransform.glsl coordstype=1 ! glimagesink

Is this some kind of offline processing of the image?

Why offline? GStreamer can be used for live or post processing.

Hi,

I found really interesting this post, because I’m creating an app that use opencv and elphel 353 camera

Unfortunately to decode the rtsp stream, I need to get it from camera with mencoder and AVLD (videoloopback) but these are not very stable. I wonder what is the best way to connect to the camera to get its frames for image processing (opencv)?

Esteban, do you need just individual frames for processing? Then you do not need the streamer, you can get them from the image server (imgsrv) running in the camera on port 8081. It has embedded help (displayed when you just open http://camera_ip:8081).

Andrey

Esteban,

AVLD and different videoloopback devices are good for prototyping or for using with proprietary software where you can not implement RTSP support. OpenCV can now be compiled with FFMPEG or GStreamer. Both should support RTSP video. I have never tried it yet. (You need the CVS version of OpenCV)

Regards,

Alexandre

appsink is another option. Not very flexible, but may do the thing for your app.

Alexandre

Hi, I work with svn OpenCV 2.1 version and ffmpeg support, but when I call a function to open a capture from the elphel 353 camera, It says me:

[rtsp @ 0xa65d420]MAX_READ_SIZE:5000000 reached

[rtsp @ 0xa65d420]Estimating duration from bitrate, this may be inaccurate

Input #0, rtsp, from ‘rtsp://192.168.1.200:554’:

Duration: N/A, bitrate: N/A

Stream #0.0: Video: mjpeg, 90k tbr, 90k tbn, 90k tbc

swScaler: Unknown format is not supported as input pixel format

Cannot get resampling context

Unfortunately with another generic axis ip cam, it works. Finally, I have not tried gstreamer+opencv yet.

br

Esteban

[rtsp @ 0xa65d420]MAX_READ_SIZE:5000000 reached

– try to reduce the resolution. You have a limitation on RTP max packet size. You can also recompile after changing this value.

Alexandre

Hi, I tried setting -probesize option in ffmpeg with a high value but either I have the same results :S

libavutil 50.14. 0 / 50.14. 0

libavcodec 52.66. 0 / 52.66. 0

libavformat 52.61. 0 / 52.61. 0

libavdevice 52. 2. 0 / 52. 2. 0

libswscale 0.10. 0 / 0.10. 0

libpostproc 51. 2. 0 / 51. 2. 0

[rtsp @ 0xa28f420]MAX_READ_SIZE:500000000 reached

[rtsp @ 0xa28f420]Estimating duration from bitrate, this may be inaccurate

Input #0, rtsp, from ‘rtsp://192.168.1.200’:

Duration: N/A, bitrate: N/A

Stream #0.0: Video: mjpeg, 90k tbr, 90k tbn, 90k tbc

File ‘2.mov’ already exists. Overwrite ? [y/N] y

swScaler: Unknown format is not supported as input pixel format

Cannot get resampling context

I think that is a problem with the pixel format of the camera that I cant figure out yet

Hi Alexandre,

Very interesting article – thanks. The GlShader plugin seems a very useful addition to GStreamer, and it’s something that would help with an optical correlation project I’m working on.

I’ve been looking through the current GStreamer codebase and Luc’s plugin doesn’t seem to have made it in there yet.

Could you point me in the right direction to obtain this please?

Thanks!

Alex (ajlennon at dynamicdevices.co.uk)

Hi Alex!

The patch is not yet included 🙁 it is available here:

https://bugzilla.gnome.org/show_bug.cgi?id=600195

Please, do not hesitate to test it, contribute and push for inclusion into GStreamer.

Regards,

Alexandre