IMU and GPS Integration with Elphel Cameras

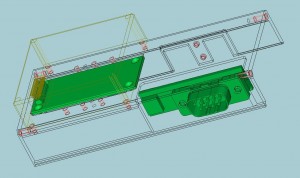

CAD rendering of the Analog Devices ADIS-16375 inertial sensor, the 103695 interface board and the 103696 serial GPS adapter board attached to the top cover of the NC353L camera

For almost three years we have been able to geotag images and video using an external GPS and an optional accelerometer/compass module that can be mounted inside the camera. Information from both sensors is included in the Exif headers of the images and video frames. The raw magnetometer and accelerometer data stored at the image frame rate has limited value: to be useful for orientation tracking it needs to be sampled and processed at a high rate, and for position tracking it has to be combined with the GPS measurements.

We had developed the software to receive positional data either from a Garmin GPS18x (which can be attached directly to the USB port of the camera) or from a standard NMEA 0183 compatible device through a USB-to-serial adapter. In the latter case the GPS may need a separate power supply, or a special (or modified) USB adapter that can power it from the USB bus.

When working on the Eyesis panoramic camera we realized that we needed higher precision position and orientation: from the GPS we need more than one sample per second, and we also wanted to synchronize the measurements to the timing signal available on many GPS units. Some of our users had also been asking for that option.

We started looking for a turn-key solution: a device that processes the inertial data in real time and combines it with the data from an attached GPS unit, in some cases also has a manometer to measure the atmospheric pressure (and so the altitude), and has an input for the odometer pulses from a wheel sensor on the vehicle. Such devices significantly simplify the processing on our side, but internally they run closed, proprietary software, so in the future we might have trouble adjusting the control parameters to particular operating conditions or combining the data used by such units with additional measurements specific to our case.

At the same time I realized that we do not need the position/orientation data in real time: we can just record it while acquiring images and process the measurements later, together with the images themselves (I wrote about it in an earlier post). This approach gives more flexibility in sensor selection, and we decided to start with the high-resolution 6-DOF ADIS-16375 sensor manufactured by Analog Devices: it has a 16-bit internal ADC, 330 Hz sensor bandwidth and a 2.4 kHz sample rate. The IMU adapter board is designed to support different sensors, including ones with a different power supply voltage and SPI clock rate, e.g. the ADIS-16405, which has lower resolution than the ADIS-16375 but includes magnetometers.

Sampling multiple registers at 2.4 kHz requires recording on the order of 100 kilobytes per second. The data has to be buffered by hardware so that the camera CPU does not have to service low-latency interrupts: the CPU already provides various functions related to image capturing and recording, controls the acquisition modes, and communicates with the host computer and/or with the other cameras. These requirements suggested using some of the remaining camera FPGA resources. Unlike a software implementation, this approach does not interfere with the existing functionality (until all the FPGA logic cells, registers and memory are exhausted, of course). Logged data is buffered in the FPGA on-chip FIFO memory and then transferred to the system memory using DMA. When the 353 camera was designed I connected two external DMA channel signals from the FPGA to the CPU, but so far only one channel had been used, so this was an opportunity to put the second, dormant one to useful work.

The cameras use 64-bit timestamps internally (32 bits of seconds since the epoch and 32 bits of microseconds), and such a timestamp is attached to each logged sample. The sample size is fixed at 64 bytes: 8 for the timestamp and 56 for the payload, which allows recording up to 28 of the IMU internal 16-bit registers or 14 of the 32-bit ones (the set of sampled registers is run-time programmable). When the IMU logger Verilog code had been written, simulated and tested in the camera, it was very tempting to add logging of other data, not just the IMU. When making the GPS interface board (basically a USB-to-serial adapter with GPS power supply provisions and a sync pulse input connected to an FPGA GPIO) I had already connected the RxD line to another FPGA GPIO line; two such lines are available on each extension port of the 10369 board. That gave the FPGA the capability to listen to the GPS data while the full-duplex communication between the GPS and the CPU is preserved.
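The fixed record layout can be illustrated with a short Python sketch that splits one 64-byte sample into its timestamp and register values. The little-endian byte order, the seconds word preceding the microseconds word, treating the registers as signed, and the name `decode_imu_record` are assumptions for illustration, not details of the Elphel driver; reading the channel number from the otherwise unused high byte of the microseconds word follows the description of the logged samples in this post.

```python
import struct

RECORD_SIZE = 64                             # fixed sample size
TIMESTAMP_SIZE = 8                           # 32-bit seconds + 32-bit microseconds
PAYLOAD_SIZE = RECORD_SIZE - TIMESTAMP_SIZE  # 56 bytes

def decode_imu_record(record, wide=False):
    """Split one 64-byte record into (seconds, microseconds, channel, registers).

    Assumed (hypothetical) layout: little-endian words, seconds first, the channel
    number in the top byte of the microseconds word, signed register values.
    """
    if len(record) != RECORD_SIZE:
        raise ValueError(f"expected {RECORD_SIZE} bytes, got {len(record)}")
    seconds, usec_word = struct.unpack_from("<II", record, 0)
    channel = usec_word >> 24              # high byte: logged channel number
    microseconds = usec_word & 0xFFFFFF    # values below 1,000,000 fit in 24 bits
    # The 56-byte payload holds 28 x 16-bit or 14 x 32-bit registers
    fmt = "<14i" if wide else "<28h"
    registers = struct.unpack_from(fmt, record, TIMESTAMP_SIZE)
    return seconds, microseconds, channel, registers

rec = struct.pack("<II", 1300000000, 123456) + struct.pack("<28h", *range(28))
print(decode_imu_record(rec)[:3])  # (1300000000, 123456, 0)
```

Whether a given register set should be read as 16-bit or 32-bit values depends on the run-time register selection, which is why the sketch leaves it to the caller.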

Unfortunately some NMEA 0183 sentences may be longer than the 56-byte payload of the logger (the GPS data rate is much lower than that of the IMU, so I tried to reuse the logger format optimized for the IMU), so I added some minor processing of the NMEA sentences during logging (for which I was criticized on the #elphel IRC channel). The current code can recognize up to four different $GPxx sentences (according to a run-time specified pattern) and extract data according to a format provided for each sentence: each field can be either

- a string of 7-bit characters (the last byte is stored OR-ed with 0x80); such fields are usually single-character ones like “E” for East, or

- a number, which may include a decimal point and a “-” sign. Such data is encoded four bits per character (two characters per byte), with 0xf appended as the field terminator.
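The two field encodings can be sketched in Python as a stream of 4-bit values. Only the 0xF number terminator and the 0x80 end-of-string marker come from the description above; the nibble codes chosen for “.” (0xA) and “-” (0xB), storing string bytes high nibble first, rejecting empty string fields, and all function names are hypothetical.

```python
# Hypothetical nibble codes for numeric characters; only 0xF is from the text.
DIGIT_CODES = {**{str(d): d for d in range(10)}, ".": 0xA, "-": 0xB}
CODE_DIGITS = {v: k for k, v in DIGIT_CODES.items()}
TERMINATOR = 0xF

def encode_number(text):
    """Numeric field: one 4-bit code per character, then the 0xF terminator."""
    return [DIGIT_CODES[c] for c in text] + [TERMINATOR]

def encode_string(text):
    """String field: 7-bit characters, the last one OR-ed with 0x80.
    Returned as nibbles (high first) so it can mix with numeric fields."""
    if not text:
        raise ValueError("empty string fields are not handled in this sketch")
    data = [ord(c) & 0x7F for c in text]   # keep 7 bits per character
    data[-1] |= 0x80                       # mark the end of the string
    nibbles = []
    for byte in data:
        nibbles += [byte >> 4, byte & 0xF]
    return nibbles

def decode_fields(nibbles, kinds):
    """Decode fields using a per-sentence format: 'n' = number, 's' = string."""
    fields, pos = [], 0
    for kind in kinds:
        out = []
        if kind == "n":
            while nibbles[pos] != TERMINATOR:
                out.append(CODE_DIGITS[nibbles[pos]])
                pos += 1
            pos += 1                       # skip the terminator
        else:
            while True:
                byte = (nibbles[pos] << 4) | nibbles[pos + 1]
                pos += 2
                out.append(chr(byte & 0x7F))
                if byte & 0x80:            # last character of the string
                    break
        fields.append("".join(out))
    return fields

nibbles = (encode_number("4807.038") + encode_string("N")
           + encode_number("01131.000") + encode_string("E"))
print(len(nibbles), decode_fields(nibbles, "nsns"))  # 23 ['4807.038', 'N', '01131.000', 'E']
```

In this example the 22 raw characters of `4807.038,N,01131.000,E` shrink to 23 nibbles (11.5 bytes), because digits take half a byte each and the terminators replace the commas.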

This compressed format allows logging any sentence. The full sample includes 8 bytes of timestamp (the normally unused high byte of the microseconds word, which only needs 20 bits for values up to 999999, now stores the logged channel number), followed by a half-byte with the sentence number (one of four), followed by the actual sentence data: up to 55.5 bytes, which corresponds to about 100 characters of the raw NMEA.
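Putting the pieces of one GPS sample together can be sketched as follows, taking the already-encoded field nibbles as input. The little-endian timestamp words, placing the sentence number in the high nibble of the first payload byte, zero-padding the unused nibbles, the channel value used in the example, and the name `pack_gps_record` are assumptions rather than the actual FPGA behavior.

```python
import struct

PAYLOAD_NIBBLES = 56 * 2  # 56-byte payload = 112 half-bytes

def pack_gps_record(seconds, microseconds, channel, sentence_index, data_nibbles):
    """Build one 64-byte record: timestamp, sentence-number nibble, sentence data.

    Hypothetical layout: little-endian timestamp words, high nibble first,
    unused nibbles padded with zeros.
    """
    if not 0 <= sentence_index < 4:
        raise ValueError("only four sentence patterns can be recognized")
    if not 0 <= microseconds < 1_000_000 or not 0 <= channel < 256:
        raise ValueError("bad microseconds value or channel number")
    nibbles = [sentence_index] + list(data_nibbles)
    if any(not 0 <= n <= 0xF for n in nibbles):
        raise ValueError("every element must be a 4-bit value")
    if len(nibbles) > PAYLOAD_NIBBLES:
        raise ValueError("sentence data exceeds 55.5 bytes")
    nibbles += [0] * (PAYLOAD_NIBBLES - len(nibbles))
    payload = bytes((hi << 4) | lo for hi, lo in zip(nibbles[0::2], nibbles[1::2]))
    # Microseconds need only 20 bits, so the top byte can carry the channel number
    usec_word = (channel << 24) | microseconds
    return struct.pack("<II", seconds, usec_word) + payload

rec = pack_gps_record(1300000000, 500000, 1, 2, [4, 8, 0xF])
print(len(rec), rec[8:11].hex())  # 64 248f00
```

The single leading nibble is what reduces the sentence data capacity from 56 to 55.5 bytes.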

Additional configuration parameters select the source of synchronization, i.e. the moment when the timestamp is sampled. It can be the GPS sync output (if available), the start of the first character in the serial data after a pause, or the start of each individual NMEA sentence.
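The effect of the three synchronization options can be modeled offline with a small Python sketch that, given the start times of received serial characters, returns the moments at which a timestamp would be latched. The `SyncSource` names, the `min_gap_us` idle threshold and the event format are hypothetical; the real selection happens in the FPGA according to its configuration parameters.

```python
from enum import Enum

class SyncSource(Enum):
    GPS_PPS = "gps_pps"                # sync pulse output of the GPS
    FIRST_CHAR_AFTER_PAUSE = "pause"   # first character after an idle gap
    SENTENCE_START = "sentence"        # each '$' that starts an NMEA sentence

def latch_times(source, char_events, pps_times=(), min_gap_us=10_000):
    """Return the times (microseconds) at which the timestamp would be sampled.

    char_events: list of (start_time_us, character) for received characters.
    min_gap_us: hypothetical idle time that counts as a pause.
    """
    if source is SyncSource.GPS_PPS:
        return list(pps_times)
    if source is SyncSource.SENTENCE_START:
        return [t for t, ch in char_events if ch == "$"]
    times, last = [], None
    for t, _ in char_events:
        if last is None or t - last >= min_gap_us:
            times.append(t)            # first character of a new burst
        last = t
    return times

events = [(0, "$"), (2000, "G"), (4000, "\n"), (6000, "$"), (8000, "X"),
          (500000, "$"), (502000, "Y")]
print(latch_times(SyncSource.FIRST_CHAR_AFTER_PAUSE, events))  # [0, 500000]
print(latch_times(SyncSource.SENTENCE_START, events))          # [0, 6000, 500000]
```

Latching on the first character after a pause gives one timestamp per GPS output burst, while latching on each `$` gives one per sentence.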

The third source of logged data is the inter-camera synchronization module. In multi-camera systems (like Eyesis) there is hardware synchronization between the individual camera modules: the “master” camera sends a trigger signal simultaneously to each of the “slave” ones. To simplify the overall synchronization process (each camera has to determine which frame number a particular synchronization pulse corresponds to), the synchronization signal encodes the full 64-bit timestamp of the “master” camera, and this timestamp is normally recorded with the image instead of the local one. The logger can therefore record a pair of timestamps for each acquired image: the “master” timestamp (stored in each camera image) and the local one. This provides the means to match the IMU/GPS data to the “master” clock time.
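One possible way to use the logged timestamp pairs is a least-squares fit of the “master” time as a linear function of the local time (a constant offset plus clock drift), which can then convert any local IMU/GPS timestamp. The linear clock model and the function names below are assumptions for illustration, not the method used in the Eyesis processing software.

```python
def to_seconds(sec, usec):
    """Combine the 32-bit seconds and microseconds parts into float seconds."""
    return sec + usec * 1e-6

def fit_clock_map(pairs):
    """Fit master = mean_m + rate * (local - mean_l) from (local, master) pairs.

    Centering on the means keeps the arithmetic well conditioned for
    epoch-sized timestamps.
    """
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two timestamp pairs")
    mean_l = sum(l for l, _ in pairs) / n
    mean_m = sum(m for _, m in pairs) / n
    sxx = sum((l - mean_l) ** 2 for l, _ in pairs)
    if sxx == 0:
        raise ValueError("local timestamps must not all be equal")
    sxy = sum((l - mean_l) * (m - mean_m) for l, m in pairs)
    rate = sxy / sxx
    return lambda local: mean_m + rate * (local - mean_l)

to_master = fit_clock_map([(100.0, 100.5), (101.0, 101.5001), (102.0, 102.5002)])
print(round(to_master(103.0), 6))  # 103.5003
```

With long recordings a single line may not capture oscillator wander, so fitting over a sliding window of recent pairs could be a reasonable refinement.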

The last (at least for now) source of logged data is the “odometer” channel (it can be used for something else, of course). The external sync port of the NC353 camera has an output that can power an LED and an input that can be connected to a photodiode. These signals can be used for a reflective wheel rotation sensor, providing the system with odometer data. The software can also write an additional message text (up to 56 bytes), e.g. received over the network, to the FPGA internal memory; this data will be logged at the next pulse. Odometer pulses can also come from the software input: in most cases the couple of milliseconds of jitter that exists for network synchronization is not critical here, so the channel can log pure network messages.
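Once the odometer pulses are logged with timestamps, distance and speed follow from the wheel geometry. This Python sketch assumes a known wheel circumference and number of reflective marks (pulses) per revolution; both are hypothetical calibration values, and the function name is illustrative only.

```python
def odometer_track(pulse_times_s, wheel_circumference_m, pulses_per_rev=1):
    """Convert logged pulse timestamps into (time_s, distance_m, speed_m_s) samples.

    wheel_circumference_m and pulses_per_rev are hypothetical calibration values.
    """
    step = wheel_circumference_m / pulses_per_rev   # distance per pulse
    track = []
    for i in range(1, len(pulse_times_s)):
        dt = pulse_times_s[i] - pulse_times_s[i - 1]
        if dt <= 0:
            raise ValueError("pulse timestamps must be strictly increasing")
        track.append((pulse_times_s[i], i * step, step / dt))
    return track

print(odometer_track([0.0, 0.5, 1.0], wheel_circumference_m=2.0, pulses_per_rev=2))
# [(0.5, 1.0, 2.0), (1.0, 2.0, 2.0)]
```

Speed from a single pulse interval is noisy at low speed; averaging over several pulses trades time resolution for smoothness.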

The software driver configures the default logger parameters and the associated DMA channel, provides the means to modify the settings, and reads the DMA memory buffer when data is available, so just

```shell
cat /dev/imu > output_file
```

is all that is needed to record the data from the IMU, GPS (with optional time sync output), odometer and inter-camera synchronization module to a file.
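A file captured this way is a sequence of 64-byte records, so a first look at it can be as simple as splitting it into records and counting them per logged channel. This Python sketch assumes little-endian timestamp words with the channel number in the top byte of the microseconds word; which channel number corresponds to the IMU, GPS, synchronization or odometer data is not specified here, so the output just lists the raw numbers. It reads the whole file into memory, which is fine for a quick check.

```python
import struct
import sys
from collections import Counter

RECORD_SIZE = 64

def split_records(data):
    """Yield (seconds, microseconds, channel, payload) for each complete record.

    Assumes little-endian words, channel in the top byte of the microseconds word.
    A trailing partial record is ignored.
    """
    for off in range(0, len(data) - RECORD_SIZE + 1, RECORD_SIZE):
        seconds, usec_word = struct.unpack_from("<II", data, off)
        yield seconds, usec_word & 0xFFFFFF, usec_word >> 24, data[off + 8:off + RECORD_SIZE]

def count_by_channel(data):
    """Count records per logged channel number."""
    return Counter(channel for _, _, channel, _ in split_records(data))

if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], "rb") as f:   # e.g. the file written by cat /dev/imu
        for channel, n in sorted(count_by_channel(f.read()).items()):
            print(f"channel {channel}: {n} records")
```

Run as `python3 count_channels.py output_file` (the script name is arbitrary).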

This functionality will be a part of the Eyesis-4pi system, and we also plan to use it with the NC353 cameras. The initial revision of the assembled boards has been tested and we have placed an order for the final ones with some minor bug fixes; we also ordered a modified top cover for the camera with extra recesses and holes to accommodate the new hardware (see the CAD rendering above). We plan to post the raw logged data together with Eyesis images in the next couple of weeks.
