CVPR 2018 – from Elphel’s perspective

In this blog article we recall the most interesting results of Elphel's participation at the CVPR 2018 Expo: the conversations we had with visitors at the booth, the frequently asked and the unusual questions, and what we learned from them. In addition we explain our current state of development, our near and far goals, and how the exhibition helps us achieve them.

The Expo ran from June 19 to 21, and each day had its own focus and results, so this article is organized chronologically.

Day 1: The best show ever!

While we are standing nervously at our booth, thinking: “Is there going to be any interest? Will people come, will they ask questions?”, the first poster session starts and a wave of visitors floods the exhibition floor. Our first guest at the booth spends 30 minutes knowledgeably inquiring about Elphel’s long-range 3D technology and leaves his business card, saying that he is very impressed. This was a good start to a very busy day full of technical discussions. CVPR is the first exhibition we have participated in where we did not have any problems explaining our projects.

The most common questions that were asked:

Q: Why does your camera have 4 lenses?

A: With 4 lenses we have 4 stereo-pairs: the horizontal pair is responsible for vertical features (the same as in a regular stereo camera), the vertical pair detects horizontal features, and the 2 diagonal pairs ensure that almost any edge is detected (Elphel Presentation, p.8).
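The pairing can be illustrated with a minimal sketch. It assumes a hypothetical layout with the four lenses at the corners of a unit square (the names and coordinates below are illustrative, not taken from the camera design) and groups every possible lens pair by the direction of its baseline. Under that assumption the six possible pairs fall into four baseline directions: horizontal, vertical and two diagonals.

```python
from itertools import combinations
from math import atan2, degrees

# Hypothetical layout: four lenses at the corners of a unit square (x right, y up).
LENSES = {
    "top_left": (0, 1),
    "top_right": (1, 1),
    "bottom_left": (0, 0),
    "bottom_right": (1, 0),
}

def baseline_orientation(p, q):
    """Direction of the line joining two lenses, in degrees, folded into [0, 180)."""
    angle = degrees(atan2(q[1] - p[1], q[0] - p[0]))
    return round(angle) % 180

def pairs_by_orientation(lenses):
    """Group every lens pair by the orientation of its baseline."""
    groups = {}
    for (name_a, pos_a), (name_b, pos_b) in combinations(lenses.items(), 2):
        key = baseline_orientation(pos_a, pos_b)
        groups.setdefault(key, []).append((name_a, name_b))
    return groups

groups = pairs_by_orientation(LENSES)
for orientation in sorted(groups):
    print(orientation, groups[orientation])
```

A pair measures disparity along its baseline, so it responds best to edges that cross that direction. This is why a horizontal pair picks up vertical features and a vertical pair picks up horizontal ones.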

Q: Why don’t you use lidars?

A: Lidars are not capable of long-range distance measurements. The practical maximum range of a lidar is 150-200 meters, while we target 500-2000 meters with a 150 mm stereo-base. (Elphel Presentation, p.7)

Q: How do you deal with thermal expansion?

A: There are 2 parts to this question: 1) Thermal expansion of the calibrated lens is taken care of by the design of the sensor-lens module; 2) Thermal expansion of the whole camera is also an issue, especially with the larger stereo-base. The titanium tubes that form the cross of the X-Camera expand more on the “sunny side”, and the whole camera bends inward, like a flower. When taking images under the hot Utah sun, we had to wrap the tubes with insulation, covered in aluminum foil.

After some consideration we decided to remove the insulation wrapping for the CVPR show, because it makes the camera more presentable, and were surprised that this question came up so many times – we almost put the insulation cover back on.

Q: Do you build custom lenses?

A: We pre-select standard M12 lenses, because the quality of the lens can vary even within the same model. We have developed a full calibration process and post-processing software to compensate for optical aberrations, which preserves the full sensor resolution over the camera FOV. Read more about aberration correction on the Elphel blog.

Q: What is your part of the technology?

A: Everything. We have all the parts: hardware (cameras with aberration-corrected lenses and a thermally compensated sensor-lens module), a state-of-the-art calibration facility and process, and 3D-reconstruction software (Free Software, GNU GPL).

Q: What is the accuracy of your photogrammetry?

A: We comfortably work with 1/10 of a pixel.
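To see why sub-pixel accuracy matters for long range, here is a minimal sketch based on the standard pinhole stereo relation, distance = focal length x baseline / disparity. It is not Elphel's reconstruction code. The focal length in pixels (`FOCAL_PX`) is a hypothetical value chosen only for illustration, so the printed numbers are not the camera's actual performance.

```python
def disparity_px(distance_m, baseline_m, focal_px):
    """Disparity (in pixels) of a point at distance_m for an ideal stereo pair."""
    return focal_px * baseline_m / distance_m

def relative_range_error(distance_m, baseline_m, focal_px, disparity_err_px=0.1):
    """First-order relative distance error caused by a given disparity error."""
    return disparity_err_px / disparity_px(distance_m, baseline_m, focal_px)

FOCAL_PX = 2000.0  # hypothetical focal length in pixels, not a figure from this article

err_500 = relative_range_error(500.0, 0.150, FOCAL_PX)
err_1000 = relative_range_error(1000.0, 0.150, FOCAL_PX)
err_wide = relative_range_error(500.0, 0.300, FOCAL_PX)

print(f"500 m, 150 mm base:  {err_500:.1%}")
print(f"1000 m, 150 mm base: {err_1000:.1%}")
print(f"500 m, 300 mm base:  {err_wide:.1%}")
```

Whatever the lens, the relative error in this model grows in proportion to distance and shrinks in proportion to the stereo-base and to the disparity accuracy. That is why a finer fraction of a pixel, or a wider base, extends the usable range.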

Q: How dense is your 3D scene?

A: Currently: 324 x 242 overlapping tiles, with no dead zones.
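The tile count can be related to image size with a little arithmetic. The sketch below assumes a 2592 x 1936 pixel image and one tile placed every 8 pixels in each direction. Neither figure is stated in this article, so both are assumptions chosen for illustration; border handling is ignored.

```python
def tile_grid(width_px, height_px, stride_px):
    """Number of tiles across and down when one tile is placed every stride_px pixels."""
    return width_px // stride_px, height_px // stride_px

# Assumed values, not taken from this article.
ASSUMED_WIDTH_PX = 2592
ASSUMED_HEIGHT_PX = 1936
ASSUMED_STRIDE_PX = 8

cols, rows = tile_grid(ASSUMED_WIDTH_PX, ASSUMED_HEIGHT_PX, ASSUMED_STRIDE_PX)
total_tiles = cols * rows
print(cols, rows, total_tiles)
```

Tiles that are wider than the stride overlap their neighbors, so every pixel is covered by more than one tile and no gaps are left between them.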

Q: Does it build a 3D model in real-time?

A: Currently the 3D model is reconstructed in software, in post-processing of the acquired images. This software is tested for FPGA porting, with which we can achieve 12 fps 3D reconstruction on the camera. Our final goal is to design and manufacture a custom ASIC with 3D processing on the chip. (Elphel Presentation, p.17)

The last visitor of the day, from a large European company, stopped at our booth around 6pm, 30 minutes before the end of the day, and we spent another hour talking and showing Elphel’s camera and the accurate, high-resolution images it produces. The lights on the floor went down, but we stayed and explained the technology behind our results and our future plans. It was a nice day, well spent.

Day 2: Neural network focus

Our current results:

- 500 meters, high resolution, 3D reconstruction with 5% accuracy with 150mm stereo-base (quad-stereo camera), passive (image-based)

- 2000 meters with 5% accuracy with 2 quad-stereo cameras based at 1256 mm from each other

- 3D-Reconstruction is done in post-processing with software simulated for FPGA porting. With FPGA – 12fps in 3D

Our near future goals:

- Train the neural network with the current 3D-image sets to achieve the same result as the second item above (2000 meter 3D reconstruction) with just one quad-sensor camera

- Port software to FPGA to achieve real-time 3D reconstruction (12 fps)

Our long-term goals:

- Manufacture a custom ASIC for 3D-reconstruction to reconstruct 3D scenes on the fly at video frame rate. The small size of the custom chip will allow us to mass produce a long-range 3D reconstruction camera for industrial and commercial purposes: smaller form-factor, inexpensive, and fast.

- Scalable design: a smaller stereo-base with 4 sensors – phone application (200 meter accurate 3D reconstruction for consumer applications); larger stereo-base: extremely long range passive 3D reconstruction – military applications, drone applications, etc.

CNNs are not efficient for real-time high-resolution 3D image processing.

Elphel’s approach to 3D reconstruction with the help of a neural network is different from the mainstream one. This is partially due to our expertise in building calibrated hardware, which already produces robust 3D measurements. Therefore we see value in pre-processing the images before feeding them to the NN, similar to the eye pre-processing visual information before sending it to the brain. The human eye receives 150 MPix of data, processes it on the retina, and sends only 1 MPix to the brain, a 150-fold reduction.

One question came up twice, both times from conference attendees, about the difficulty an NN has in processing high-resolution images. The current approach to NN training recommends using low-resolution raw images (640×480), because the NN has to be trained on a large number of images (thousands). The last 6 slides of Elphel’s presentation talk about augmenting the NN with Elphel's high-performance Tile Processor, which significantly reduces the amount of data while leaving the “decision making” to the network.

We argue that the End-to-End approach is less efficient for high-resolution image processing than an NN augmented with training-tree, linear image processing.

Elphel training sets for Neural Network:

Elphel offers unique image sets for neural network training. These are high-resolution, space-invariant data sets.

Elphel is seeking collaboration with machine learning research teams working in the area of ML applications for 3D object classification, localization and tracking.

Day 3: Autonomous vehicle focus

The exhibition is slowing down. Some booths start packing at midday. Elphel, however, is busier than ever.

We have talked to just about every self-driving car company that had a presence at CVPR. We also talked to other large corporations doing research in perception and 3D-reconstruction.

We start by visiting our neighbors, the other exhibitors at the Expo. We are very curious about what they develop and for which applications: can it be combined with our technology, can we learn something from them, and can they learn from us? It is in this collaborative spirit that we start each conversation. One of our closest neighbors is Mapbox, an open source mapping platform for custom designed maps, so we start with them. We use OpenStreetMap as well as other maps with our Scene Viewer for 3D-reconstructed scenes, both for locating scenes on the map and for ground truth data. During the lunch break we add Mapbox tiles to our scene viewer. The Mapbox exhibitors are pleased that it worked so well for us. Now they are also very interested in our technology!

Then we walk to other booths, learn about them and present Elphel.

Some of the responses we got when we introduced our technology and our current results:

- Researcher from Apple: “You have the most unique technology on this Expo!”

- Autonomous Vehicle company A: “I can not believe you can accurately measure distances at 500 meters with just a small base of 150mm? With just 5-10% error? It is simply impossible! I have to come see it!” After visiting our booth and seeing the demo he asks: “Can you demonstrate your technology on the car? It has to be in Singapore!”

- Autonomous Vehicle company B: “We are happy with the use of the Lidars on the city streets, with close range perception and 30 mph driving speed. But on the highway, where the speed is much higher and the distance much farther, the Lidar’s output is too sparse. Passive, long-range 3D reconstruction would be a perfect solution.”

- Autonomous Vehicle company C: “How dense is your 3D scene? Oh, it is dense, then we can use it.”

- Autonomous Vehicle company D: “We are building the perception solution for self-driving trucks. We need long-range distance measurement.”

- Autonomous Vehicle company E: “We have 16 different types of sensors on the car right now. I don’t think we have room to put another camera. Besides, the area behind the windshield is very valuable, we already have 4 cameras there – there is no room. By the way, we re-evaluate our approach every 6 months, and we just did that, so let’s, maybe, talk in 6 months again?”

An interesting thought: why would anyone need an unobstructed windshield in a self-driving car? They did not explain that.

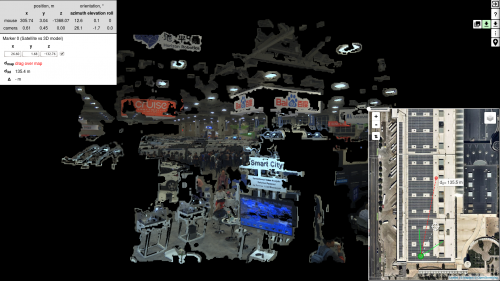

View of the CVPR 2018 Expo “From Elphel Perspective”:

One last thing to do before the show is over is to get the 3D-X-Cam up high and build the 3D model of the CVPR 2018 EXPO in “real-time”!
