SCINI Takes Elphel Under Antarctic Ice

The Submersible Capable of under-Ice Navigation and Imaging (SCINI) is an underwater remotely operated vehicle (ROV) designed to facilitate oceanographic science research in extreme polar environments. The project was part of a three-year grant from the National Science Foundation, starting in 2007, that aimed to develop a cost-effective research tool that can be easily deployed through a 20cm hole in the ice. Over the past three austral summers, field teams led by benthic ecologist Dr. Stacy Kim and SCINI inventor Bob Zook took the ROV into the cold southern waters surrounding McMurdo Station, Antarctica, to capture video from parts of the natural world that have never been seen before.

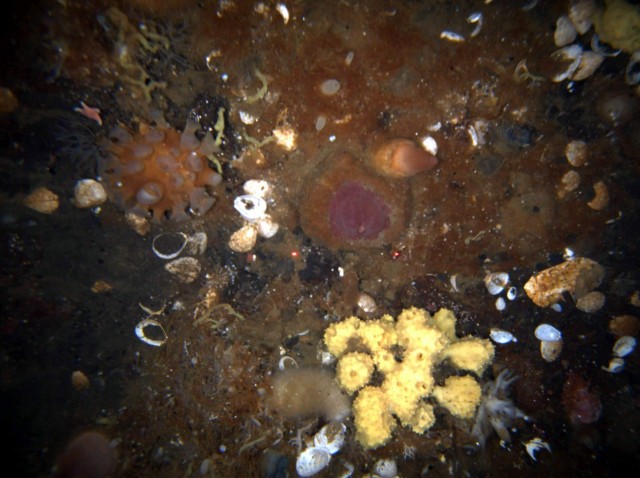

Imagery taken by ROVs such as SCINI is very exciting to oceanographic researchers today. The Antarctic sea floor below safe diving depths (deeper than 40m) has been studied very little. One of the reasons for this is the difficulty and cost associated with surveying multiple sea floor areas located beneath several meters of ice. Most ROVs have a wider diameter than SCINI, which quickly boosts the amount of logistic support and energy required to create a large enough hole for deployment. In contrast, drilling a hole big enough to deploy SCINI is accomplished easily using hand-held Jiffy ice drills, allowing research teams to survey many areas during their short field season.

We chose the Elphel 353 and 5MP sensors for SCINI during the early design phases. After evaluating several other platforms (mostly CCTV-type cameras due to their relatively low cost), it quickly became apparent that the Elphel’s openness, large feature set, excellent support team, and extreme flexibility made it an ideal choice for a field research tool.

Underwater Science With Elphel

Conducting science in the field with SCINI and the Elphel cameras is an amazing experience. Once our team leaders have picked a general area, we have to identify potential dive sites where we can reach the sea floor (max depth around 300m), and we need to be able to drill at least two but preferably three holes in a triangular pattern with dimensions corresponding to the ocean depth to deploy both SCINI and our acoustic positioning transducers. In some places, such as on the sea ice, this is as simple as measuring the distance and drilling because the ice is flat for miles and we know that it is less than 8m thick — we only have so many flights for our drill. In other locations, such as atop the Ross Ice Shelf, the ice will be much, much thicker and riddled with a maze of dirt and ice mounds. In this case, we will usually try to identify and profile a crack from the air and on foot where we can sneak through the much thicker surrounding ice shelf. Our visits to Bratina Island and Becker Point exemplified both of these conditions.

Next, we transport nearly 400kg of equipment to the dive site by helicopter, PistenBully, snowmobile, or “man-hauling” (pulling the sled with a line), and then begin preparing for the dive. In addition to drilling the holes, we add floats to the long tether so that both it and SCINI will be neutrally buoyant, and set up the control room. If we have driven from McMurdo to our site for the day, we will typically locate our control room in the back of the PistenBully. For more remote locations that require helicopter support, we set up the control room inside a Kiva tent, as shown below. The second picture here shows the inside of the tent.

Once the control room is set up, we place SCINI into the hole, check her equipment, and dive down to the sea floor. While underwater, the Elphel cameras become the primary tool for both piloting and gathering data to support our research goals.

Several research objectives were identified for SCINI’s initial three-year grant:

- Locate “lost” experiments from the 1960s that are now inaccessible to divers (see http://scini.mlml.calstate.edu/SCINI_2007/historical.html for more information).

- Survey new benthic communities around McMurdo Sound.

- Create sea floor image mosaics.

- Engineer and prove the viability of the SCINI platform for research!

Video Configuration

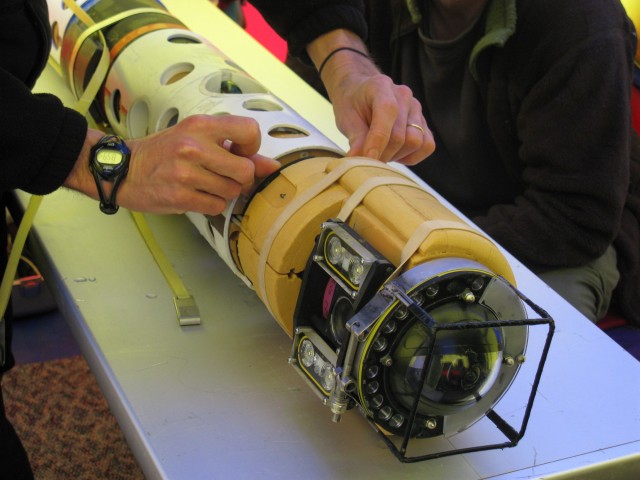

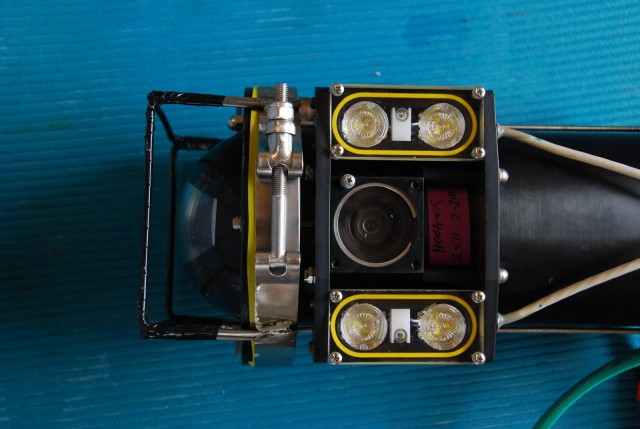

The 2009 field season was the first time we deployed SCINI with both a forward-facing and downward-facing camera. During the first two seasons, all piloting and scientific imagery was captured using only a forward-facing camera with a fisheye lens. This presented some challenges while diving, lens distortion notwithstanding. First, the image needs of the pilot and the researcher may be different. The pilot usually only requires enough detail to see where they are going, more light as they are generally peering into dark water, low latency, and a high frame rate. The researcher needs high quality sea floor images with no motion blur (fast exposure time) but can tolerate a little more latency and a slower frame rate. Second, with only a single camera and a benthic ecologist on board, retrieving high quality stills from the sea floor might require the pilot to position SCINI at steep angles to view areas of interest, and then straighten out again to continue exploring the survey area. Since we obviously could not always drive SCINI while tilted, we needed a new solution. Below, you can see the new housing that supports a second downward-facing Elphel 353 with a narrower lens.

SCINI’s lights are PWM-controlled Luxeon Rebel cool-white 3W LEDs that produce about 70 lumens per watt. The forward-facing ring is composed of 21 units while the downward-facing fixture is composed of four 3-LED stars. This provides decent lighting for the pilot and plenty of light for good photos a meter or two above the sea floor. We also tested the summation mode binning feature of the Aptina 5MP sensors this last season, as we usually run the forward camera at 1/4 or 1/2 binning. The default sensor mode averages the binned pixel values in the columns, while in summation mode the binned pixel values are added together, which provides a noticeable brightening of images. In practice, though, the difference didn’t improve our ability to pilot enough for us to leave it enabled. Our downward camera operates at full resolution all the time, so this feature wouldn’t apply there.
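To make the difference between the two binning modes concrete, here is a minimal sketch in Python. It is only an illustration, not the sensor's actual implementation: it bins adjacent values in a single row, whereas a real Bayer sensor bins pixels of the same color, and the 12-bit ceiling (`max_value=4095`) and the pixel values are assumptions made for the example.

```python
def bin_row(row, factor, mode="average", max_value=4095):
    """Bin groups of `factor` adjacent pixel values from one row.

    mode="average" mimics the default behaviour described above;
    mode="sum" mimics summation mode, clipped at `max_value`
    (an assumed 12-bit ceiling, not taken from the sensor datasheet).
    """
    if factor < 1:
        raise ValueError("factor must be at least 1")
    binned = []
    # Walk the row in non-overlapping groups; a trailing partial group is dropped.
    for start in range(0, len(row) - factor + 1, factor):
        total = sum(row[start:start + factor])
        if mode == "average":
            binned.append(total // factor)
        elif mode == "sum":
            binned.append(min(total, max_value))
        else:
            raise ValueError("mode must be 'average' or 'sum'")
    return binned


# Hypothetical dim-water pixel values, binned by 2.
dim_row = [100, 120, 90, 110, 400, 420, 80, 60]
averaged = bin_row(dim_row, 2, mode="average")
summed = bin_row(dim_row, 2, mode="sum")
```

With a binning factor of 2, each summed value is about twice the averaged one until it hits the ceiling, which is the brightening we saw in dark water.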

We experienced a couple minor issues with image acquisition during dives, but the flexibility of the Elphel platform made these issues very easy to troubleshoot. During one of our deep dives we noticed some odd dulling of color when the image window of interest (WOI) passed over brightly-colored sea life. The creatures were lightly scattered amongst a sparsely populated and generally dull-colored sea bed. We didn’t want this to happen while gathering data, but we were out in the field with no Internet access and I didn’t see anything obvious in the web GUI that might be causing the issue. Being a *nix guy, the embedded Linux OS and shell came in handy. It only took a couple seconds to see the “autoexposure” process running, which I promptly killed. Problem solved.

Another small issue we encountered was a blue tint that would infrequently appear on our images. Andrey quickly helped us figure out that it was related to our 3-4″ sensor cables and gave us a few solutions that we will test this summer.

All data to and from the submersible is modulated on our high-voltage AC power conductors using Homeplug AV-based Ethernet bridge adapters. The use of powerline technologies allows us to keep tether weight and costs to a minimum since we don’t require dedicated data wires. We removed the housing from Linksys PLE-300 and Belkin 3-port units to fit them inside the electronics and camera bottles. They provide 100Mb Ethernet connections to our Lantronix microcontroller interfaces and Elphels. Vicor power modules and an AC line filter keep the power clean enough to give us up to 60Mbps UDP throughput over 300m of tether. Again, thanks to the Linux shell on the Elphels, testing and monitoring our maximum throughput performance was as simple as issuing

dd if=/dev/zero bs=1400 count=100000 | nc -u <surface_IP> -p 1234

from the camera, and then viewing the throughput in Wireshark.
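As a rough sketch of what this test measures, the Python below works out the size of the `dd` payload and converts a byte count and elapsed time into Mbps. The `measure_udp` receiver is a hypothetical surface-side alternative to watching Wireshark; it is not part of our toolchain, and its port and idle timeout are assumptions.

```python
import socket
import time


def payload_bytes(block_size, count):
    """Total bytes produced by dd with the given bs and count."""
    return block_size * count


def throughput_mbps(num_bytes, seconds):
    """Convert a byte count over an interval into megabits per second."""
    return num_bytes * 8 / seconds / 1e6


def measure_udp(port=1234, idle_timeout=2.0):
    """Count incoming UDP bytes until the stream goes quiet (hypothetical helper).

    Returns (total_bytes, mbps). Timing starts at the first datagram, so the
    result is slightly optimistic for very short bursts.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("", port))
    sock.settimeout(idle_timeout)
    total = 0
    first = last = None
    try:
        while True:
            try:
                data, _ = sock.recvfrom(65535)
            except socket.timeout:
                if first is not None:
                    break  # stream has ended
                continue   # still waiting for the first datagram
            last = time.monotonic()
            if first is None:
                first = last
            total += len(data)
    finally:
        sock.close()
    if first is None or last == first:
        return total, 0.0
    return total, throughput_mbps(total, last - first)


if __name__ == "__main__":
    size = payload_bytes(1400, 100000)
    print(size, "bytes;", size * 8 / 60e6, "seconds at 60 Mbps")
```

The `dd` command above sends 140,000,000 bytes, so at 60Mbps a full run should take a little under 19 seconds.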

Custom Elphel Interface

Our primary surface tool for retrieving and storing Elphel video imagery is called Image Annotation Tool, or IAT. This custom application was initially built by Desert Star Systems (the company that also builds our SBL underwater positioning system) on Windows using C#.NET and has been incorporated into the SCINI deployment kit since 2008. It provides a user-friendly interface and a simple set of camera controls:

- Exposure for streaming video and full-resolution stills

- Decimation/resolution and JPEG quality

- Take a full resolution snapshot (using snapfull.php!) by hitting <CTRL>

- Store image annotations in the log file and in the image IPTC header

- Keep a running log of image and sensor data

We send custom URLs to camvc.php to manipulate our sensor and FPGA parameters. This control interface was recently updated to work with camvc.php for firmware version 8.0+. During this update process, we noticed 70-80ms of latency was generated every time we changed settings. At first we thought it might have something to do with the process of changing hardware settings via I2C (this was our first time using the streamlined 8.0 firmware). We quickly learned that loading and parsing the large camvc.php file was responsible for all the processing time. Fortunately, the great Elphel folks (thanks, Sebastian!) already had a small script called setparam.php that was only a few lines of code and executed very quickly.

We use imgsrv to retrieve video as individual frames with a simple ‘GET /img’ request. This interface was especially easy to add to our application, and working with individually timestamped JPEG files has been a mostly convenient way to retrieve and view the data later.
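IAT itself is written in C#, but the frame-by-frame approach can be sketched in a few lines of Python. This is an illustration only: the port number (8081), the file naming scheme, and the helper names are assumptions, and only the ‘GET /img’ request comes from our actual setup.

```python
import os
import urllib.request
from datetime import datetime, timezone


def frame_filename(timestamp, prefix="frame"):
    """Build a sortable, timestamped JPEG file name (hypothetical naming scheme)."""
    return f"{prefix}_{timestamp.strftime('%Y%m%dT%H%M%S_%f')}.jpg"


def fetch_frame(camera_host, port=8081, timeout=2.0):
    """Issue a single GET /img request and return the JPEG bytes.

    The port is an assumption; adjust it to wherever imgsrv listens.
    """
    url = f"http://{camera_host}:{port}/img"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def save_frame(camera_host, directory="."):
    """Fetch one frame and store it under a name based on the receive time."""
    data = fetch_frame(camera_host)
    path = os.path.join(directory, frame_filename(datetime.now(timezone.utc)))
    with open(path, "wb") as handle:
        handle.write(data)
    return path
```

Calling `save_frame` in a loop gives one JPEG per request. Note that this sketch stamps each file with the surface computer's receive time rather than the camera's own capture time.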

The video feed is displayed in the main viewing window shown above as well as a slave display on an external monitor. Two copies of this application were run for each mission during SCINI’s 2009 field season. One copy of IAT, controlled by the scientist, captured images from the downward-facing camera while the other copy, controlled by the pilot, captured images from the forward-facing camera.

Future

SCINI has a promising future ahead. Both Bob and Stacy have projects in the works to return to the Antarctic for the 2010 field season. Bob will be working with Andrill to develop “SCINI Deep,” capable of reaching 1.5km depth and supporting their deep drilling operations. Stacy will be returning to McMurdo with fellow scientists Paul Dayton and John Oliver to resample the “lost experiments” and continue researching decadal ecological shifts in benthic communities. All of this new research means new technology for SCINI as well. To go deeper than 300m, several changes must be made to the housings and foam currently in use. We will also have to investigate the type of conductors in the tether (twisted pair, coaxial, fiber optic) and the data bridges to maintain our high speed connection. We have been unable to use current generations of Homeplug AV adapters to pass data over the existing tether further than about 450m. Devices based on the forthcoming IEEE P1901 standard are slowly becoming available and may help us in this endeavor. The long link from surface to sea floor will likely utilize an active clump weight, which must also be developed. Stacy’s work will require the addition of a gripper arm. Another possibility for SCINI’s hardware is the addition of a small multibeam sonar device to create new bathymetric maps around McMurdo Sound.

We are also looking into new features for IAT. We are interested in adding an RTSP streaming mode with Gstreamer or VLC and support for the JP46 raw mode. I was inspired by this post as we have had difficulty in the past using .NET’s built-in libraries for useful, real-time image processing. We are also considering logging much of our sensor data in the UserComment EXIF tag on each image. Modifying this field in real time and non-destructively (without recompressing each image) using .NET’s built-in support should be possible as long as the tag already exists. I’m glad this is so easy to do in the camera. Needless to say, storing scientific data this way is very non-standard, but it is available to us now. In the farther future, we might look at storing this data as XML-formatted netCDF in XMP metadata. The fun will never end!
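As a sketch of the UserComment idea, the Python below packs a few sensor readings into a fixed-size payload and reads them back. The 8-byte `ASCII` character-code prefix is part of the Exif UserComment format; the field names, the `key=value;` layout, and the 128-byte size are assumptions for illustration and not a format we have settled on. Padding to a fixed size is one way to overwrite an existing tag in place without shifting the rest of the file.

```python
# Exif UserComment begins with an 8-byte character code identifying the encoding.
ASCII_CODE = b"ASCII\x00\x00\x00"


def encode_user_comment(fields, size=128):
    """Pack readings as 'key=value;key=value', padded with spaces to `size` bytes."""
    text = ";".join(f"{key}={value}" for key, value in fields.items())
    payload = ASCII_CODE + text.encode("ascii")
    if len(payload) > size:
        raise ValueError("sensor data does not fit in the reserved tag size")
    return payload.ljust(size, b" ")


def decode_user_comment(payload):
    """Reverse encode_user_comment; values come back as strings."""
    if not payload.startswith(ASCII_CODE):
        raise ValueError("unsupported UserComment character code")
    text = payload[len(ASCII_CODE):].decode("ascii").rstrip(" \x00")
    return dict(item.split("=", 1) for item in text.split(";") if item)


# Hypothetical readings for one image.
reading = {"depth_m": 301.0, "heading_deg": 87}
comment = encode_user_comment(reading)
restored = decode_user_comment(comment)
```

Writing the payload into the JPEG is left out here, since that depends on the EXIF library in use.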

Conclusion

Overall, SCINI has been a total success during its first three years, and the Elphel cameras played a significant role in this achievement. We completed 31 scientific dives, a number of technical dives, and reached a maximum depth of 301m. The scientific data is still being analyzed, and papers about SCINI and its work are under development.

For more information on SCINI (check out the Youtube movie!!), images from the Antarctic, the technology we use, and our “Daily Slog” from the field, please post some comments or visit the following links:

- http://www.youtube.com/watch?v=RwPOiuVb4-A

- http://scini.mlml.calstate.edu/

- http://iceaged2010.mlml.calstate.edu/scini/

- http://www.desertstar.com

- http://antarcticsun.usap.gov/science/contenthandler.cfm?id=2092

David, thank you so much for your nice post, explaining what worked and what did not (at least out-of-the-box) in that exciting and challenging application.

With many other customers we can only indirectly know if the cameras work as expected when they stop updating email or mailing list postings with questions. But maybe that just means that some of them gave up on trying to use these cameras.

Over the last half year we were actively working on improving the optical performance of the cameras (so far we were only concerned about electronics and code) while working on low-parallax single-release panoramic cameras, and some of these advances may benefit the SCINI project. Additionally, we now have the complete in-camera image conversion parameters embedded in the Exif MakerNote tag, along with some software (an ImageJ plugin and movie2dng). Such software makes it possible to un-apply the in-camera conversion (black level, gamma, analog gains) that is needed for effective compression of the images without losing sensor pixel data, and to restore the images so that each pixel has a higher than 8-bit range value proportional to the number of photons detected by the sensor. We also implemented more FPGA code to balance image size and noise filtering.

Andrey

Thanks for the tip! We will definitely look at the updated firmware.
